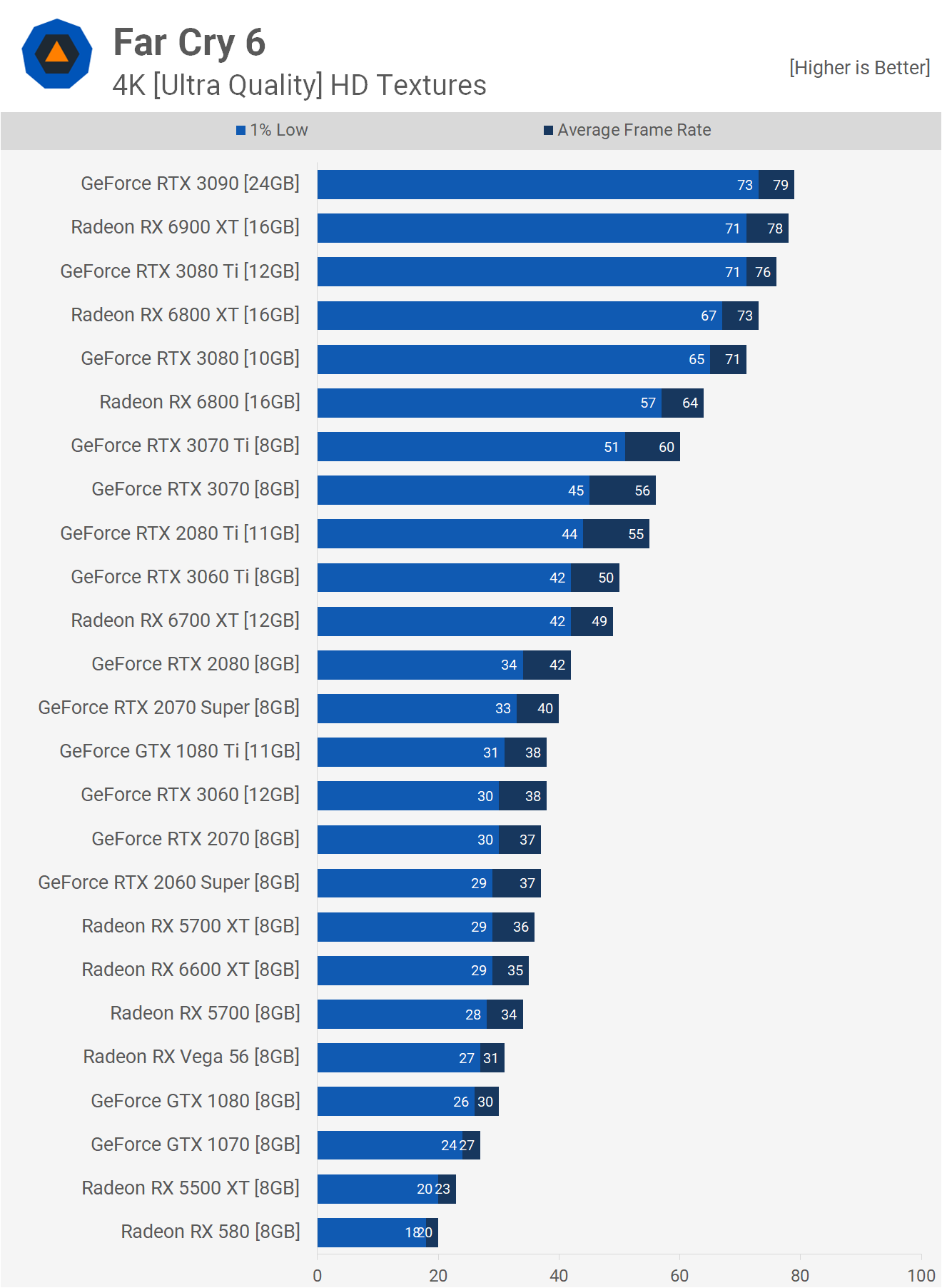

Yeah, that's what I've said all along: pretty much the same performance between them. Yet people want to think the 6700XT is a 3070 competitor because it's priced close to the 3070. It's not. The 3070 is 10-15% faster than both of these cards at 1440p/4K.

The 6700XT might have more VRAM, but it suffers from lower memory bandwidth and its 192-bit bus at higher resolutions, which is why the 3060 Ti beats it at 4K. DLSS is also a lifesaver here, though. FSR too, yeah, but DLSS is far more common, and Nvidia cards support FSR as well.
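To put rough numbers on the bandwidth point: peak memory bandwidth is just bus width times per-pin data rate, divided by 8 bits per byte. The sketch below assumes the reference memory specs (6700XT: 192-bit GDDR6 at 16 Gbps; 3060 Ti and 3070: 256-bit GDDR6 at 14 Gbps); the function name is just for illustration.

```python
# Peak DRAM bandwidth from bus width and per-pin data rate.
# Card specs below are assumed reference-board figures.

def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return peak bandwidth in GB/s: bus width (bits) * Gbps per pin / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

cards = {
    "RX 6700 XT": (192, 16.0),   # 192-bit GDDR6 at 16 Gbps
    "RTX 3060 Ti": (256, 14.0),  # 256-bit GDDR6 at 14 Gbps
    "RTX 3070": (256, 14.0),     # 256-bit GDDR6 at 14 Gbps
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(bus, rate):.0f} GB/s")
```

That works out to 384 GB/s for the 6700XT versus 448 GB/s for the 3060 Ti and 3070. This only counts raw DRAM bandwidth and ignores the 6700XT's on-die Infinity Cache, so treat it as a rough comparison.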

The 6800 non-XT is closer to the 3070 than the 6700XT is.

And this is why the 6700XT should have been $399 tops.

12GB might age better, but the 3060 Ti has better RT performance, and more and more games will include RT elements, like Metro Exodus EE. That means RT performance will matter even on the lowest settings.

These are 1440p cards, definitely not 4K/UHD cards. You won't really need more than 8GB on a GPU in this class. They can't run AAA games maxed out at 4K anyway, so VRAM usage won't be high to begin with.

8GB is plenty for 1440p maxed. The most demanding games use around 5-6GB at most at 1440p.

12GB on the 6700XT is pointless; it's not about futureproofing. The only alternative on a 192-bit bus was 6GB, so I understand why they went with 12GB just in case.
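Here's why a 192-bit bus leaves only 6GB or 12GB as options: each GDDR6 chip has a 32-bit interface, so the bus width fixes the chip count, and the chip density fixes the total. A minimal sketch, assuming one chip per 32 bits of bus and the common 1GB/2GB densities (it ignores clamshell layouts that put two chips on one interface); the names are hypothetical.

```python
# VRAM capacities a given bus width allows, assuming one GDDR6 chip per
# 32 bits of bus and common 1GB (8Gb) or 2GB (16Gb) chip densities.

BITS_PER_CHIP = 32        # each GDDR6 chip exposes a 32-bit interface
CHIP_SIZES_GB = (1, 2)    # common GDDR6 densities (assumption)

def vram_options_gb(bus_width_bits: int) -> list[int]:
    """Return total VRAM options in GB for each assumed chip density."""
    chips = bus_width_bits // BITS_PER_CHIP
    return [chips * size for size in CHIP_SIZES_GB]

print(vram_options_gb(192))  # 6700XT and 3060 bus width
print(vram_options_gb(256))  # 3060 Ti and 3070 bus width
```

A 192-bit bus gives six chips, hence 6GB or 12GB, which is also why the 3060 ended up with 12GB. A 256-bit bus gives eight chips, hence 8GB or 16GB.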

It's exactly the same with the 3060 non-Ti: a GPU this weak (40-45% slower than the 3060 Ti) will never be able to use 12GB, which is why the 3060 Ti beats the 3060 with ease even at 4K.

By the time 12GB is needed for 1440p gaming at maxed-out settings, all these GPUs will be too slow to play at anything above low to medium settings, sooo...