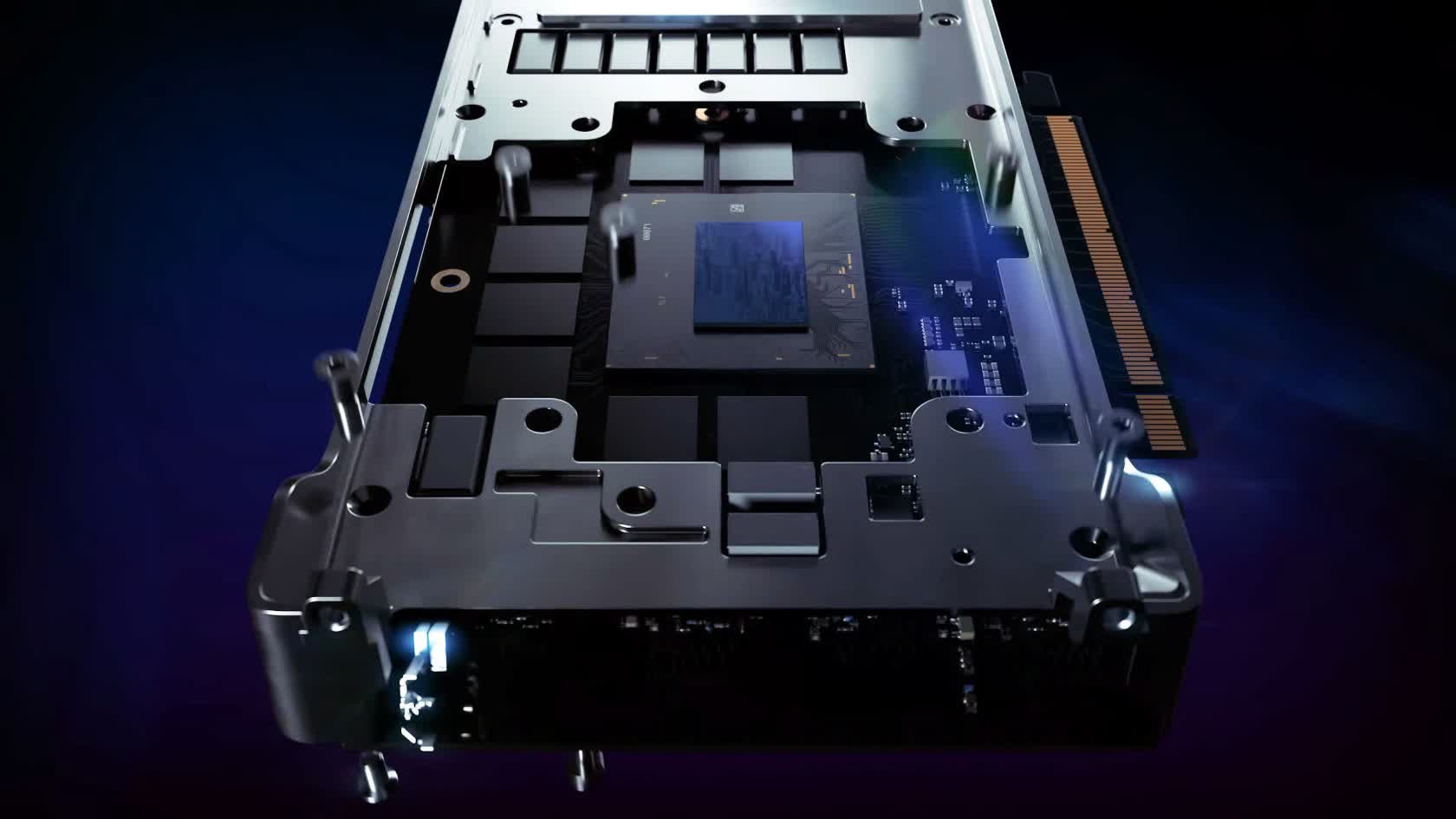

Forward-looking: Intel has finally started releasing its mobile Arc GPUs and is poised to launch its first desktop Arc GPUs later this summer. Near the top of the stack will be the Arc A770, a fully enabled part with 512 EUs, a boost clock of 2 to 2.5 GHz, and 16 GB of memory.

Intel first announced the flagship Arc hardware as the ACM-G10 GPU and then paper-launched it as the A770M for mobile. Laptops with the A770M should appear on shelves in the coming months. Its unannounced desktop equivalent has now been found in the Geekbench OpenCL database.

It's not a groundbreaking leak, since the hardware has leaked many times before, including once already in the Geekbench database. It is, however, the first time it has appeared under its full official name: Intel Arc A770 Graphics.

It's also another dot on the clock speed map: 2.4 GHz. Late last year, we reported rumors of 2.5 GHz; then, in February, we saw 2.4 GHz for the first time. Intel itself teased 2.25 GHz last month, but it may have been referring to a different model.

And the critical bit: in line with past leaks, the A770 achieved an OpenCL score of 85,585 points. That's slightly more than the RX 6600 XT, about the same as the RTX 2060 Super and RTX 2070, and a good bit less than the RTX 3060. In other words, thoroughly midrange.

But that isn't the whole story. Geekbench breaks the OpenCL score down into 11 component categories, and some architectures do better in certain categories than others. For example, the 6600 XT is about 35% faster than the 3060 in the Gaussian blur test, despite being slower in eight categories and having a worse overall score.

Although the 3060 bests the A770 in more than half the categories, the A770 takes a significant lead in the particle physics and Gaussian blur tests. It loses by the largest margins in the Sobel, histogram equalization, horizon detection, and Canny tests, all of which are based on computer vision.

From this, a pattern emerges: the A770 does poorly in tests that are sensitive to memory performance but is otherwise computationally powerful.

That's a surprising result given the on-paper specs of the A770M, which the A770 presumably shares: 16 GB of GDDR6 clocked at 17.5 Gbps on a 256-bit bus. That's not a weak memory subsystem, so perhaps this is a quirk of OpenCL, or perhaps the Alchemist architecture has a memory bottleneck.
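For context, peak memory bandwidth follows directly from the per-pin data rate and the bus width. A back-of-the-envelope calculation, assuming the desktop A770 uses the same memory configuration listed for the A770M:

$$
\text{Bandwidth} = \frac{17.5\ \text{Gb/s per pin} \times 256\ \text{pins}}{8\ \text{bits/byte}} = \frac{4480\ \text{Gb/s}}{8} = 560\ \text{GB/s}
$$

That is theoretical peak bandwidth only; it is noticeably more than the RTX 3060's 360 GB/s, which is why weak results in the memory-sensitive tests are unexpected.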

Realistically, there wouldn't be significant ramifications if it does. At worst, it might make the architecture better suited to lower resolutions than higher ones and shorten its useful life. Nevertheless, it's interesting to see what sets the Alchemist architecture apart from Ampere and Navi.

In terms of gaming performance, the OpenCL scores don't tell us much. The 6600 XT, for instance, scores more than 10,000 points lower than the 3060 in OpenCL, yet in our review we found the 6600 XT was faster in most games at 1080p and 1440p. Leaked benchmarks from before we knew the A770's name put it ahead of the 3060 and in the realm of the 3070 Ti.

https://www.techspot.com/news/94232-intel-arc-a770-desktop-gpu-debuts-geekbench-database.html