Something to look forward to: Intel's new graphics card is starting to feel a little more real now that designs have been presented on stage. Now it is a waiting game to find out how well it will perform against rivals Nvidia and AMD.

Even though Intel is still nearly a year away from launching its own discrete graphics card, the company is already beginning to show off where it is headed. In a GDC 2019 keynote, Intel shared some early designs of its GPU, which is slated for a 2020 release.

Taking design cues directly from Intel's own Optane SSDs, the designs feature sharp lines and fairly minimal styling. From what we can tell, Intel may be avoiding the obnoxious RGB flair found on some gaming-oriented cards.

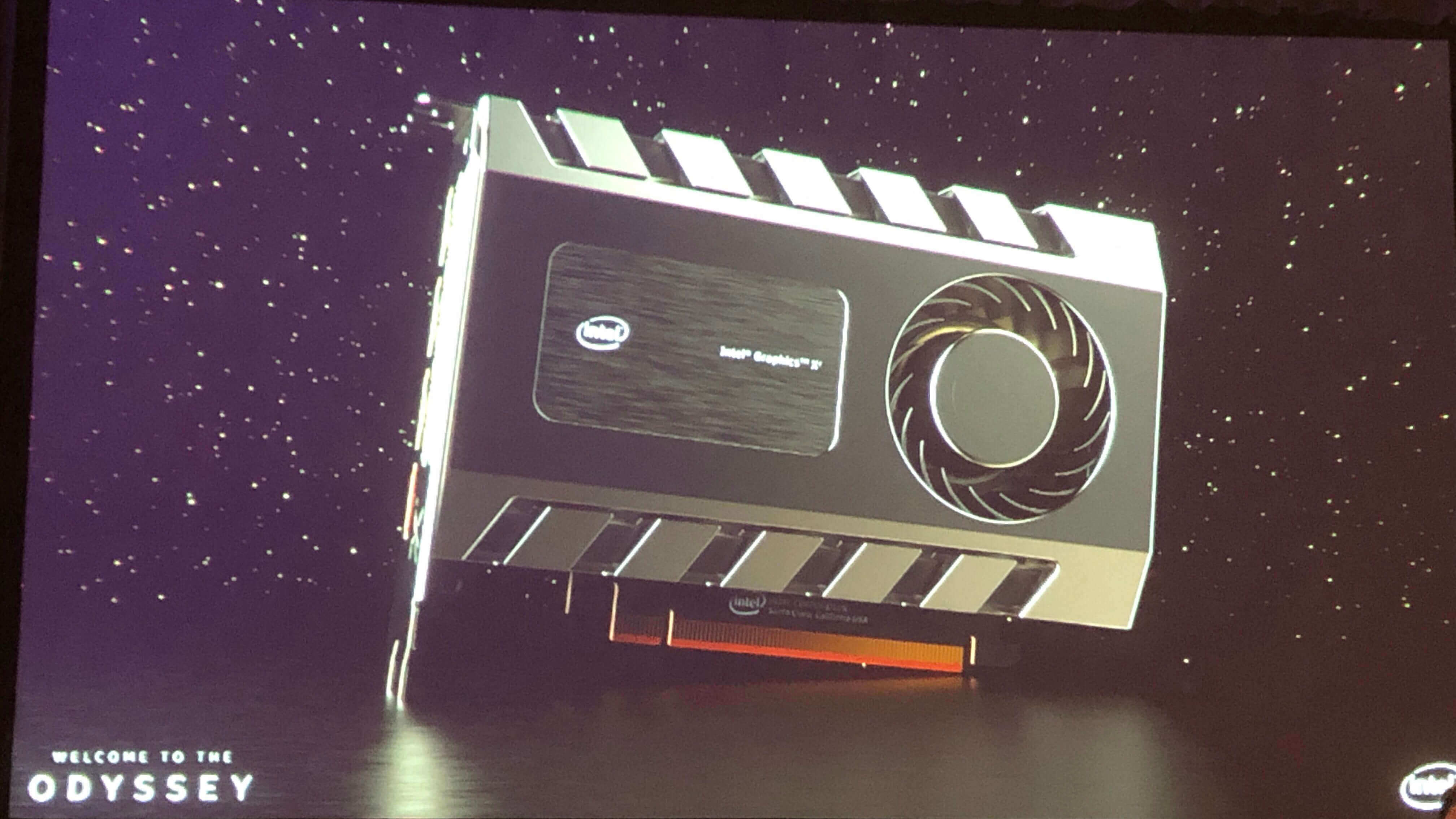

The first design uses the typical blower fan found in many other reference designs. Compared with the plain blue rectangular cards Intel has shown in the past, this first iteration appears much the same, but with a housing that would look better through a glass side panel.

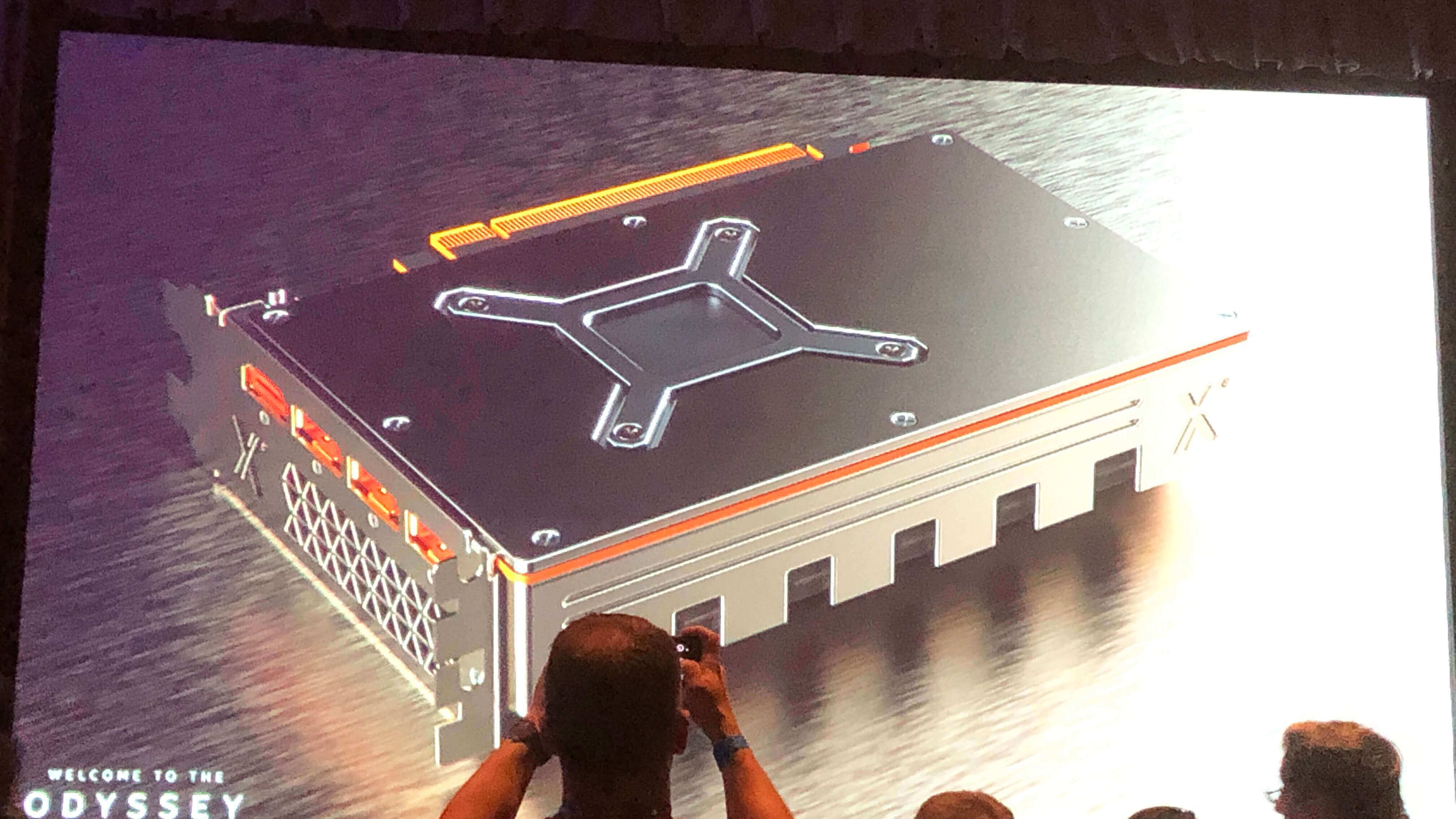

Turning to the back of the card, a full backplate is present. You can also catch a glimpse of what appears to be three full-size DisplayPort outputs and one HDMI port, although this could change before the final release. It is also worth noting that the HDMI and DisplayPort versions supported will matter more than the number of ports.

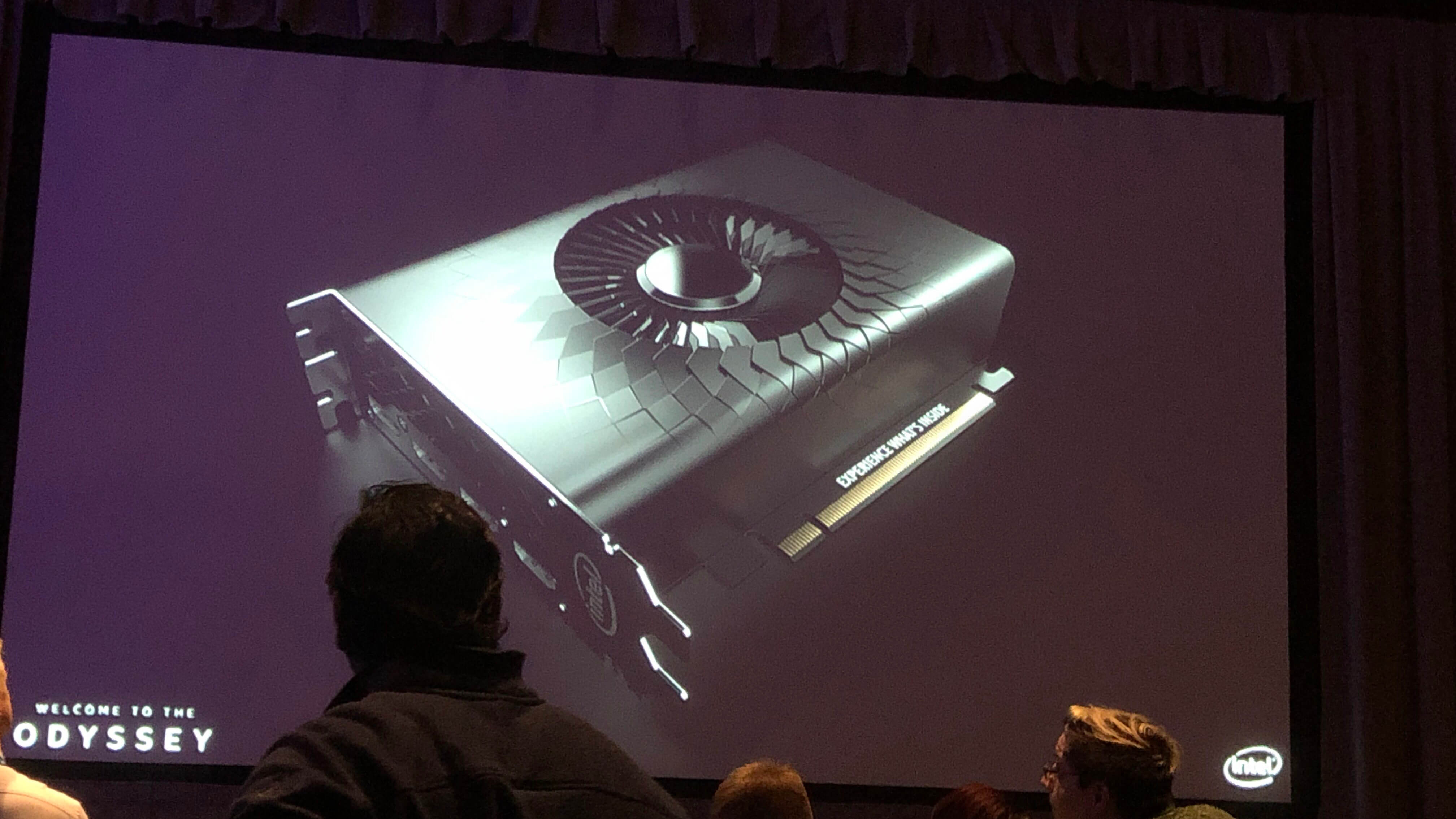

Lastly, we get a look at Intel's most intricate design. Quadrilateral scales surround a cooling fan before smoothing out into a semi-matte black finish, creating the illusion of a fan breaking out of the housing.

Unfortunately, full specifications for Intel's upcoming graphics card are not yet available, and real-world performance remains unknown for now. As the year goes on, there is a good chance Intel will share some numbers, given how eager the company is to make everyone aware of its major new product.

Image Credits: Nick Pino, TechRadar

https://www.techspot.com/news/79298-intel-shows-off-designs-discrete-graphics-card-due.html