Forward-looking: The Intel marketing machine has produced yet another video about the architecture and features of the Arc series, this time with a focus on ray tracing. If Intel's claims are valid, the Arc 7 A770 could have outstanding ray-tracing performance for its tier.

The video is staged as an interview, with Intel marketer Ryan Shrout posing the questions to infamous engineer Tom Petersen, best known for his 15 years as an Nvidia frontman. Now he's peddling the benefits of Intel's ray tracing hardware acceleration (go figure). He explains it well in the first half of the video, so give it a watch if you're interested.

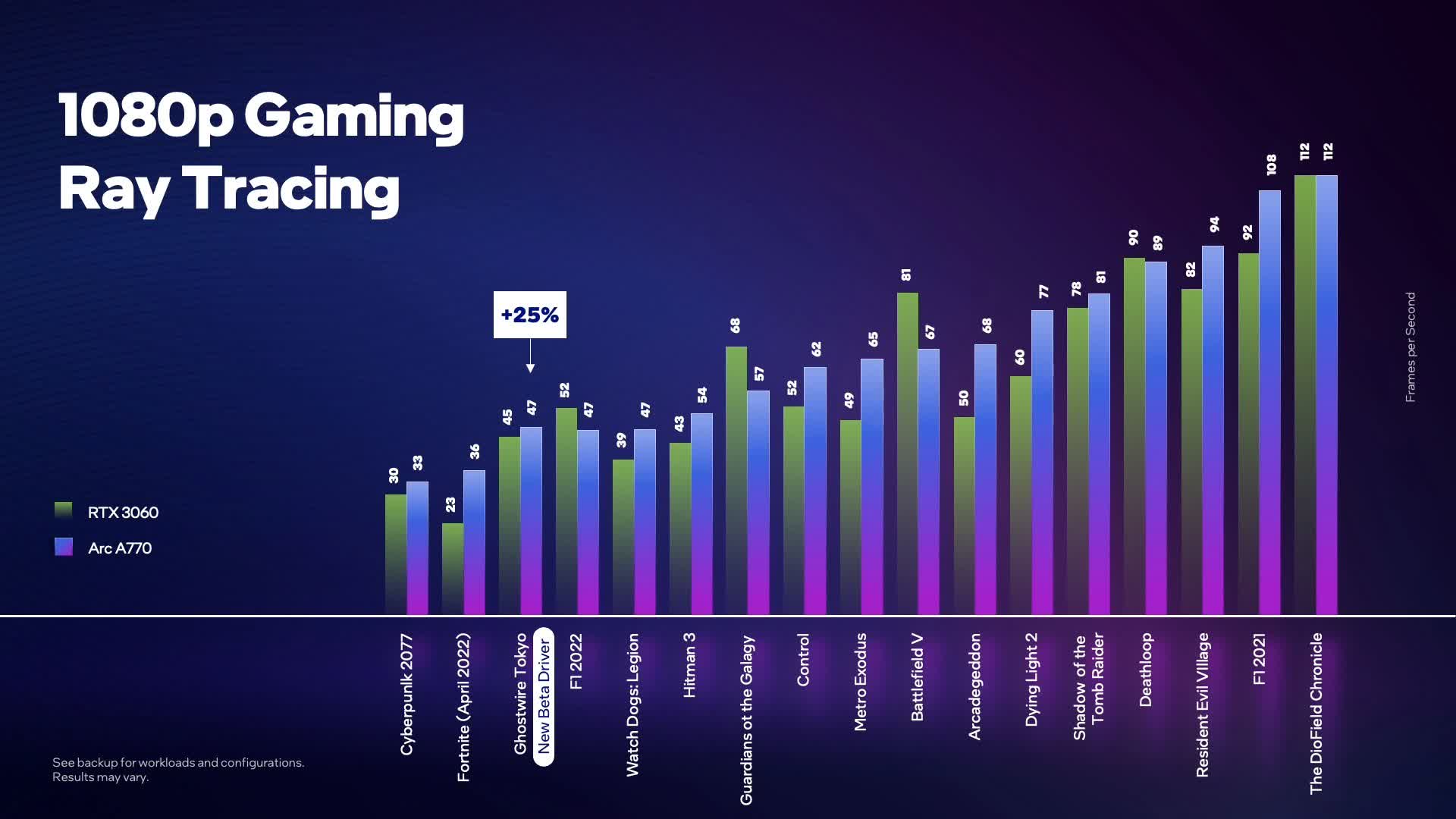

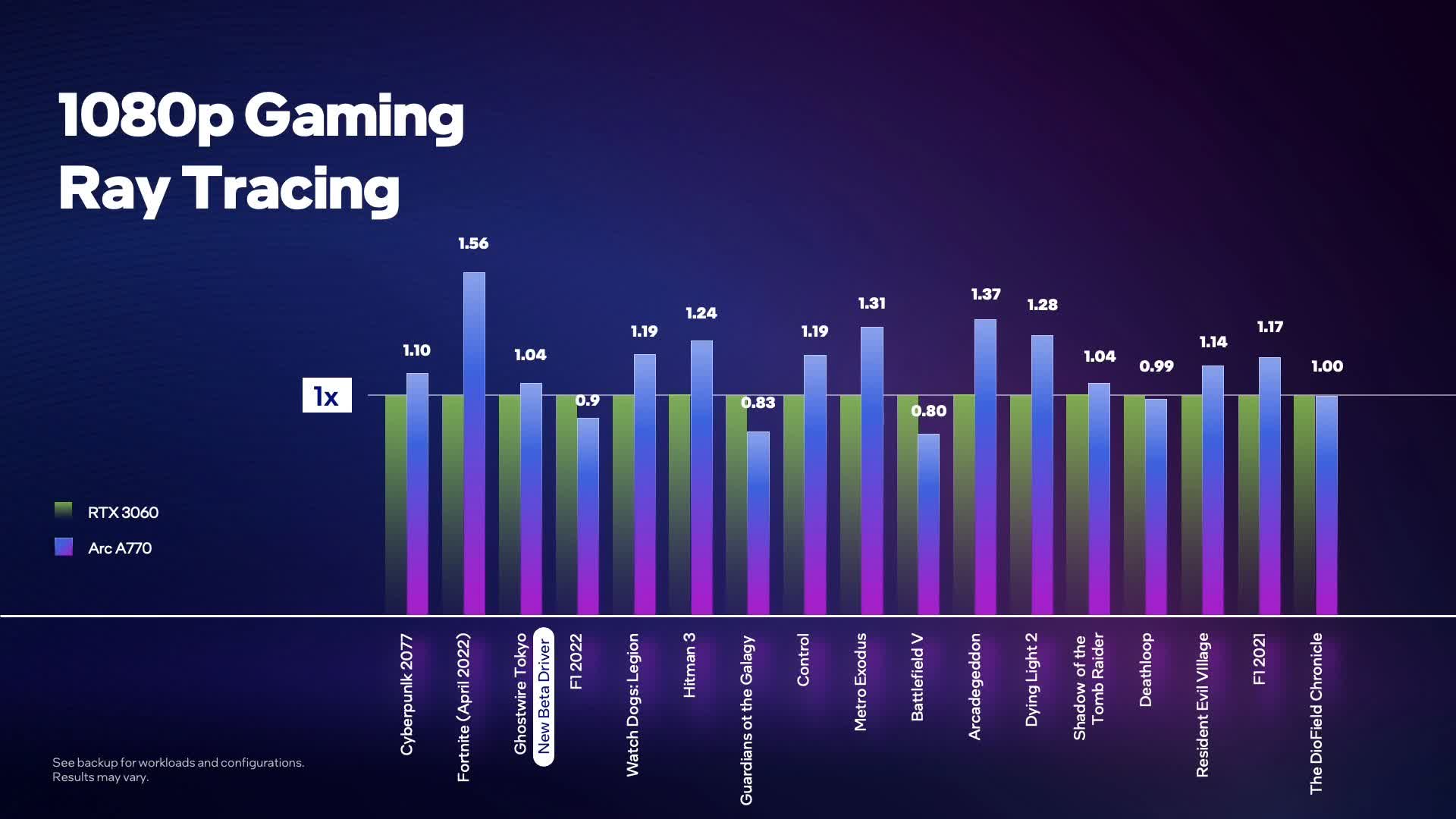

In the second half, Petersen graphs the frame rates of the A770 and 3060 in 17 games with ray tracing enabled. Seeing the results, he says, "we win most — we win, really." But to give Intel the benefit of the doubt, I'll also quote the claim it makes in the fine print: the "A770 delivers competitive ray tracing performance against the RTX 3060 at 1080p across a sample of popular games."

Competitive or the winner? Have a look at Intel's graph and decide for yourself.

Here's where I would warn you that Intel could've cherry-picked these games, except that there probably aren't any other games that support ray tracing on Arc GPUs. In any case, don't place too much faith in Intel's numbers.

On average, the Arc A770 is almost 13% faster than the RTX 3060. Intel benefits from some big swings in its favor, like a 56% lead in Fortnite and a 31% lead in Metro Exodus. However, the A770 falls considerably behind in Battlefield V and "Guardians of the Galagy [sic]."
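For readers who want to sanity-check an "average lead" figure like the one above, here is a minimal sketch of how such a number can be derived from per-game frame rates. Intel doesn't say how it averaged its results, so the sketch shows both an arithmetic and a geometric mean of the per-game ratios. The function names and the frame rates ("Game A" and so on) are placeholders invented for illustration; they are not Intel's data.

```python
from statistics import mean, geometric_mean


def relative_leads(fps_a, fps_b):
    """Per-game percentage lead of card A over card B (negative means A is slower)."""
    return {game: (fps_a[game] / fps_b[game] - 1) * 100 for game in fps_a}


def average_lead(fps_a, fps_b, method="arithmetic"):
    """Average percentage lead of card A over card B across all games.

    method="arithmetic" averages the per-game ratios directly;
    method="geometric" uses the geometric mean, which is less swayed by outliers.
    """
    ratios = [fps_a[game] / fps_b[game] for game in fps_a]
    if method == "geometric":
        return (geometric_mean(ratios) - 1) * 100
    return (mean(ratios) - 1) * 100


# Placeholder frame rates for illustration only, NOT Intel's published figures.
a770 = {"Game A": 78.0, "Game B": 50.0, "Game C": 45.0}
rtx_3060 = {"Game A": 50.0, "Game B": 50.0, "Game C": 50.0}

if __name__ == "__main__":
    for game, lead in relative_leads(a770, rtx_3060).items():
        print(f"{game}: {lead:+.1f}%")
    print(f"Arithmetic mean lead: {average_lead(a770, rtx_3060):.1f}%")
    print(f"Geometric mean lead: {average_lead(a770, rtx_3060, 'geometric'):.1f}%")
```

With a few big swings in the mix, the arithmetic mean comes out higher than the geometric mean, which is worth keeping in mind when one or two titles dominate a vendor's average.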

Intel says it conducted benchmarks with the games' settings maxed out, with the justifiable exception of motion blur. With those settings and at 1080p, every game was playable on the A770, bar maybe Cyberpunk 2077 and Fortnite, but for the most part, you'd want to dial the settings back to medium.

If you look closely, you'll see that Ghostwire Tokyo was benchmarked with a beta driver that (allegedly) improves performance by 25%. Intel could've chosen not to add that label, but it did, presumably so it could reiterate its plan to enhance the Arc series' performance with each driver update.

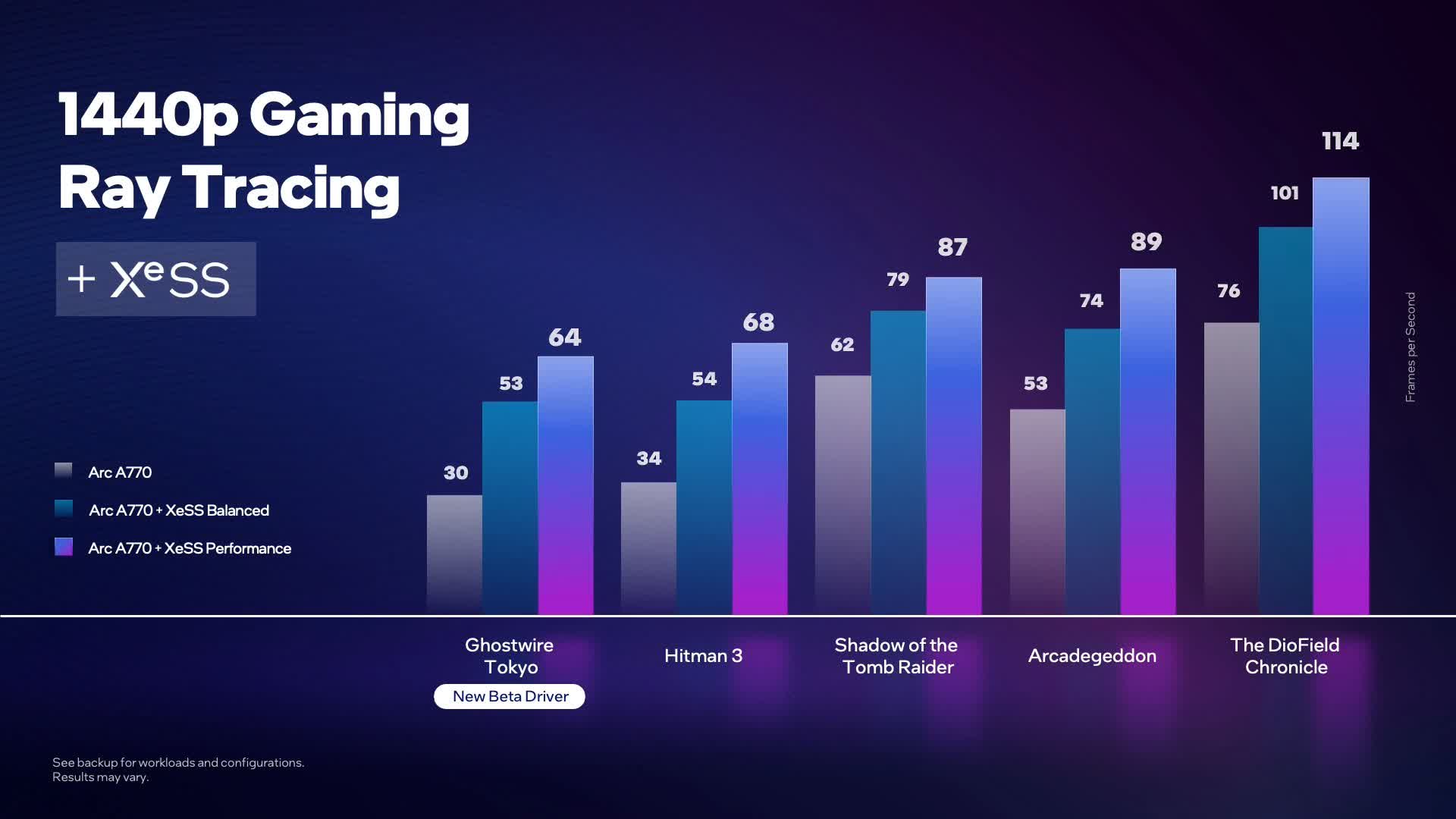

At the end of the interview, Shrout and Petersen talk briefly about using XeSS in conjunction with ray tracing. Nvidia and AMD each suggest using their respective super sampling technologies, DLSS and FSR, when their GPUs don't have the horsepower to push native resolution with ray tracing enabled, and it's the same deal here. The A770 does get some remarkable gains with XeSS enabled, but we'll have to wait and see what the image quality is like.

Intel still hasn't shared a release date for the A770 or the rest of the series, but promises that one is getting close. The A770 is expected to cost less than $400 and might be competitive with the RTX 3060 if it lands near that card's MSRP of $330.

https://www.techspot.com/news/95861-intel-shows-arc-a770-beating-rtx-3060-ray.html