My 4770K Haswell can play any game made in the past 5 years, and I definitely do not need a 6-core CPU to do so.

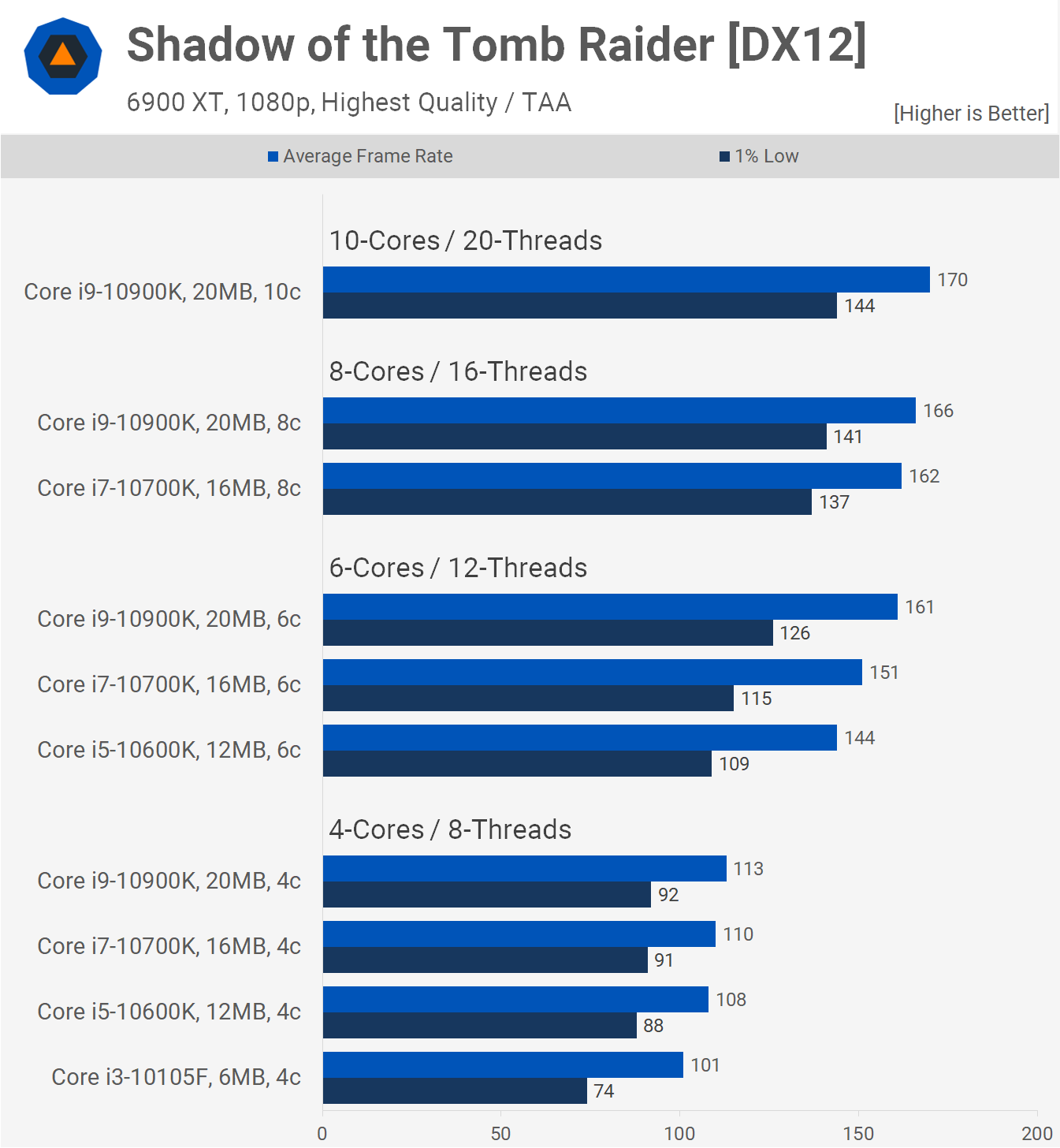

Who games at 150 FPS anyway? Only a small minority does. I don't even have a monitor capable of 100+ FPS, nor do I want to buy one.

Linus Sebastian, citing the Steam Survey, underlined that 43% of the Steam user base is still using 4-core CPUs. It is obviously absurd to think that game developers would launch games playable only on 6-core CPUs and up, ignoring a huge chunk of the user base who are still on 4C/8T.

As Linus says in the video, the OP's tests and the machines used concern such a minuscule portion of the global player base as to be, in essence, 1% tests for 1% machines.

If you consider for a moment that the GTX 1060, 1050 Ti and similarly capable GPUs still top the Steam Survey lists, you will understand why 150+ FPS gaming concerns, for the most part, one-percenters.