Why it matters: Securing enough manufacturing capacity is going to be critical to the success of Nvidia's RTX 4000 GPUs, which are expected to land as soon as this summer. Gamers widely regarded the RTX 3000 series as a paper launch, but the company is said to have paid through the nose to make sure that won't be the case for its upcoming GPUs.

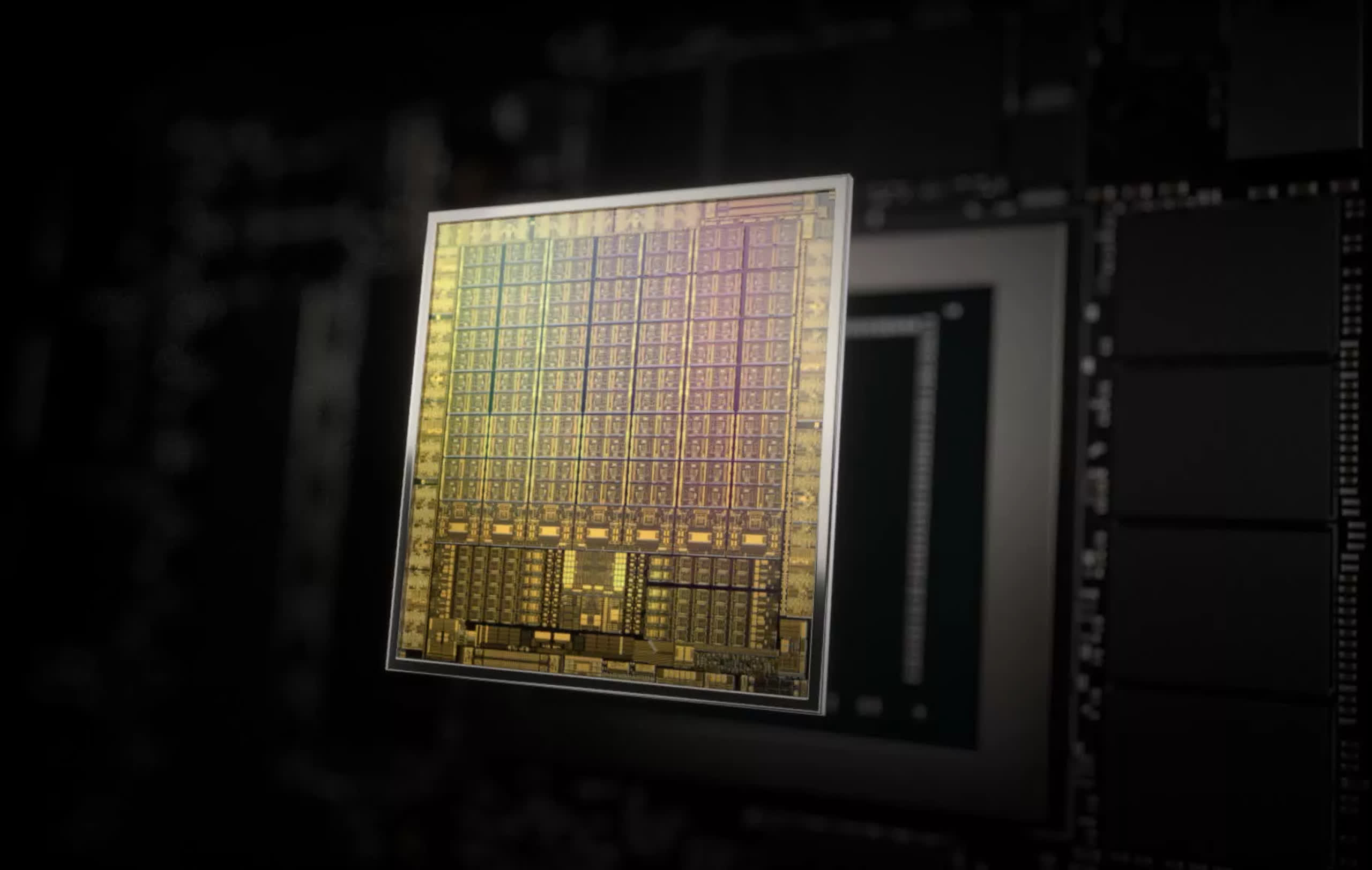

Back in November 2021, the rumor mill was abuzz with hints that Nvidia was planning to use TSMC's 5nm process node for its upcoming GeForce RTX 4000 series (Ada Lovelace) GPUs. These are widely expected to be significantly faster and more power-hungry than the current Ampere lineup, but a much bigger issue for the Jensen Huang-led company is securing enough manufacturing capacity.

According to a report from Hardware Times, Nvidia is prepared to pay dearly to meet growing demand for increasingly powerful graphics cards. Specifically, it may be offering up to $10 billion to TSMC for a significant chunk of its 5nm manufacturing capacity. Other companies tapping TSMC's N5 process node include Bitmain, AMD, and Apple.

Nvidia used Samsung's 8nm process node to make its Ampere GPUs, which are notorious for being power hungry. The company may also have struggled with relatively poor production yields on its RTX 3000 series GPUs, so moving to TSMC's more advanced process node could help in that regard. However, TSMC's 5nm wafers are expected to cost quite a bit more, which could translate into more expensive graphics cards.

With Intel's Arc (Alchemist) GPUs on the horizon, it makes perfect sense for Nvidia to ensure availability issues won't plague the launch of its upcoming RTX 4000 series GPUs. The tech supply chain is on the road to recovery, but most industry leaders believe the ongoing shortage of chips and passive components will persist into 2023. When it comes to graphics cards, Nvidia and its add-in board (AIB) partners are more optimistic, but we'll have to wait and see.

https://www.techspot.com/news/93490-nvidia-reportedly-spent-10-billion-chunk-tsmc-5nm.html