Why it matters: As miners and gamers fight to get their hands on the latest graphics cards, Nvidia is taking on the task of trying to please both crowds. By making mining-specific silicon and nerfing the upcoming RTX 3060 graphics card's ability to mine efficiently, the company hopes to quell criticism that it caters only to the crypto crowd.

The crypto craze is having a big impact on the availability of graphics cards, to the point where miners are snapping up laptops equipped with Nvidia's RTX Ampere GPUs to support their ever-growing operations. Some are even actively trying to tick off the gaming community by bragging about having things like mobile mining farms in the trunk of their expensive sports cars, RGB and all.

GPU manufacturers AMD and Nvidia are usually quick to respond to this type of demand, but the situation this time around is vastly different from what we saw a few years ago. Emerging trends like working and studying from home, as well as people looking to entertain themselves during lockdowns, have put additional pressure on the tech industry's supply chain, leading to a lot of scalping and higher prices for computer hardware.

Gamers are understandably upset about it, but cryptocurrency mining has helped some businesses that have been affected by the pandemic to stay afloat. On the other hand, Nvidia and AMD made huge profits riding the mining waves, so they haven't felt the need to do much about it. And board partners like Zotac celebrating the issue only adds insult to injury.

Nvidia’s new Cryptocurrency Mining Processor

That changes today, as Nvidia announced it will take two steps to ensure that both miners and gamers can get what they want, or so it claims. The company will essentially nerf the upcoming GeForce RTX 3060 in terms of hash rate for popular mining algorithms, in the hopes that it will become less attractive for miners and in turn put more cards "in the hands of gamers."

According to Nvidia, drivers will be able to detect when, say, an Ethereum mining algorithm is running and throttle the card to about 50 percent of its normal mining efficiency. These performance restrictions will be applied to both Windows and Linux drivers, which means that even switching to a custom Linux distribution like NiceHash OS or Hive OS won't restore full compute capabilities for mining purposes.

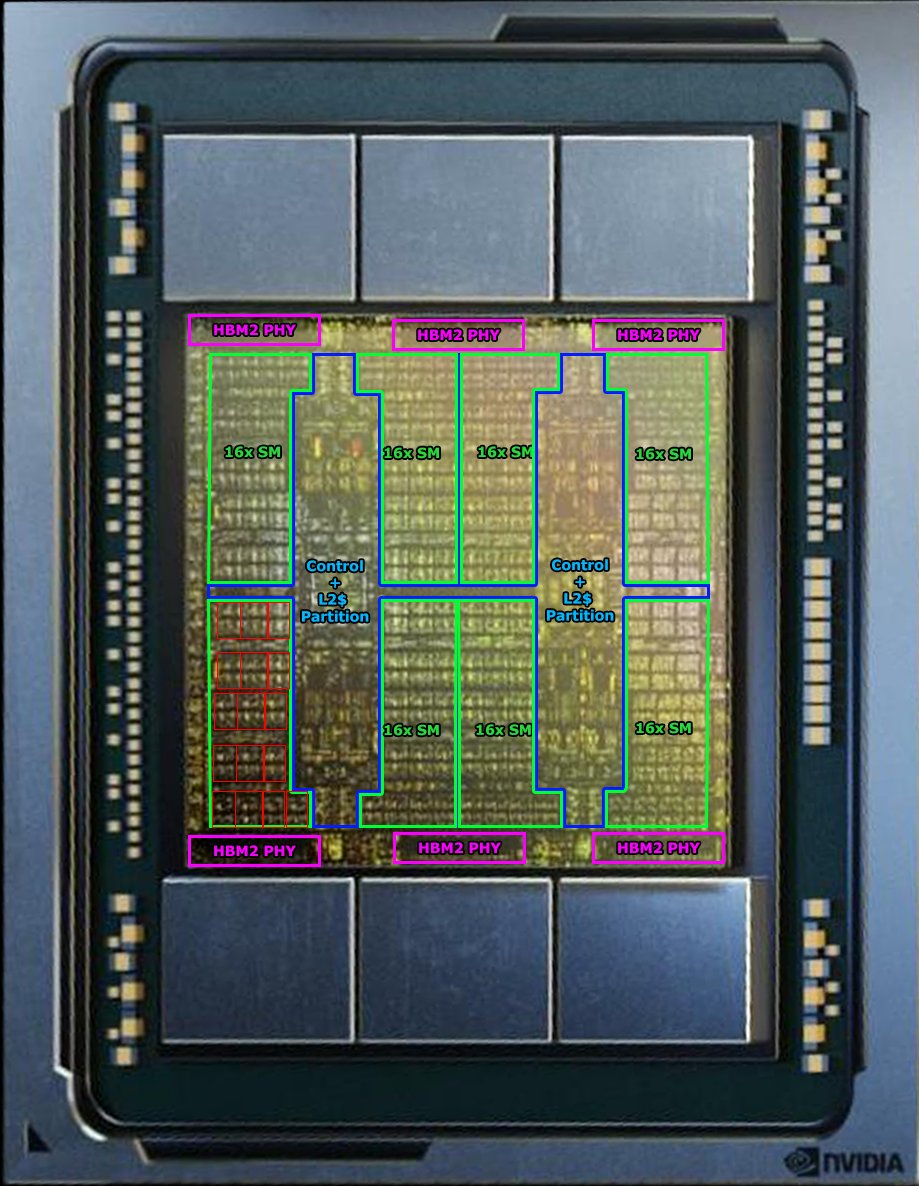

The second announcement is the Nvidia Cryptocurrency Mining Processor, which is not an entirely new idea. Some of Nvidia's board partners have sold mining-specific graphics cards in the past, and those were essentially the same as their consumer counterparts, save for the absence of video outputs and the use of a different driver.

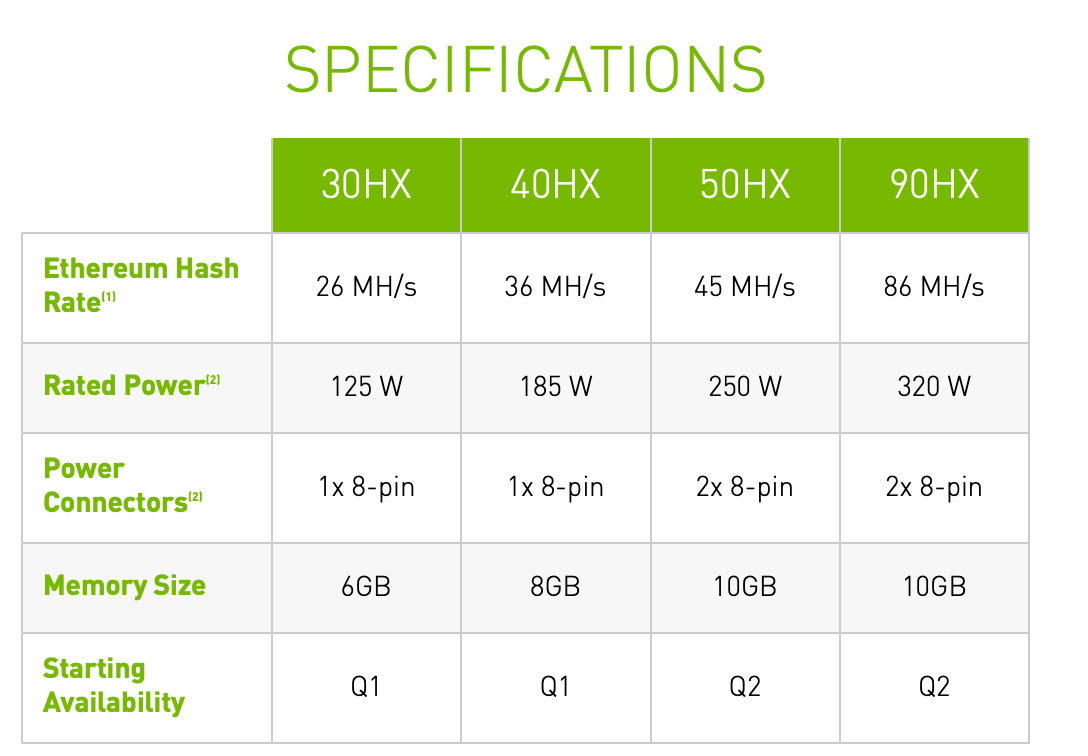

Nvidia says CMP HX products will feature silicon that has no video outputs and is optimized for mining efficiency. The cards will be available from AIB partners in four configurations ranging from 6 GB to 10 GB of VRAM and can achieve up to 86 MH per second in Ethereum mining algorithms. Power consumption ranges from 120 watts for 26 MH per second to 320 watts for 86 MH per second. Of course, miners are able to get significantly better numbers with tuning of individual cards, but that takes additional time and effort.
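To put the quoted figures in perspective, the short Python sketch below works out mining efficiency in MH/s per watt for the two ends of the CMP HX range. It uses only the stock numbers cited above; the labels `low_end` and `high_end` and the helper `mh_per_watt` are illustrative names, not Nvidia product names or part of any Nvidia tool.

```python
# Endpoints of the CMP HX range as quoted in the article:
# (hash rate in MH/s, power draw in watts).
# "low_end" and "high_end" are placeholder labels, not product names.
cmp_hx_range = {
    "low_end": (26, 120),
    "high_end": (86, 320),
}


def mh_per_watt(hash_rate_mhs, power_w):
    """Return mining efficiency in MH/s per watt."""
    return hash_rate_mhs / power_w


efficiency = {name: mh_per_watt(*spec) for name, spec in cmp_hx_range.items()}

for name, value in efficiency.items():
    print(f"{name}: {value:.3f} MH/s per watt")
# low_end: 0.217 MH/s per watt
# high_end: 0.269 MH/s per watt
```

On these stock figures, the top configuration delivers roughly 24 percent more hash rate per watt than the entry-level one, though individual tuning would shift both numbers.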

At this point, there is no way to predict if Nvidia's strategy will be advantageous for consumers.

The RTX 3060 is likely to be Nvidia's top-selling RTX 3000 series graphics card, so limiting its mining appeal is a welcome move, even if it's a software lock that can be circumvented in time. It doesn't, however, solve the problem for people interested in buying higher-end Ampere graphics cards.

Releasing mining-specific cards will likely reduce demand for consumer versions, but it will also produce a large amount of e-waste once mining is no longer profitable on them. The real question is whether miners will bother buying them when gaming graphics cards can be resold to recoup some of the investment once they can no longer turn a profit.

https://www.techspot.com/news/88675-nvidia-rtx-3060-wont-good-ethereum-mining.html