In context: Despite reservations from some groups that want developers to tap the brakes on generative AI, the technology continues to improve at a breakneck pace. This advancement is arguably most evident in image generation, which has had time to mature and move past some of the early controversies that large language models are facing now.

Recently, Adobe released a Photoshop feature called "Generative Fill," which lets content creators expand an image beyond its initial borders. In a nutshell, the tool is part of Firefly, Adobe's image-synthesis model. Users can extend the edges of a picture in any direction, and Generative Fill will produce cohesive content with or without contextual prompts.

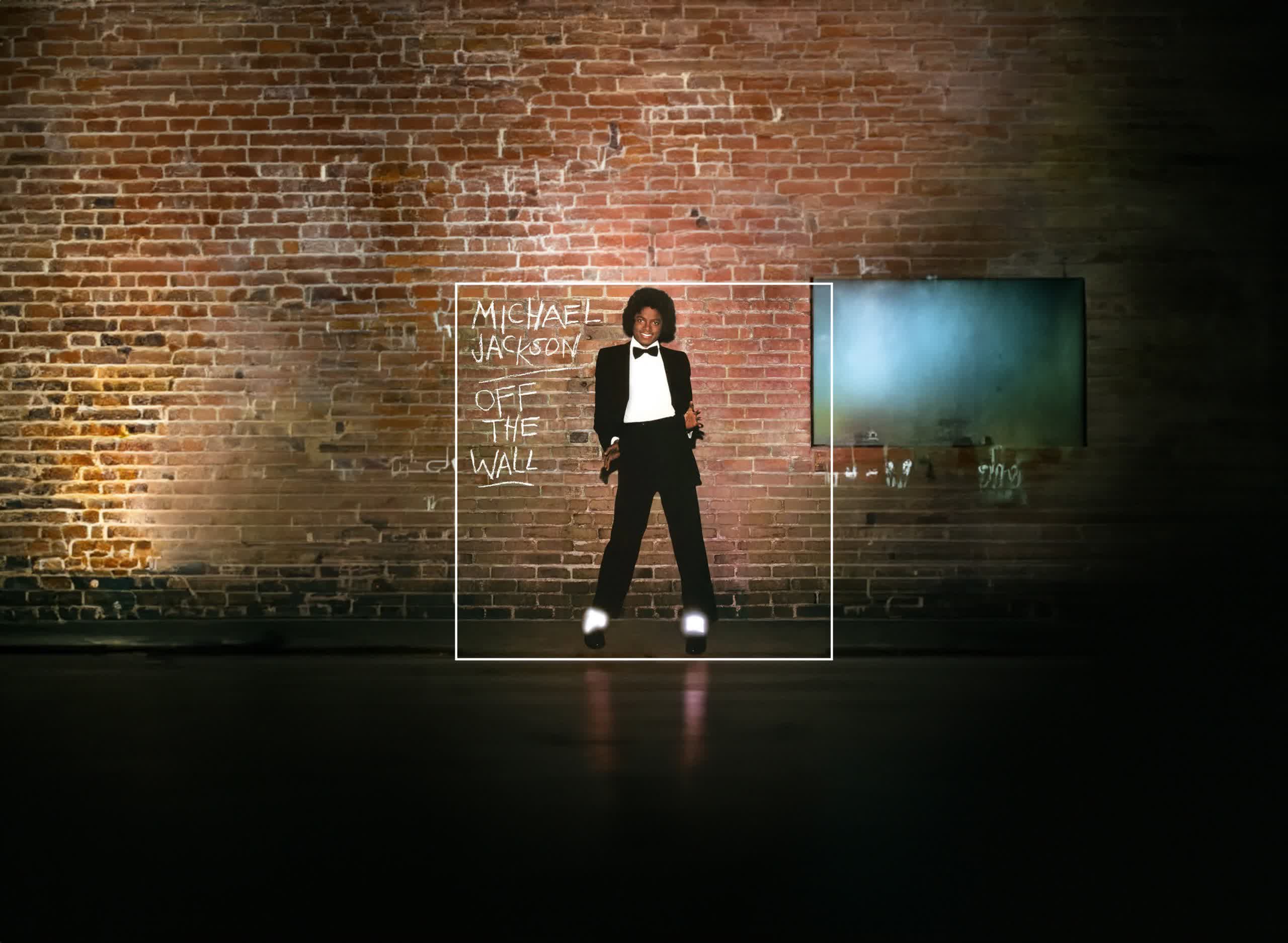

People have already started going wild with the feature on social media, with many examples going viral. One impressive set of images is from a Twitter user going by "Dobrokotov" (AI Molodca on Telegram). The self-proclaimed Russian multimedia artist used Generative Fill to expand some iconic album covers. His rendition of Nirvana's Nevermind (above) racked up over 2.3 million views in just a few days.

While the Nirvana album looked like a quick job – simply expanding the borders and then letting Generative Fill do its thing – some of the other covers took some prompting and trying out a few generated elements before landing on something stunning. Dobrokotov's take on the Abbey Road album is a prime example (below).

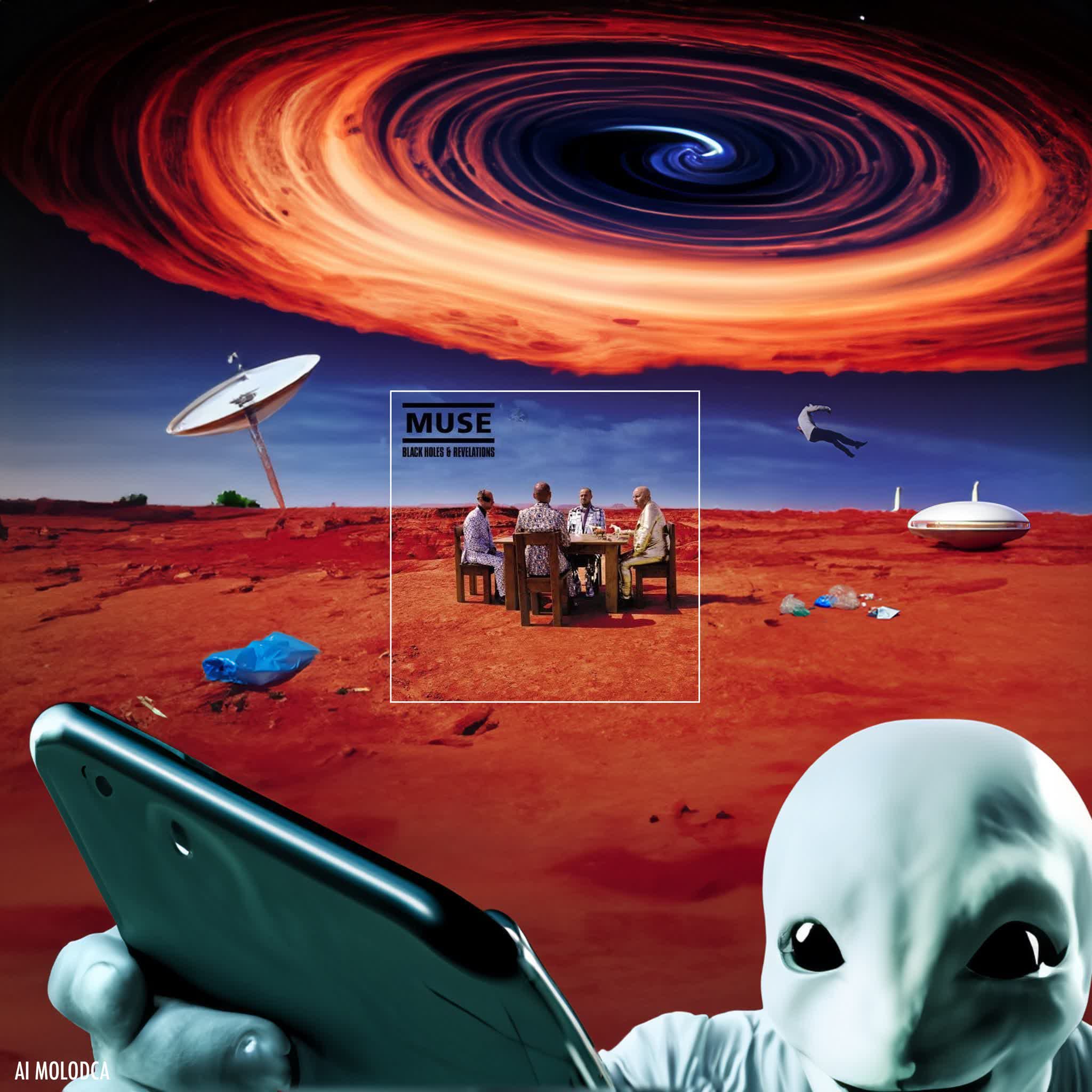

Although the Beatles released Yellow Submarine three years before Abbey Road, Dobrokotov added a yellow submarine off to the side as a neat little tribute. Another effect he likely prompted from the AI is the surrealistic space theme across the top of the image. Additionally, the super-extended crosswalk stripes and the fish-eye effect to the sides make the image fit right in with the 1960s era of psychedelic album art. Speaking of which: Dobrokotov's extension of Muse's Black Holes & Revelations is pretty trippy too (below).

While Dobrokotov didn't share his process for creating his stunning expansions, others have. As you can see in the video below, getting the final image to look good usually takes more than stretching out the borders and calling it a day. Creating a beautiful, eye-catching piece takes a good understanding of image composition, a bit of trial and error, and some final touch-up work. Generative image AI has come a long way but still struggles with a few things, like eyes and fingers.

[Embedded tweet about Photoshop's "Generative Fill"] pic.twitter.com/Z24quNgDVJ

(@shota7180) May 25, 2023

However, with some creative editing and post-generation touch-ups, artists can mask these flaws well enough to pass the work off as fully human-created. The industry has already voiced this concern about AI image generation, and it's still being debated on several levels. In the meantime, it's fun to see what people can come up with given a little (or a lot) of aid from a machine (below, image credit: fangming.li).

https://www.techspot.com/news/98913-photoshop-generative-fill-tool-has-users-flooding-twitter.html