In brief: Power efficiency is not the first thing that comes to mind when one thinks of the RTX 3090 Ti, yet undervolting the card can apparently make it less power-hungry than some of Nvidia's and AMD's best cards while keeping a performance advantage.

Igor's Lab tested an RTX 3090 Ti, normally rated for 450W, after cutting it down to 300W. Igor did this by lowering the power limit to 300W in the overclocking tool MSI Afterburner and adjusting the voltage/frequency (V/F) curve. He copied the curve of the RTX A6000, a workstation card that also has a 300W maximum power draw and uses the same GA102 die, though with GDDR6 rather than GDDR6X memory.

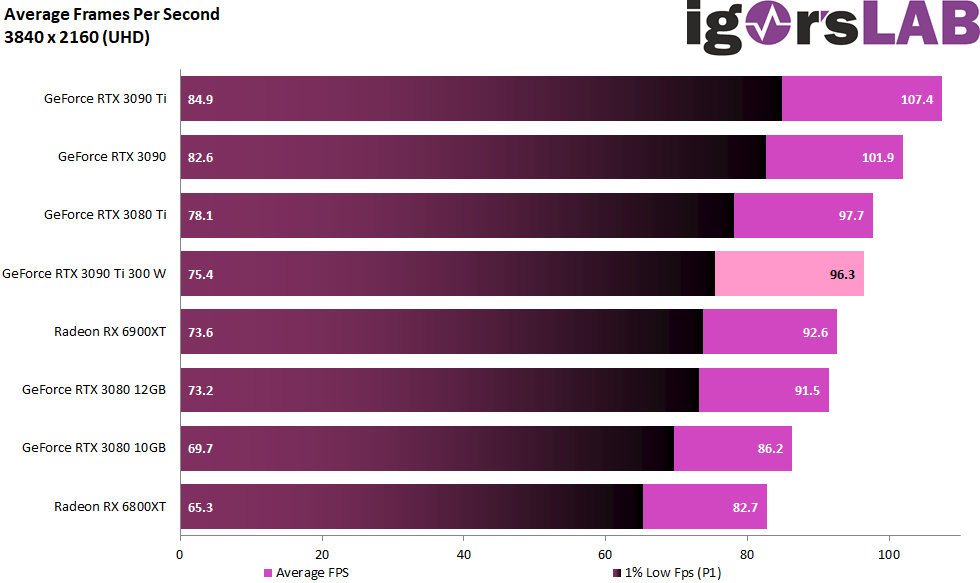

Igor tested the MSI Suprim X RTX 3090 Ti against MSI-branded RTX 3080 10GB and 12GB, RTX 3080 Ti, and RTX 3090 cards. For AMD, he used MSI's Gaming X versions of the Radeon RX 6800 XT and RX 6900 XT.

In the 4K benchmarks, the stock RTX 3090 Ti manages 107.4 fps, dropping 11.1 fps (about 10 percent) to 96.3 fps at 300W.

Image credit: Igor's Lab

The power-limited RTX 3090 Ti peaked at 314W in the tests, while the RTX 3080 Ti reached 409W, yet Igor concludes that the two cards deliver roughly equal performance. The undervolted card also beats the Radeon RX 6900 XT (359W), RTX 3080 12GB (393W), RTX 3080 10GB (351W), and Radeon RX 6800 XT (319W).

Of course, this is just a demonstration of what's possible with the RTX 3090 Ti. The card costs around $2,000, while the RTX 3080 Ti goes for roughly $1,200 or more, so it would take a very long time and a lot of use to recoup the $800 difference through power savings. Still, it's an interesting experiment.
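To put the payback point in rough numbers, here is a minimal Python sketch. It assumes both cards draw their peak figures from the tests (314W and 409W) for every hour of use, and it assumes an electricity price of $0.15/kWh. Both assumptions are ours, not Igor's, and real savings would likely be smaller because cards rarely sit at peak draw.

```python
def payback_hours(price_gap_usd: float, watts_saved: float, usd_per_kwh: float) -> float:
    """Hours of use needed for energy savings to cover a purchase-price gap."""
    kw_saved = watts_saved / 1000.0  # convert watts to kilowatts
    savings_per_hour = kw_saved * usd_per_kwh  # dollars saved per hour of use
    return price_gap_usd / savings_per_hour


# Price gap and peak power figures come from the article; the $0.15/kWh
# electricity price is a hypothetical value chosen for illustration.
hours = payback_hours(2000 - 1200, 409 - 314, 0.15)
print(f"{hours:,.0f} hours")  # prints about 56,140 hours
print(f"{hours / (4 * 365):.1f} years at 4 hours of gaming per day")
```

Under these assumptions the $800 gap takes tens of thousands of hours to recover; a higher electricity price shortens that, but not by enough to change the conclusion.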

Make sure to check out our review of the RTX 3090 Ti, which we called fast and dumb.

https://www.techspot.com/news/94153-rtx-3090-ti-set-300w-rtx-3080-ti.html