ChatRTX combines retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration so you can query a custom chatbot and quickly get contextually relevant answers. And because it all runs locally on your Windows RTX PC or workstation, results are fast and your data stays on the device.

Features

Chat With Your Files

ChatRTX supports various file formats, including .txt, .pdf, .doc/.docx, and .xml. Simply point the application at the folder containing your files and it'll load them into the library in a matter of seconds.

Chat for Developers

The ChatRTX tech demo is built from the TensorRT-LLM RAG developer reference project available from GitHub. Developers can use that reference to develop and deploy their own RAG-based applications for RTX, accelerated by TensorRT-LLM.

Ask Me Anything

ChatRTX uses retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM software and NVIDIA RTX acceleration to bring generative AI capabilities to local, GeForce-powered Windows PCs. Users can quickly and easily connect local files on a PC as a dataset to an open-source large language model like Mistral or Llama 2, enabling queries for quick, contextually relevant answers.

Rather than searching through notes or saved content, users can simply type queries. For example, one could ask, "What was the restaurant my partner recommended while in Las Vegas?" and ChatRTX will scan local files the user points it to and provide the answer with context.
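The retrieval step behind a query like this can be sketched in a few lines. This is a toy illustration of the general RAG pattern, not ChatRTX's actual implementation: it scores text chunks against the question with a simple word-count cosine similarity (real systems typically use learned embeddings), then builds a prompt that an LLM would answer from. The function names, the sample notes, and the restaurant name are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, chunks, top_k=2):
    """Return the top_k chunks most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True
    )
    return ranked[:top_k]


def build_prompt(query, context_chunks):
    """Combine retrieved context and the question into one LLM prompt."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical sample notes standing in for the user's local files.
notes = [
    "Trip notes: my partner recommended the restaurant Example Bistro in Las Vegas.",
    "Grocery list: eggs, milk, coffee.",
    "Meeting notes: ship the Q3 report by Friday.",
]
question = "What was the restaurant my partner recommended while in Las Vegas?"
top_chunks = retrieve(question, notes, top_k=1)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

The prompt, rather than the whole file collection, is what the language model sees, which is why answers stay grounded in the user's own content.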

The tool supports various file formats, including .txt, .pdf, .doc/.docx and .xml. Point the application at the folder containing these files, and the tool will load them into its library in just seconds.
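As a rough sketch of what pointing an application at a folder could involve, the snippet below collects files whose extensions match the formats listed above. It is an assumption-laden illustration, not ChatRTX code: the function name is hypothetical, and whether ChatRTX searches subfolders or matches extensions case-insensitively is not stated in the source.

```python
import tempfile
from pathlib import Path

# Extensions taken from the formats listed above.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder):
    """Recursively list files under folder that have a supported extension.

    Recursion and case-insensitive matching are assumptions of this sketch.
    """
    root = Path(folder)
    return sorted(
        p
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )


# Demo on a throwaway folder so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("notes.txt", "report.PDF", "photo.jpg"):
        (Path(tmp) / name).write_text("sample")
    found = [p.name for p in find_supported_files(tmp)]
    print(found)
```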

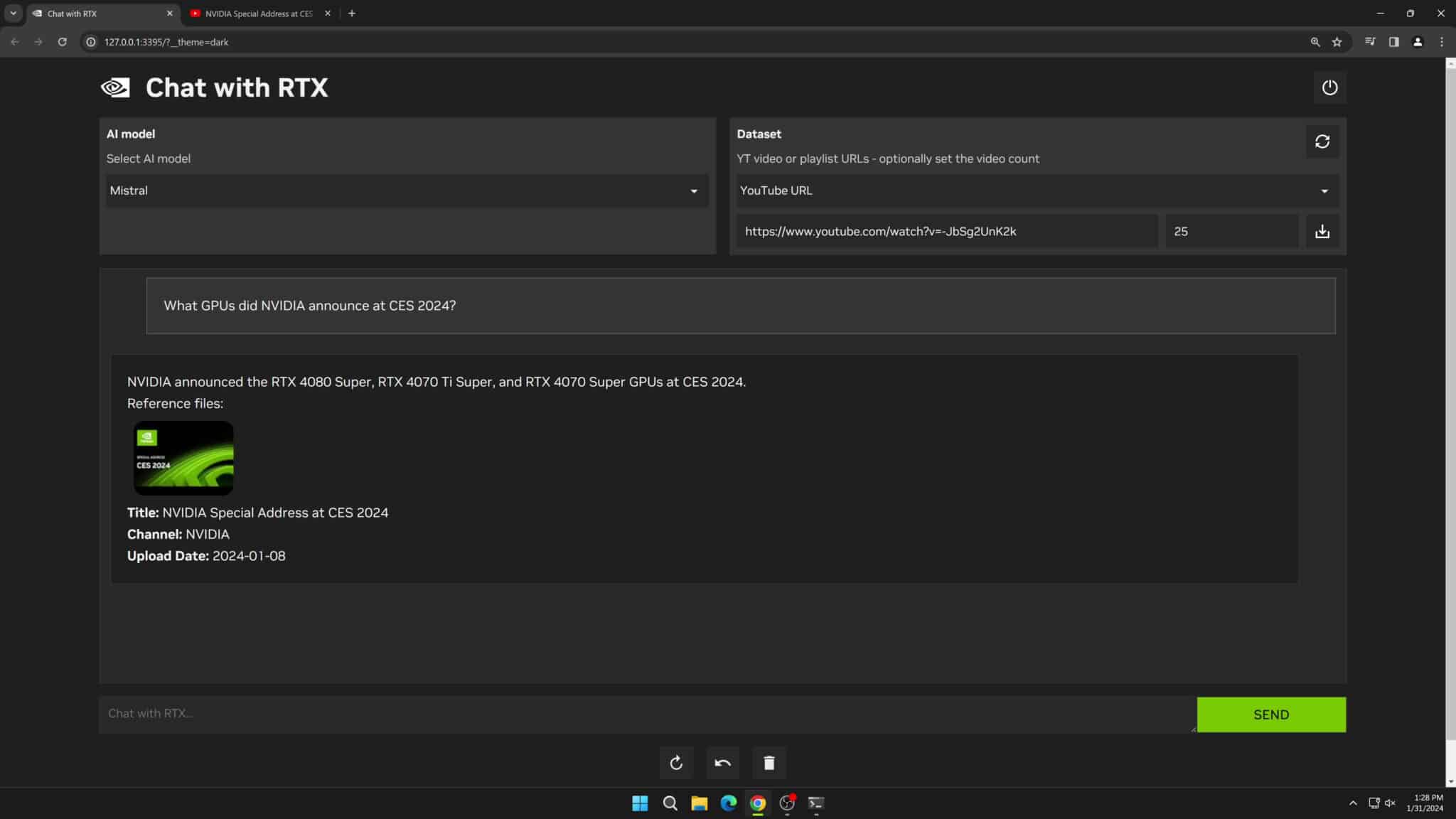

Users can also include information from YouTube videos and playlists. Adding a video URL to ChatRTX allows users to integrate this knowledge into their chatbot for contextual queries. For example, ask for travel recommendations based on content from favorite influencer videos, or get quick tutorials and how-tos based on top educational resources.

Since ChatRTX runs locally on Windows RTX PCs and workstations, the provided results are fast – and the user's data stays on the device. Rather than relying on cloud-based LLM services, ChatRTX lets users process sensitive data on a local PC without the need to share it with a third party or have an internet connection.

In addition to a GeForce RTX 30 Series GPU or higher with a minimum of 8GB of VRAM, ChatRTX requires Windows 11 and the latest NVIDIA GPU drivers.


What's New

ChatRTX uses retrieval-augmented generation, NVIDIA TensorRT-LLM software and NVIDIA RTX acceleration to bring chatbot capabilities to RTX-powered Windows PCs and workstations. Users can query their notes and documents, and ChatRTX's large language models (LLMs) quickly generate relevant responses while running locally on the user's device.

The latest version adds support for additional LLMs, including Gemma, the latest open, local LLM trained by Google. Gemma was developed from the same research and technology used to create the company's Gemini models and is built for responsible AI development. ChatRTX also now supports ChatGLM3, an open, bilingual (English and Chinese) LLM based on the general language model framework.

Users can also interact with image data thanks to support for Contrastive Language-Image Pre-training (CLIP) from OpenAI. CLIP is a neural network that, through training and refinement, learns visual concepts from natural language supervision – that is, the model recognizes what it's "seeing" in image collections. With CLIP support in ChatRTX, users can interact with photos and images on their local devices through words, terms and phrases, without the need for complex metadata labeling.
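CLIP-style search works by encoding text and images into the same vector space and ranking images by how close they are to the text query. The sketch below shows only that ranking step. The three-dimensional vectors, file names, and function names are made up for illustration; a real CLIP model produces much longer embeddings, and nothing here reflects how ChatRTX wires CLIP in.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def rank_images(text_embedding, image_embeddings):
    """Return image names sorted from most to least similar to the text."""
    return sorted(
        image_embeddings,
        key=lambda name: cosine_similarity(text_embedding, image_embeddings[name]),
        reverse=True,
    )


# Made-up embeddings standing in for the output of an image encoder.
image_embeddings = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "dog.jpg": [0.1, 0.9, 0.1],
    "receipt.png": [0.0, 0.1, 0.9],
}
# Stands in for the encoded text query "a photo of a dog".
query_embedding = [0.2, 0.8, 0.0]

ranking = rank_images(query_embedding, image_embeddings)
print(ranking)
```

Because the match happens in embedding space, no image needs a hand-written tag or caption to be found.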

The new ChatRTX release also lets people chat with their data using their voice. Thanks to support for Whisper, an automatic speech recognition system that uses AI to process spoken language, users can send voice queries to the application and ChatRTX will provide text responses.

System Requirements

- Platform: Windows 11

- GPU: NVIDIA GeForce RTX 30 or 40 Series GPU or NVIDIA RTX Ampere or Ada Generation GPU with at least 8GB of VRAM

- RAM: 16GB or greater

- Driver: 535.11 or later
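The numeric requirements above lend themselves to a simple pre-flight check. The following is a minimal sketch under stated assumptions: the `system` dictionary and its keys are hypothetical inputs the caller would have to fill in, driver versions are compared as dot-separated integers, and the GPU series check is left out because it cannot be reduced to a single number.

```python
def parse_version(text):
    """Turn a dotted version string such as '535.11' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


# Thresholds copied from the requirements list above.
REQUIRED_OS = "Windows 11"
MIN_VRAM_GB = 8
MIN_RAM_GB = 16
MIN_DRIVER = parse_version("535.11")


def unmet_requirements(system):
    """Return a list of human-readable problems; empty means all checks pass.

    `system` is a hypothetical dict with keys: os, vram_gb, ram_gb, driver.
    """
    problems = []
    if system["os"] != REQUIRED_OS:
        problems.append(f"OS must be {REQUIRED_OS}")
    if system["vram_gb"] < MIN_VRAM_GB:
        problems.append(f"VRAM must be at least {MIN_VRAM_GB}GB")
    if system["ram_gb"] < MIN_RAM_GB:
        problems.append(f"RAM must be at least {MIN_RAM_GB}GB")
    if parse_version(system["driver"]) < MIN_DRIVER:
        problems.append("Driver must be 535.11 or later")
    return problems


# Two made-up machines for illustration.
ready_pc = {"os": "Windows 11", "vram_gb": 12, "ram_gb": 32, "driver": "551.23"}
older_pc = {"os": "Windows 11", "vram_gb": 6, "ram_gb": 16, "driver": "531.18"}

print(unmet_requirements(ready_pc))
print(unmet_requirements(older_pc))
```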