What just happened? A number of Alienware m15 R5 owners have found fewer cores than expected in their RTX 3070s when checked with GPU-Z and other monitoring tools. Users claim to have fixed it by flashing the vBIOS of the R4 model, but Dell is advising against doing so until it can put together a proper fix.

Things aren't going well with Dell's Alienware gaming notebooks as of late. With the Alienware Graphics Amplifier external GPU recently killed off in favor of Thunderbolt 4 solutions, and the class-action lawsuit now being brought against the advertising of their Area 51m R1 laptops, they could be forgiven for wanting some good press right now.

Unfortunately, reports from users that the GeForce RTX 3070 GPUs in their Alienware m15 R5 gaming laptops are distinctly below par are anything but that.

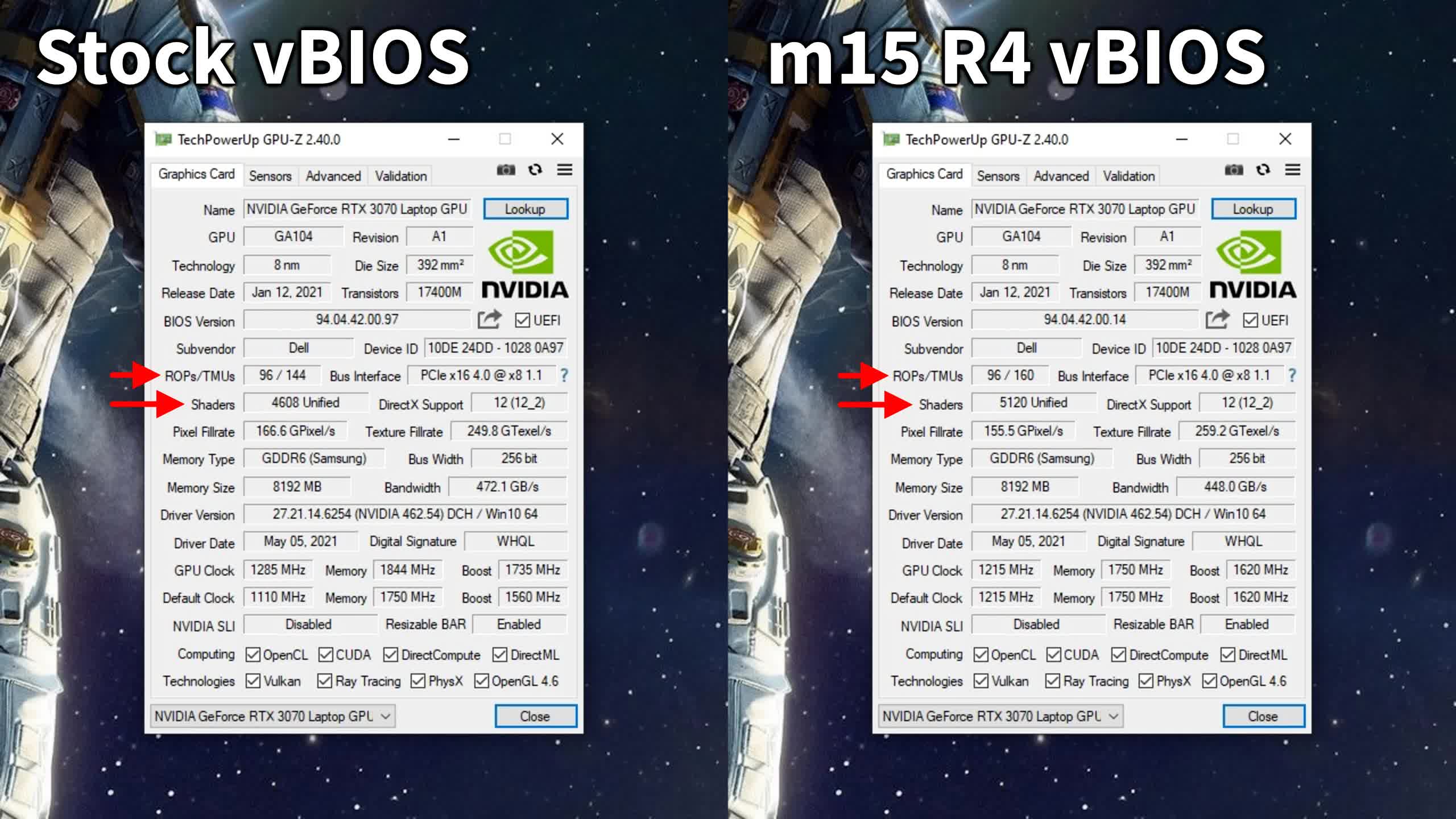

The laptop version of the RTX 3070 should sport 5,120 CUDA cores (already cut down from the desktop model by 768), but a number of m15 R5 owners have seen their laptops reporting even fewer than that, with only 4,608 cores showing up in GPU-Z, HWiNFO, and even Nvidia's own control panel.

That's a loss of 512 CUDA cores, and a remaining total that's lower than the 4,864 of the desktop RTX 3060 Ti.

Compounding things, the RTX 3070 also seems to have only 144 of the expected 160 TMUs, 144 of the expected 160 tensor cores, and 36 of the expected 40 ray tracing cores, giving the appearance that a full 10% of the entire GPU is simply unavailable, or even outright unusable. In contrast to those numbers, however, GPU-Z and HWiNFO report 96 ROPs. That is more than the expected 80 and the maximum possible on a GA104-based GPU, a count that should only be seen on the mobile RTX 3080 and desktop RTX 3070.
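The arithmetic behind that 10% figure can be checked with a short sketch. The expected and reported counts below are the ones quoted in this article; the per-SM unit counts (128 CUDA cores, 4 TMUs, 4 tensor cores and 1 RT core per streaming multiprocessor) are an assumption taken from Nvidia's published Ampere GA10x design rather than from the reports themselves, and the function names are purely illustrative.

```python
# Unit counts for the laptop RTX 3070, as quoted in the article.
EXPECTED = {"cuda_cores": 5120, "tmus": 160, "tensor_cores": 160, "rt_cores": 40}
REPORTED = {"cuda_cores": 4608, "tmus": 144, "tensor_cores": 144, "rt_cores": 36}

# Assumption (not from the article): units per streaming multiprocessor (SM)
# on Ampere GA10x-class GPUs.
UNITS_PER_SM = {"cuda_cores": 128, "tmus": 4, "tensor_cores": 4, "rt_cores": 1}


def missing_fraction(expected, reported):
    """Fraction of each unit type that is absent from the reported counts."""
    return {k: (expected[k] - reported[k]) / expected[k] for k in expected}


def implied_sms(counts):
    """Number of active SMs implied by each unit count."""
    return {k: counts[k] // UNITS_PER_SM[k] for k in counts}


if __name__ == "__main__":
    for unit, frac in missing_fraction(EXPECTED, REPORTED).items():
        print(f"{unit}: {frac:.0%} missing")
    print("SMs implied by expected counts:", implied_sms(EXPECTED))
    print("SMs implied by reported counts:", implied_sms(REPORTED))
```

Under those assumptions, every unit type points to the same conclusion: 36 active SMs instead of 40. That is consistent with whole SMs being disabled rather than individual units going missing.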

In search of a fix, some users have taken to flashing across the vBIOS from the previous model, the m15 R4. This does appear to have restored the full RTX 3070 CUDA core count, not to mention the other missing sub-components, although the abnormally high ROP count remains.

However, the user who produced the screenshots shown above later reported instability and hanging issues that forced him to revert to the stock vBIOS, potentially due to the higher TDP of the R4's vBIOS. In any case, a fix like this is always risky business. Flashing the laptop's vBIOS comes with the danger of bricking the GPU, especially when the vBIOS comes from an entirely different unit, and the soldered nature of laptop GPUs doesn't leave much room for second chances.

Dell seems to agree, telling Hot Hardware:

> We have been made aware that an incorrect setting in Alienware’s vBIOS is limiting CUDA Cores on RTX 3070 configurations. This is an error that we are working diligently to correct as soon as possible. We’re expediting a resolution through validation and expect to have this resolved as early as mid-June. In the interim, we do not recommend using a vBios from another Alienware platform to correct this issue. We apologize for any frustration this has caused.

We'll have to see how Dell goes about fixing this issue, but with graphics TDP varying between units, and the mobile GPU silicon being more heavily cut-down from desktop compared to previous generations, another case of laptops not quite performing as expected is unlikely to go down well with the gaming crowd.

https://www.techspot.com/news/89950-alienware-vbios-abducts-cuda-cores-rtx-3070.html