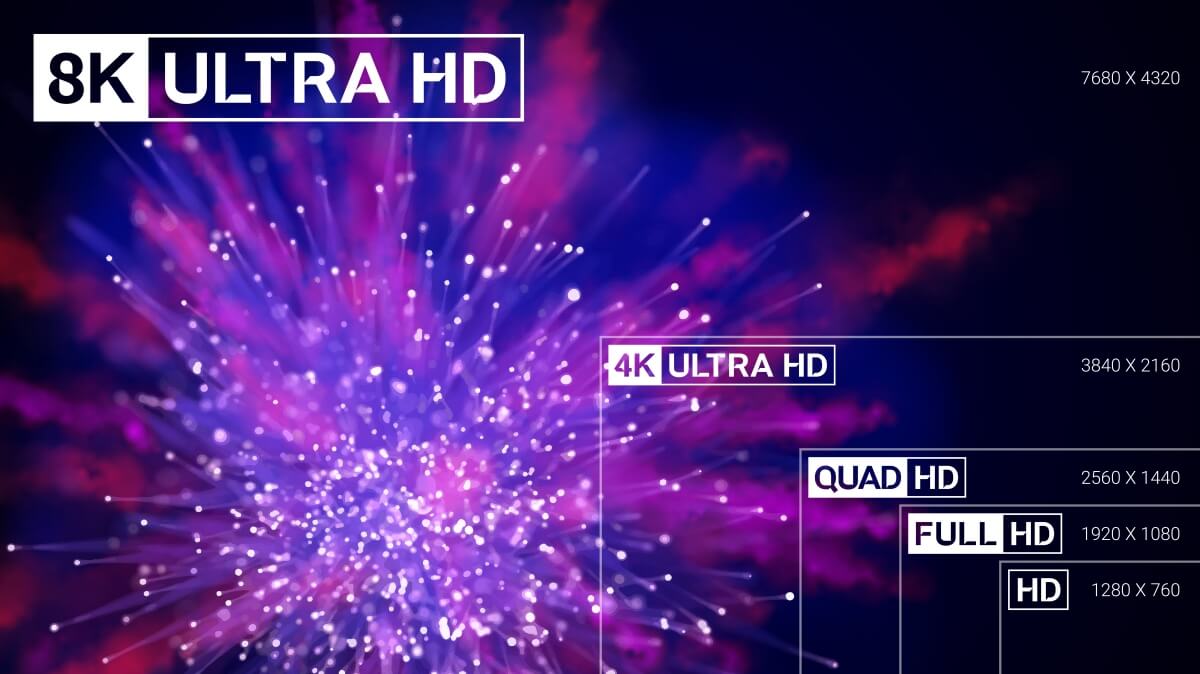

Pixel-to-vector mixed compression (semi-vector compression), combined with AI-based approximation. Plus, there are already AI-based upscaling solutions that are way better than regular pixel upscaling and anti-aliasing.

Or, to put it in other terms and expand the concept: yes, you can create something out of nothing, as long as it fits with what the viewer expects to see.

Think for a moment about what would happen if the task were given to a human and it was one frame, a still image. Say they have painting talent, like many art school grads/consumers of green do, and let's pretend they are given a 640x480 face printed on a 30in medium that accepts their paint of choice.

Is it so hard to conceive that they could add lines, colors/tones and shading to greatly improve the detail in this image? Have you seen any museum art galleries? Photorealism from scratch is something a talented painter can pull off.

With a bit of artistic skill and preferably a drawing tablet, one could take a 640x480 image of a face and paint in the lines and shadows that were thrown out because there weren't enough dots to represent them.

The face isn't important and the rez isn't important. What's important to make it fly is that the additions are context aware, aka they fit what people expect in a face, bridge, building, etc.

If they do, the majority of people will never notice them.

30 of these images per second is a double-edged sword. On the one hand, you see each one for a lot less time. On the other, you add the challenge that anything added had better follow the spot it was added to, aka if the face gets closer to the cam, everything had better scale with it. Same goes if the actor turns 90deg, or if the camera is moving around any living or inanimate subject.
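To make the "everything had better scale" part concrete, here is a rough sketch of my own, not anything a real upscaler is known to do. It assumes you already have two tracked landmarks (say, the eyes) in both frames, and it only handles in-plane scale, rotation and shift. A real 90deg head turn would need a 3D model. The function names are made up for illustration.

```python
def similarity_from_two_points(p0, p1, q0, q1):
    """Find the scale+rotation+shift that maps p0 -> q0 and p1 -> q1.

    Points are treated as complex numbers, so the transform is q = a*p + b.
    abs(a) is the scale factor and the angle of a is the rotation.
    Assumption: the two landmarks are distinct and tracked correctly.
    """
    p0c, p1c = complex(*p0), complex(*p1)
    q0c, q1c = complex(*q0), complex(*q1)
    a = (q1c - q0c) / (p1c - p0c)
    b = q0c - a * p0c
    return a, b


def apply_transform(a, b, points):
    """Move painted-in detail points along with the tracked subject."""
    moved = []
    for x, y in points:
        q = a * complex(x, y) + b
        moved.append((q.real, q.imag))
    return moved


# Eyes in frame 1, then in frame 2 after the face moved closer (2x bigger).
a, b = similarity_from_two_points((100, 100), (140, 100), (90, 100), (170, 100))
painted_detail = [(120, 120)]  # hypothetical spot where detail was added
print(abs(a))                                 # 2.0
print(apply_transform(a, b, painted_detail))  # [(130.0, 140.0)]
```

The point is just that once the tracker gives you where the landmarks went, moving the painted detail with them is cheap. The hard part is the tracking and the painting.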

If you look into it, there are freely available libraries that can track and identify subjects or features in an image; see OpenCV. Getting to the point of having AI paint with that data is no small feat, but it's certainly within the realm of possible now and getting easier every day.

If you limit it to source content that can accept delay (aka games are out), the task is way easier. If you add, say, a 2 second delay on channel change, you make it so the AI isn't racing the clock to produce and validate each frame.

The major risk with smaller windows is when you cut from an Olsen twin to the shot of the bridge. The AI needs to realize that the context of what it's supposed to paint is different and that its previously tracked points are invalid. If the AI gets caught out (er, probably shouldn't say with its pants down lol), you end up with the actor's face/the painted upscale additions as part of the bridge, or some weird jumbled mess in between the two frames, or what would be a new twist on the term compression artifact. The more frames the AI can churn through ahead of what is being blasted to your eyes, the less likely this is to happen.
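Here's a toy sketch of that look-ahead idea, under a pile of assumptions: frames are just flat lists of grayscale values (0-255), a "cut" is guessed from a big jump in the brightness histogram, and the threshold of 0.5 is pulled out of thin air. Real scene detection is fancier, and all the names here are mine.

```python
from collections import deque


def histogram(frame, bins=16):
    """Normalized brightness histogram of a flat list of 0-255 pixel values."""
    counts = [0] * bins
    for value in frame:
        counts[min(value * bins // 256, bins - 1)] += 1
    return [c / len(frame) for c in counts]


def hist_distance(h1, h2):
    """0.0 for identical histograms, 1.0 for completely different ones."""
    return 0.5 * sum(abs(x - y) for x, y in zip(h1, h2))


def _emit(buffer):
    # Pop the oldest frame and report how far away the next cut is, if any.
    index, is_cut = buffer.popleft()
    frames_until_cut = next(
        (i + 1 for i, (_, cut) in enumerate(buffer) if cut), None
    )
    return index, is_cut, frames_until_cut


def play_with_lookahead(frames, lookahead, threshold=0.5):
    """Yield (frame_index, is_cut, frames_until_next_cut) with a delay.

    The viewer sees each frame `lookahead` frames late, so the painter
    knows a cut is coming and can drop its tracked points in time.
    """
    buffer = deque()
    prev_hist = None
    for index, frame in enumerate(frames):
        hist = histogram(frame)
        is_cut = prev_hist is not None and hist_distance(prev_hist, hist) > threshold
        prev_hist = hist
        buffer.append((index, is_cut))
        if len(buffer) > lookahead:
            yield _emit(buffer)
    while buffer:  # drain what is left at the end of the stream
        yield _emit(buffer)


dark_face = [10] * 64      # stand-in for the actor shot
bright_bridge = [240] * 64  # stand-in for the bridge shot
for row in play_with_lookahead([dark_face] * 3 + [bright_bridge] * 3, lookahead=2):
    print(row)
```

With a 2-frame buffer, frame 1 already reports that a cut is 2 frames away, so the tracked face points can be thrown out before the bridge ever hits the screen. A 2 second delay at 30fps would be `lookahead=60`.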

If the source content were compressed in a format that included vector info, I'd bet the task of keeping things where they should be gets a whole lot smaller.
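A sketch of why vector info would help, assuming the stream hands you a per-block motion vector for each frame (block-based codecs like H.264 carry something along these lines). The dictionary layout and the 16-pixel block size are assumptions of mine for illustration, not any particular decoder's API.

```python
def advance_points(points, motion_vectors, block_size=16):
    """Shift each tracked point by the motion vector of the block it sits in.

    motion_vectors maps (block_x, block_y) -> (dx, dy) in pixels.
    Blocks with no entry are assumed not to have moved.
    """
    moved = []
    for x, y in points:
        block = (int(x) // block_size, int(y) // block_size)
        dx, dy = motion_vectors.get(block, (0, 0))
        moved.append((x + dx, y + dy))
    return moved


tracked = [(20, 20), (100, 40)]   # hypothetical painted-detail anchors
vectors = {(1, 1): (3, -2)}       # only block (1, 1) moved this frame
print(advance_points(tracked, vectors))  # [(23, 18), (100, 40)]
```

If the vectors come for free with the stream, the AI doesn't have to re-find every point from pixels each frame; it just nudges them and spends its time on the painting.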

I'm an AI noob but have a programming background and some friends who are anything but AI noobs. I'd think this is the sort of task that would be accomplished with something like a generative adversarial network (GAN), but I could be off base.