Forward-looking: OLED gaming monitors are becoming increasingly popular and, while most are still pricey, they are expected to get cheaper as time goes on. But what does the future of these displays look like? According to an industry insider, a slew of new 240Hz 32-inch and larger OLED panels will arrive next summer.

Writing in a post on Reddit, a representative for boutique monitor startup Dough gave a good explainer of the different types of OLED panels – W-OLED, RGB OLED, and QD-OLED – including their structure, advantages, and disadvantages.

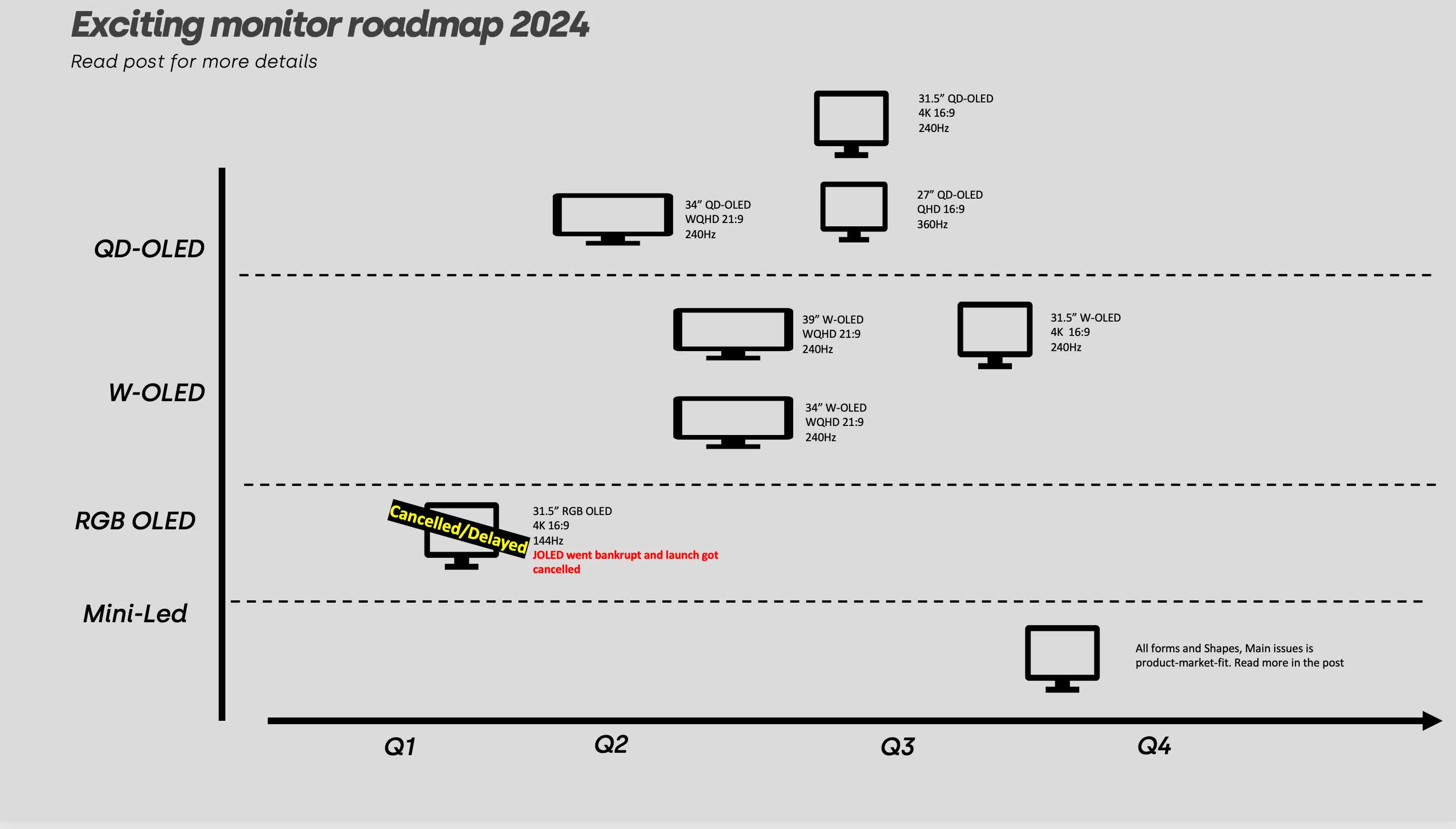

The post also includes a roadmap of OLED panels expected to arrive next year, though the earliest entry, a 31.5-inch 4K 144Hz RGB OLED from JOLED (the only panel of that type on the map), has been canceled. The Japanese company, formed from the OLED businesses of Sony and Panasonic in 2015, went bankrupt in March, so its panel isn't coming to market. The RGB OLED subpixel structure offers better color accuracy and text rendering, and it doesn't suffer from the color fringing caused by the unusual subpixel layouts of QD-OLED and W-OLED.


Most of the new panels are slated for the middle of next year. Three W-OLEDs are expected to arrive from LG in 31.5-inch (4K), 34-inch (WQHD), and 39-inch (WQHD) sizes, all with 240Hz refresh rates. Samsung also has 31.5-inch 4K and 34-inch WQHD panels with 240Hz refresh rates on the map, along with a 27-inch QHD panel with a 360Hz refresh rate.

OLED monitors remain more expensive than LCDs. Next year's QHD models are expected to cost between $700 and $1,100, while the 4K models are predicted to fall between $1,000 and $1,500.

Other panels not on the map are launching next year, but the Dough rep says they only included what they think are the most exciting ones.

LCD panels are another notable absence from the roadmap. The rep says LG is following Samsung, which exited the LCD business last year, by drastically cutting down on these models, mainly because the likes of BOE, backed by Chinese government subsidies, can now make better-quality LCDs at lower prices.

In June, industry insider Bob Raikes spoke with Merck, a supplier of liquid crystal materials to companies like Samsung, and said the conversation led him to believe that no further advancements will be made in the core display technology. Merck later disputed the claim, emphasizing that miniLED backlights and optical components, including quantum dots, phosphors, and films, will continue to be developed for LCD TVs.

The Dough rep also assured people that LCDs were here to stay, especially in lower-to-mid-range products, with their costs falling as refresh rates and resolutions increase.

https://www.techspot.com/news/99653-slew-32-inch-4k-oled-gaming-monitors-240hz.html