Why it matters: Nvidia's new flagship RTX 4090 is a beast in multiple respects. Alongside its unprecedented gaming performance, the card has drawn consumer worry over its power requirements. Those fears may now be borne out, weeks after warnings about the GPU's power connectors.

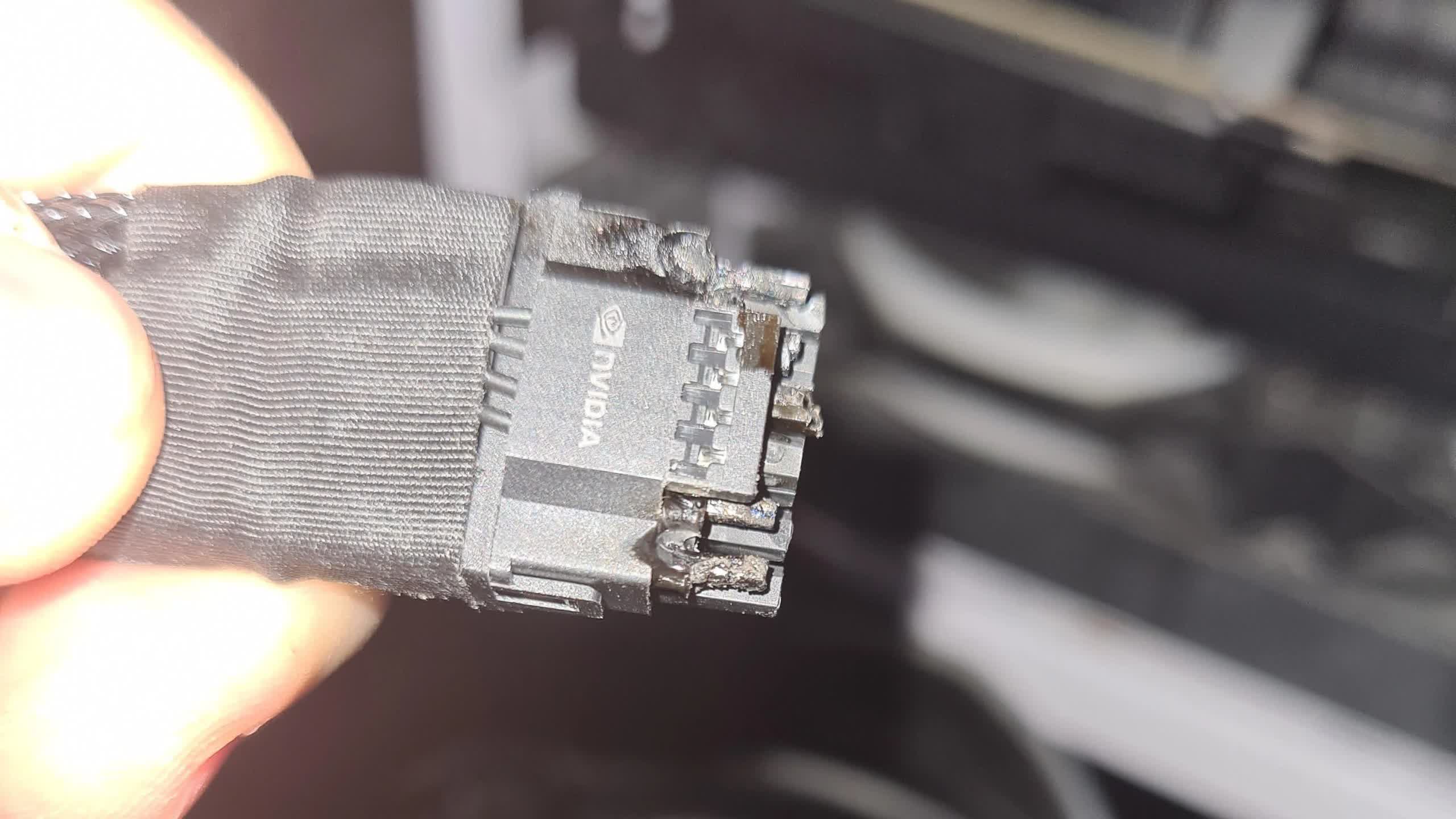

Nvidia said it's investigating at least two cases of burning and melting power adapters for its new flagship RTX 4090 graphics card. The GPU's reliance on a new power connector standard has already caused some controversy.

Two Reddit users shared photos of burnt and melted RTX 4090 power connectors, with damage visible at the end of the cable and on the card itself. Nvidia contacted one of the owners in hopes of diagnosing the problem. It's unclear if the incidents are related to issues the Peripheral Component Interconnect Special Interest Group (PCI-SIG) highlighted in September.

The core of the controversy is the new power connector standard the RTX 4000 series graphics cards use. The GPUs employ the 16-pin 12VHPWR cable standard for ATX 3.0 power supplies. Those looking to upgrade without replacing their ATX 2.0 PSUs can use the adapters bundled with the cards, which connect three or four 8-pin cables to one 12VHPWR cable.

Last month, PCI-SIG warned that certain situations could put the adapters in danger of over-current and over-power. For example, pushing the PSU to its limit in a hot case could burn the adapter connectors, but Nvidia claims it fixed the issue before the official launch. Cable vendor CableMod warns that bending the cables within 35mm of the connector can threaten the connections since the 12VHPWR connectors are smaller than those of previous generations.

It's unclear if either of these concerns is behind the Reddit users' incidents. In any case, it's probably safest to use RTX 4000 GPUs with ATX 3.0 PSUs if you can afford the upgrade.

In response to the burnt connector photos, AMD confirmed that its upcoming RTX 4000 competitor, the RDNA 3 GPU lineup, wouldn't use 12VHPWR. Many users will likely celebrate the new cards retaining full compatibility with their existing PSUs.

Worries over 12VHPWR came in addition to early concerns that the RTX 4090 might draw 800W. Nvidia confirmed that it only needed 450W, recommending an 850W PSU for systems using the card. However, some AIB partners suggest PSUs over 1,000W out of caution for users attempting to pair the GPUs with cheap PSUs.
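The numbers above can be put side by side with a quick back-of-the-envelope calculation. The sketch below is only illustrative: it assumes the commonly cited ratings of 150W per PCIe 8-pin connector and 600W maximum for 12VHPWR (neither figure comes from Nvidia's statement), and the function names are hypothetical.

```python
# Assumed ratings (not from the article): 150 W per PCIe 8-pin connector,
# 600 W maximum for a 12VHPWR connector.
PCIE_8PIN_WATTS = 150
MAX_12VHPWR_WATTS = 600

# Figures quoted in the article.
RTX_4090_WATTS = 450
RECOMMENDED_PSU_WATTS = 850


def adapter_capacity(num_8pin: int) -> int:
    """Nominal power an 8-pin-to-12VHPWR adapter can carry, capped at the 12VHPWR rating."""
    return min(num_8pin * PCIE_8PIN_WATTS, MAX_12VHPWR_WATTS)


def psu_headroom(psu_watts: int, gpu_watts: int) -> int:
    """Power left for the rest of the system once the GPU's draw is subtracted."""
    return psu_watts - gpu_watts


if __name__ == "__main__":
    for plugs in (3, 4):
        capacity = adapter_capacity(plugs)
        margin = capacity - RTX_4090_WATTS
        print(f"{plugs} x 8-pin adapter: {capacity} W nominal, {margin} W above the card's draw")

    rest = psu_headroom(RECOMMENDED_PSU_WATTS, RTX_4090_WATTS)
    print(f"{RECOMMENDED_PSU_WATTS} W PSU leaves {rest} W for the rest of the system")
```

Under those assumptions, a three-plug adapter has no nominal margin over the card's 450W draw, which may be part of why some partners recommend larger power supplies.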

Rumors indicate Nvidia intended to launch an even more powerful RTX 4000 card, the Lovelace Titan, but canceled it because it required too much energy. Reports say that it melted PSUs and tripped breakers in internal tests. However, the monster card could return with 27Gbps or GDDR7 memory.

https://www.techspot.com/news/96444-nvidia-investigating-cases-melting-rtx-4090-power-cables.html