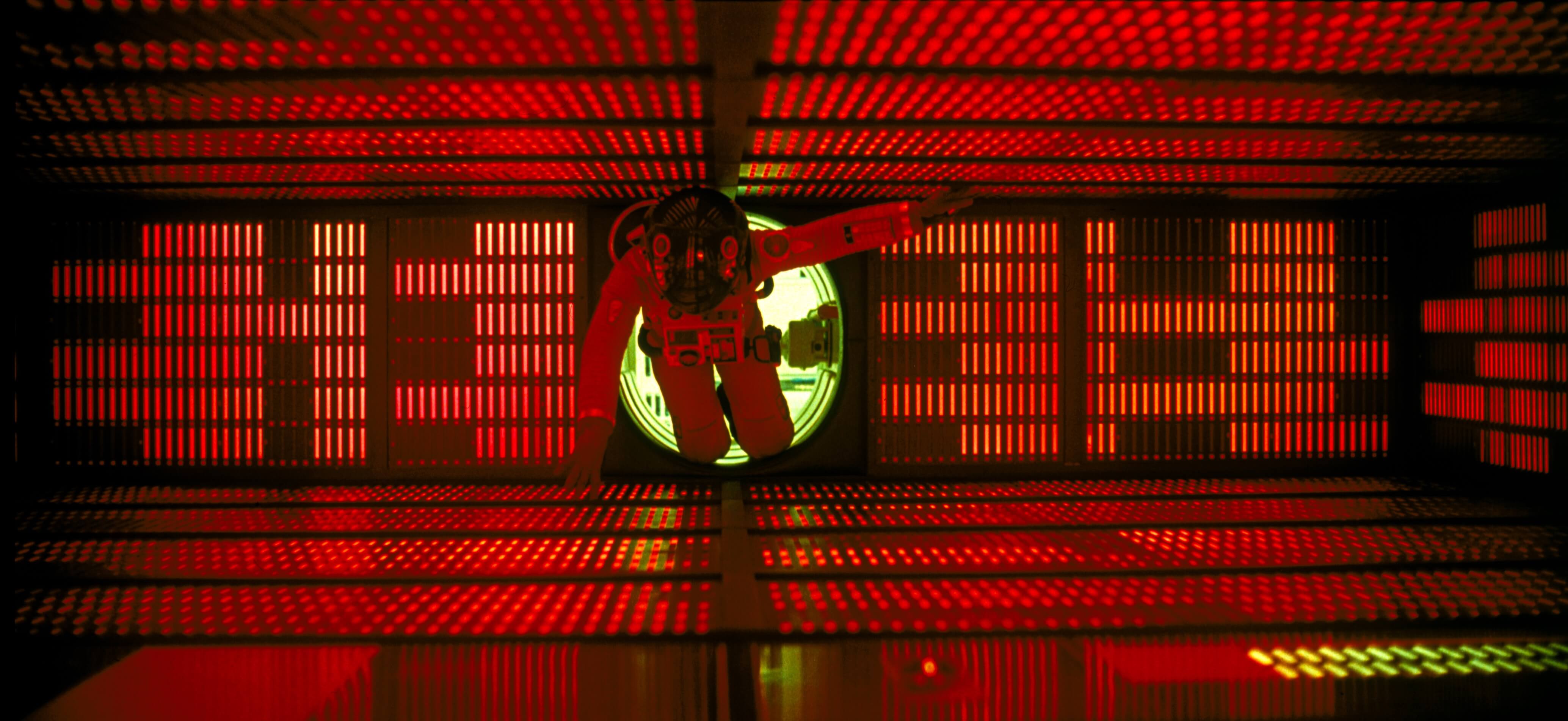

Through the looking glass: National broadcaster NHK launched the world's first 8K TV channel in Japan yesterday. NHK supplied guests at the launch ceremony with 8K televisions and dedicated satellite dishes, and, not unironically, they celebrated the beginning of 8K television with the "masterpiece of film history," 2001: A Space Odyssey.

Adoption of 8K television is still a pipe dream for most of the world, but in Japan, the government has pushed networks to be at the forefront. NHK has happily complied, and their research and development over the last few years have culminated in a dedicated 8K TV channel.

For the first-ever 8K television broadcast, NHK wanted to show a classic, something everyone could respect. Unfortunately, only the most recent films have been shot in 8K, and none of them suited the occasion. To work around this, NHK asked Warner Brothers to scan the original negatives of the 1968 sci-fi film 2001: A Space Odyssey, which happened to have been shot on 70mm film, the best format available at the time.

According to NHK, "the many famous scenes become even more vivid, with the attention to detail of director Stanley Kubrick expressed in the exquisite images, creating the feeling of really being on a trip in space, allowing the film to be enjoyed for the first time at home."

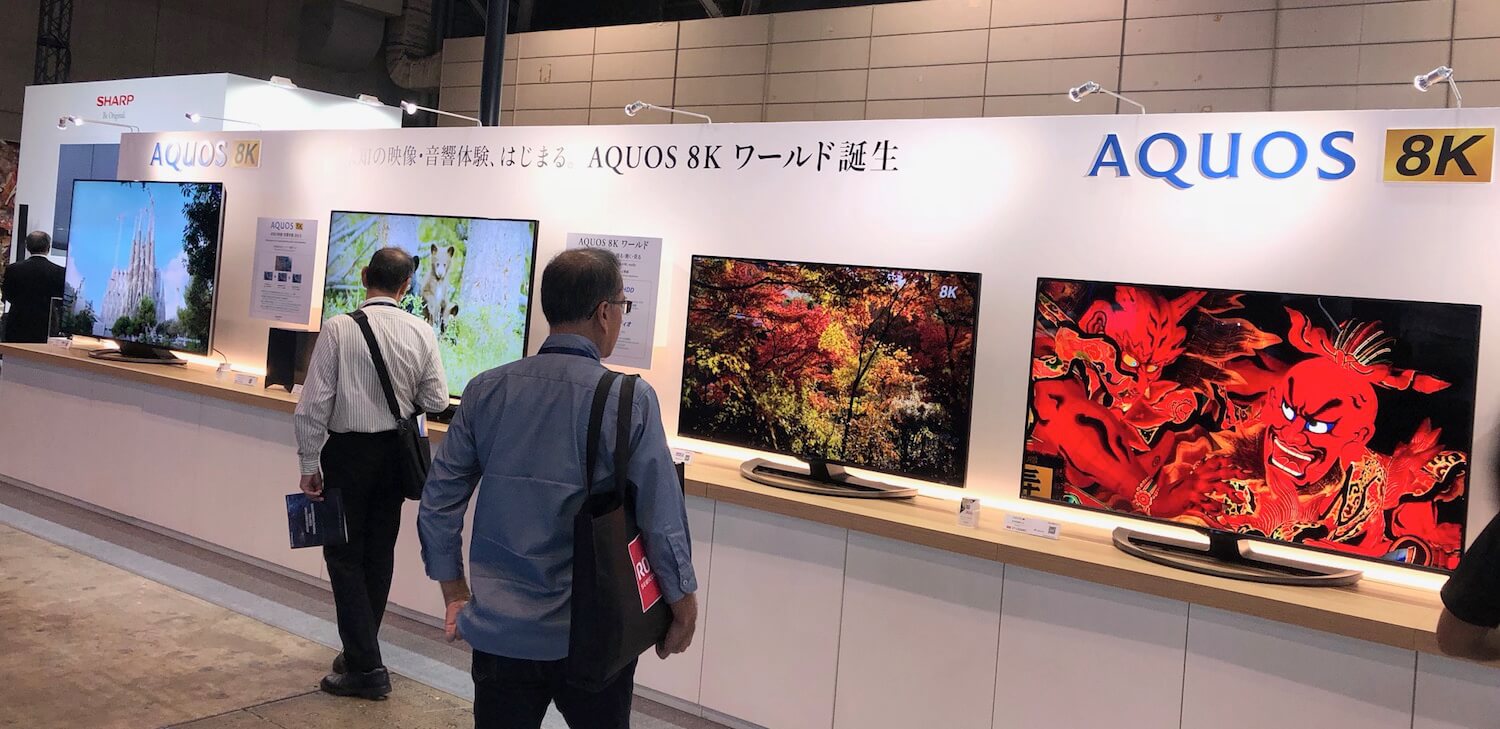

In addition to upgrading the film to 8K, NHK also remastered the audio for the 22.2-channel sound system it will broadcast with from now on. Because the audio has such high fidelity, it needs a cable of its own, on top of the four HDMI cables the picture requires. At one end of this setup is Sharp's $2,200 satellite dish; at the other is Sharp's $14,000 8K Aquos TV.

The new 8K channel will broadcast exclusively in Japan for around 12 hours a day. Rather than upscaling 4K video, as Samsung has been hoping to do, NHK will film new content and events and show recent high-budget 8K movies. Soon, it will also send a film crew to Italy to present "popular tourist attractions from Rome, as well as food, culture and history." In March, it will show the 1964 hit My Fair Lady starring Audrey Hepburn, another film shot on 70mm.

All this is of course in preparation for the 2020 Tokyo Olympics, which NHK will be broadcasting live in 8K. A major part of the Japanese government’s plan, the Tokyo Olympics may finally make 8K something worth considering. Communications Minister Masatoshi Ishida says that he hopes Japan can play a leading role in 8K broadcasting.

Will 8K ever become mainstream? If it does, it may be adopted first on VR displays and close-up screens like tablets, because on a television viewed from across the room, the difference from other high resolutions like 4K or 6K is much harder to see. For reference, Sharp's 70" 8K TV has 126 pixels per inch, which is nearly 40% higher than a 24" 1080p monitor, a screen you'd sit at least 6 feet closer to.
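The pixel-density comparison above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the standard 7680x4320 resolution for 8K, 1920x1080 for 1080p, and that each screen's advertised diagonal is exact (real panels are often a fraction of an inch smaller). The helper name `ppi` is purely illustrative.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch, measured along the screen diagonal (illustrative helper)."""
    diagonal_px = math.hypot(width_px, height_px)  # pixel count along the diagonal
    return diagonal_px / diagonal_in


# Assumed specs: nominal diagonals, standard 8K UHD and 1080p resolutions.
tv_8k = ppi(7680, 4320, 70)         # Sharp's 70" 8K TV
monitor_1080p = ppi(1920, 1080, 24)  # a typical 24" 1080p monitor
increase = tv_8k / monitor_1080p - 1

print(f'70" 8K TV:        {tv_8k:.1f} PPI')
print(f'24" 1080p monitor: {monitor_1080p:.1f} PPI')
print(f"Increase:          {increase:.0%}")
```

Under these assumptions the TV comes out at about 126 PPI against roughly 92 PPI for the monitor, about 37% higher. Because 8K has exactly four times the linear resolution of 1080p at the same 16:9 aspect ratio, the ratio simplifies to 4 × 24 / 70.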

The irony of choosing a sci-fi classic to demonstrate what is nearly sci-fi technology hasn’t gone unnoticed. But by making 8K content available, NHK has finally created a use case that may bring enough consumers to the table to merit the development of cheaper 8K televisions.

https://www.techspot.com/news/77667-world-first-8k-tv-channel-has-kicked-off.html