In brief: As Nvidia's RTX 4070 is expected to launch tomorrow with 12GB of GDDR6X, AMD has decided now's a good time to take a shot at its rival by pointing out how much memory new games require when played at higher resolutions. Team Red notes that its mid- to high-end cards have more VRAM than Nvidia's equivalents, though some of its benchmark results don't exactly line up with our own.

In a post titled 'Building an Enthusiast PC,' AMD writes that graphics card memory requirements are increasing as the games industry embraces movie-quality textures, complex shaders, and higher-resolution outputs.

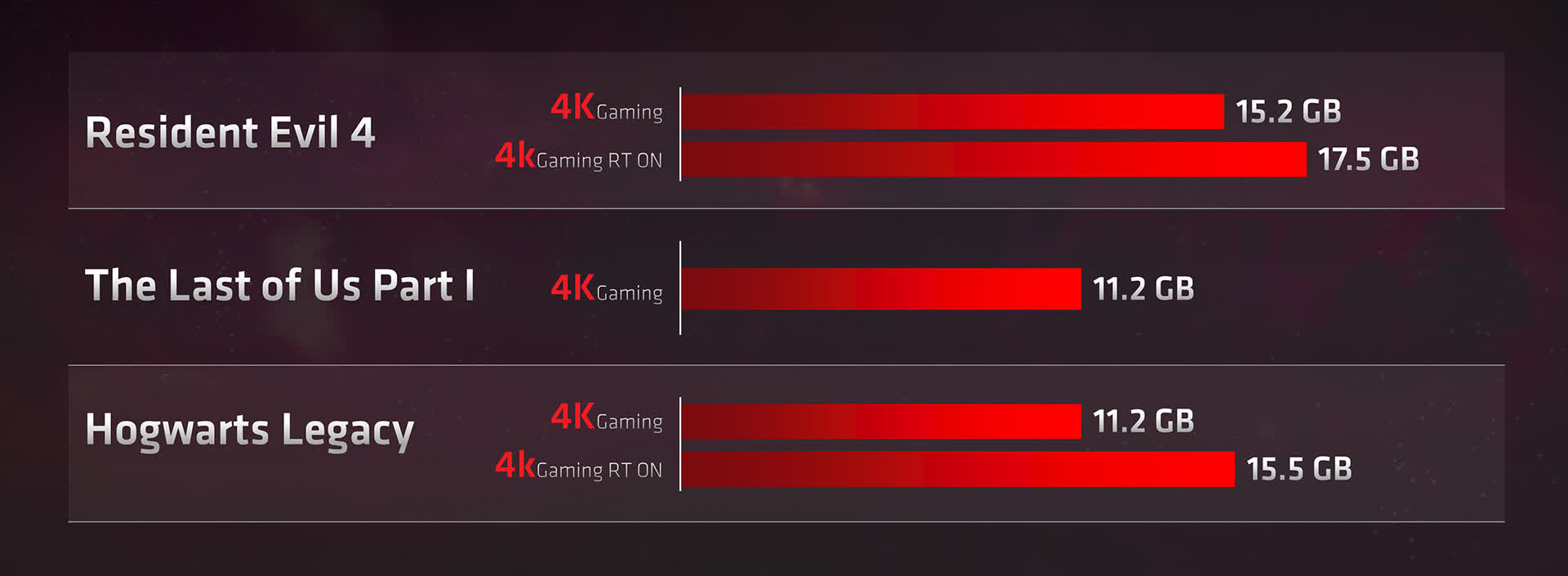

The post highlights some of the most VRAM-hungry recent games and their peak memory usage when playing in 4K: Resident Evil 4 (15.2GB/17.5GB with RT), The Last of Us Part 1 (11.2GB), and Hogwarts Legacy (11.2GB/15.5GB with RT). It notes that without enough video memory to play games at higher resolutions, players are likely to encounter lower fps, texture pop-in, and even game crashes.

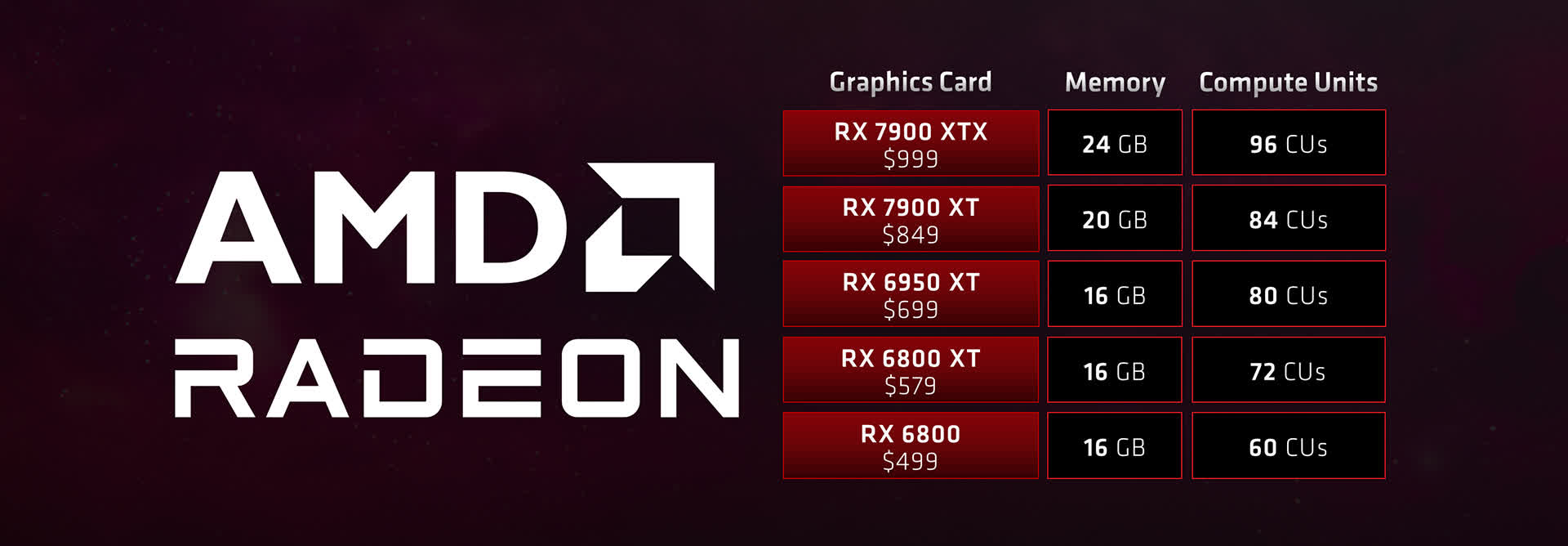

As such, AMD has listed which of its cards are best suited for various resolutions: the 6600 series with its 8GB of VRAM for 1080p, the 6700 series (12GB) for 1440p, and the 6800 and above (16GB+) for 4K. The latest Radeon cards, the RX 7900 XT and RX 7900 XTX, pack 20GB and 24GB of VRAM, respectively.

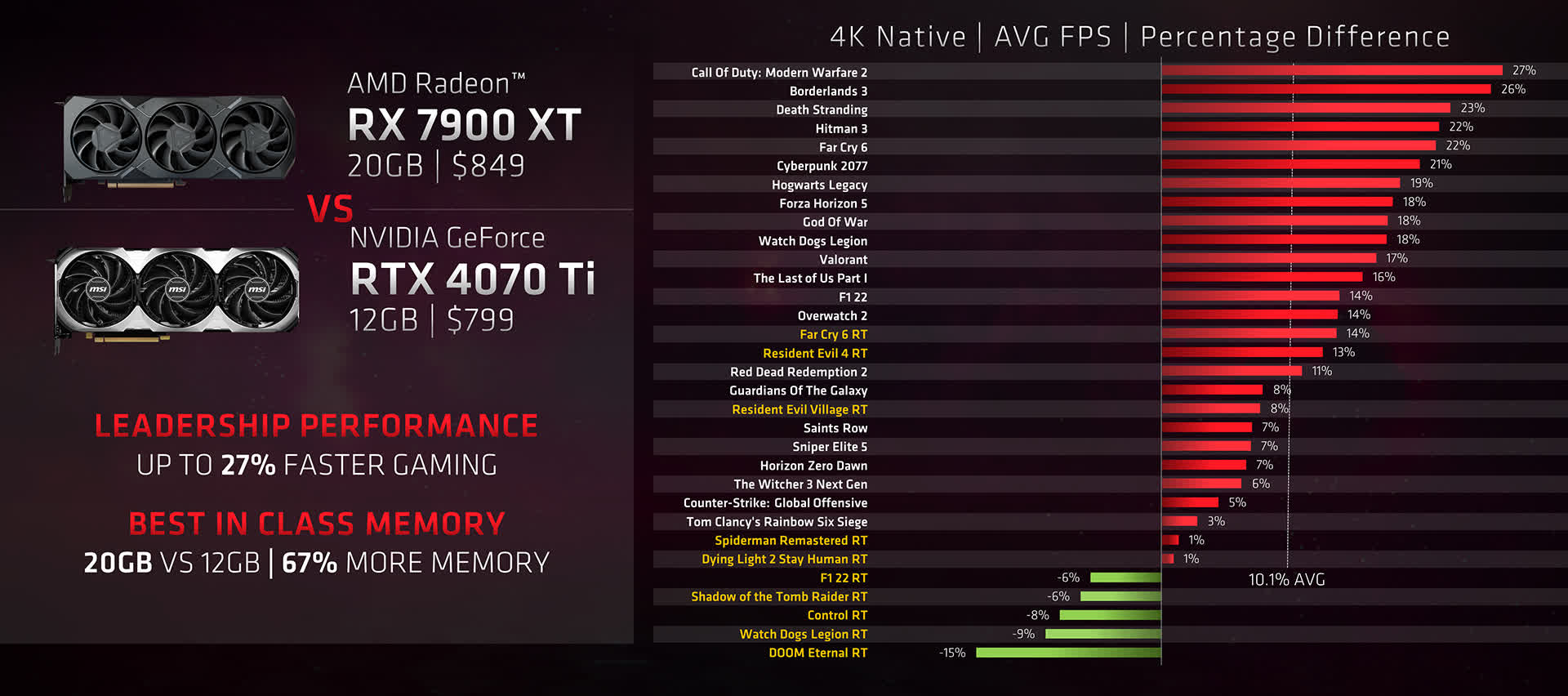
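AMD's resolution guidance amounts to a simple lookup from VRAM capacity to a target resolution. The following is a minimal sketch of that mapping, assuming the thresholds work as hard cutoffs; the function name `suggested_resolution` and the `VRAM_TIERS` table are hypothetical names chosen for illustration and do not come from AMD's post. It models VRAM capacity only and ignores GPU performance.

```python
# Tiers as listed in AMD's post: (minimum VRAM in GB, suggested resolution).
# Ordered from highest to lowest so the first match wins.
# Assumption: the thresholds are treated as hard cutoffs for illustration.
VRAM_TIERS = [
    (16, "4K"),
    (12, "1440p"),
    (8, "1080p"),
]


def suggested_resolution(vram_gb):
    """Return the highest tier whose VRAM floor is met, or None if below all tiers."""
    for min_gb, resolution in VRAM_TIERS:
        if vram_gb >= min_gb:
            return resolution
    return None


if __name__ == "__main__":
    # VRAM capacities as stated in the article.
    cards = [("RX 7900 XTX", 24), ("RX 7900 XT", 20), ("RTX 4070 Ti", 12)]
    for name, vram in cards:
        print(f"{name} ({vram}GB): {suggested_resolution(vram)}")
```

By this VRAM-only rule, a 12GB card such as the RTX 4070 Ti lands in the 1440p tier, which is the point AMD's post is driving at.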

The post doesn't miss out on the opportunity to highlight the price difference between AMD cards and Nvidia's. One benchmark comparison is between the $849 RX 7900 XT (20GB) and the $799 RTX 4070 Ti (12GB), though finding the latter at that price isn't easy.

The benchmark charts cover 32 "select" games at 4K. AMD obviously wants to look good here, hence the extensive notes about the various system configurations used in the testing. For example, its RX 7900 XT vs. RTX 4070 Ti chart has the former card 10.1% faster on average at 4K, while our own results put the difference at just 3% in favor of the Radeon.

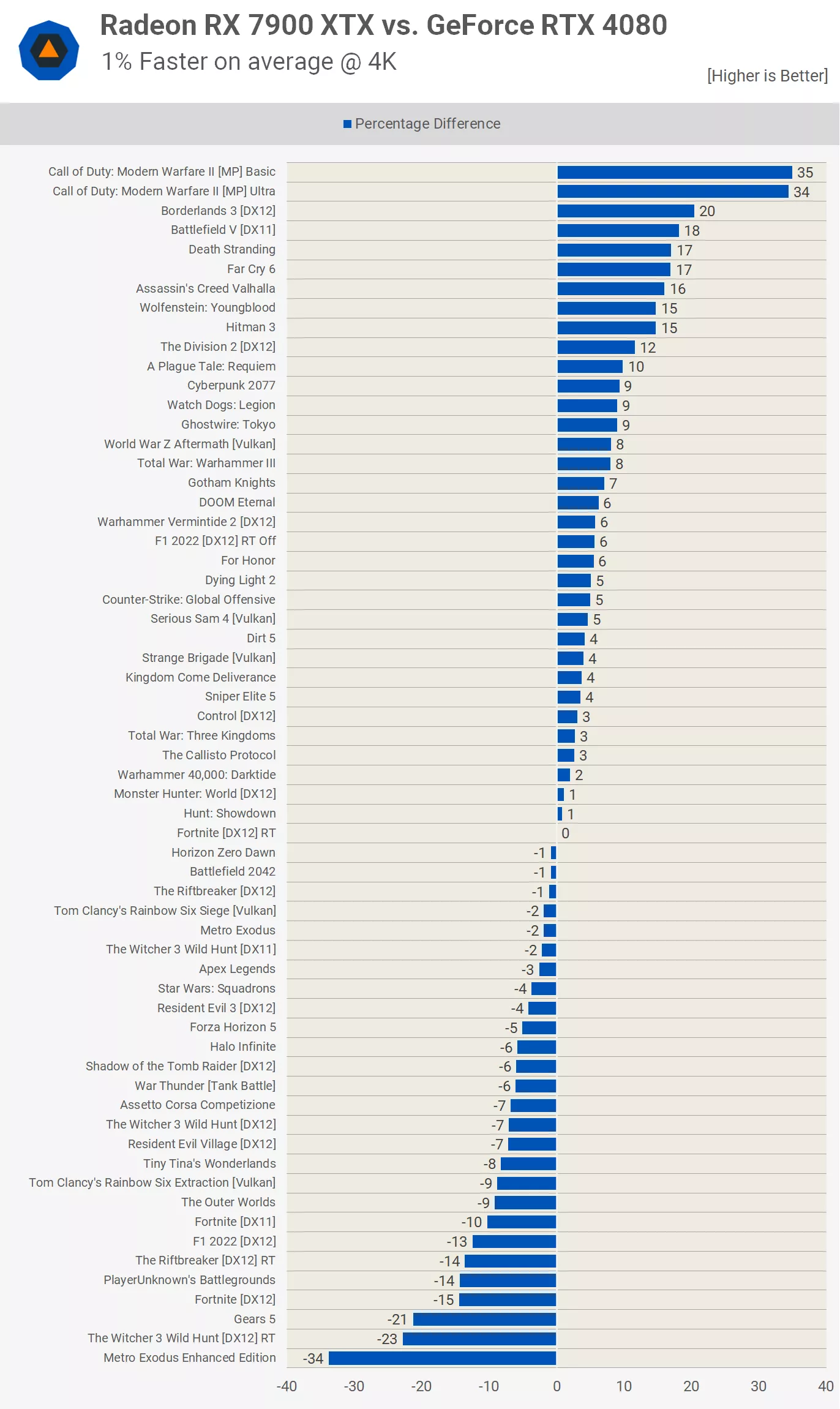

It's a similar story with the RX 7900 XTX vs. RTX 4080. AMD has its card 7.6% ahead on average; our testing puts the Radeon a mere 1% faster. The AMD card is still a couple of hundred dollars cheaper than Nvidia's, however.

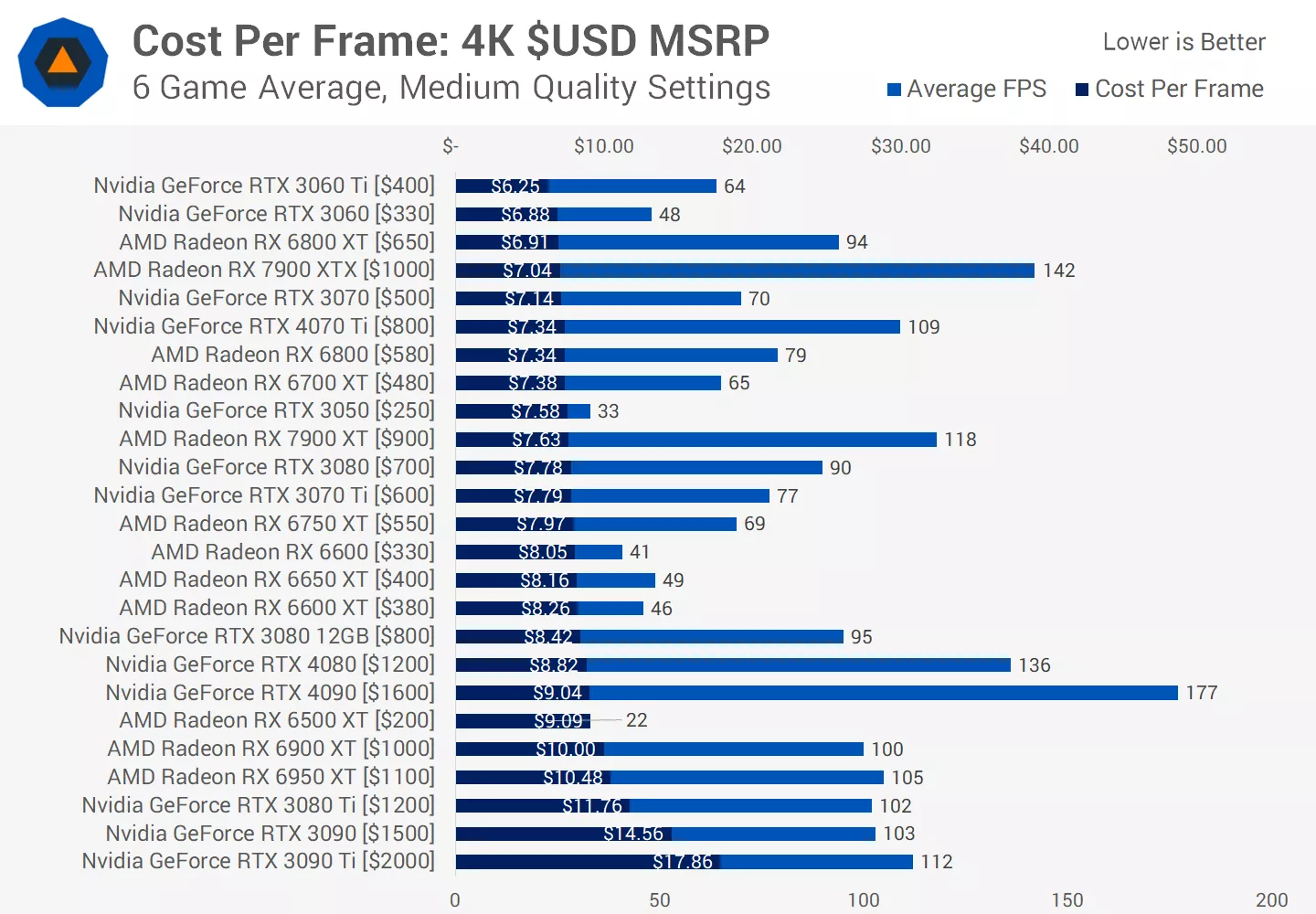

While memory size in graphics cards can make a difference, especially when playing in 4K, it's not the only factor. AMD usually packs more VRAM into its cards, but that doesn't stop Nvidia's equivalents from matching and, in some scenarios, outperforming them. However, AMD's claim of offering better performance per dollar at the high end is something we agree with – only the RTX 3060 and its Ti version provide better value than the RX 6800 XT and 7900 XTX in our testing (below). It'll be interesting to see how much the RTX 4070 shakes things up.

https://www.techspot.com/news/98280-amd-takes-aim-nvidia-highlights-importance-vram-modern.html