Why it matters: There are hints that AMD is prepping mid-range RDNA 3 GPUs, but the company may take its sweet time bringing them to market. The GPU market definitely needs more competition in this segment, though with gamers rejecting the RTX 4070 it should surprise no one that Team Red isn't interested in falling into a price trap should Nvidia decide to discount its Ada offerings.

AMD's Radeon RX 7900 XT is the second-best-selling high-end graphics card on Amazon right now, and the XFX Speedster Merc310 model is currently $797.30 – 16 percent off its list price. As noted by Steven in his extensive analysis of the card against the similarly-priced RTX 4070 Ti, the RX 7900 XT is faster in rasterization and offers more VRAM, so it's an easy choice for people who don't care much about ray-tracing performance.

However, many gamers have been waiting for Team Red to come up with mid-range offerings at a more palatable price point. So far, the company has been relatively quiet on that front, though we know it isn't building an RTX 4090 competitor on the RDNA 3 architecture, as that would run against AMD's general philosophy of balancing price and competitive performance.

The latest Steam survey shows Nvidia GPUs dominating, with the RTX 3060 the most popular card overall. AMD needs to build strength in the value segment, where Intel is also looking to gain ground with its Alchemist GPUs, all of which have received price cuts in the past several weeks.

The rumor mill says we'll see the RX 7800 XT, RX 7700 XT, and RX 7600 break cover around Computex this year, though the first two cards might be kept under wraps for longer due to yield issues with the Navi 31 and Navi 32 dies.

There's even word that AMD has been working on an RX 7800 XTX to sway more gamers away from the RTX 4070 Ti, but the company has reportedly postponed the release until it can bring down the high rate of manufacturing defects seen in trial production runs. The purported specs for the new card include a Navi 31 GPU with 70 compute units (8,960 shader cores), 64 megabytes of Infinity Cache spread over four memory cache dies (MCDs), and 16 gigabytes of 21 Gbps GDDR6 memory connected over a 256-bit wide bus – all at a TBP of 300 watts.
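As a rough sanity check on those rumored figures, theoretical peak memory bandwidth follows from the per-pin data rate and the bus width, and the quoted shader count implies 128 shader cores per compute unit. The sketch below is only arithmetic on the numbers quoted above; the function names are illustrative and don't come from any AMD tool.

```python
def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Each pin moves `data_rate_gbps` gigabits per second; dividing the
    total by 8 converts gigabits to gigabytes.
    """
    return data_rate_gbps * bus_width_bits / 8


def shaders_per_cu(shader_cores: int, compute_units: int) -> float:
    """Shader cores per compute unit implied by a rumored spec line."""
    return shader_cores / compute_units


if __name__ == "__main__":
    # Rumored RX 7800 XTX figures: 21 Gbps GDDR6 on a 256-bit bus.
    print(peak_bandwidth_gbs(21, 256))  # 672.0 GB/s
    # 8,960 shader cores across 70 compute units.
    print(shaders_per_cu(8960, 70))     # 128.0
```

If the rumor holds, that works out to 672 GB/s of peak bandwidth, before accounting for any help from the Infinity Cache.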

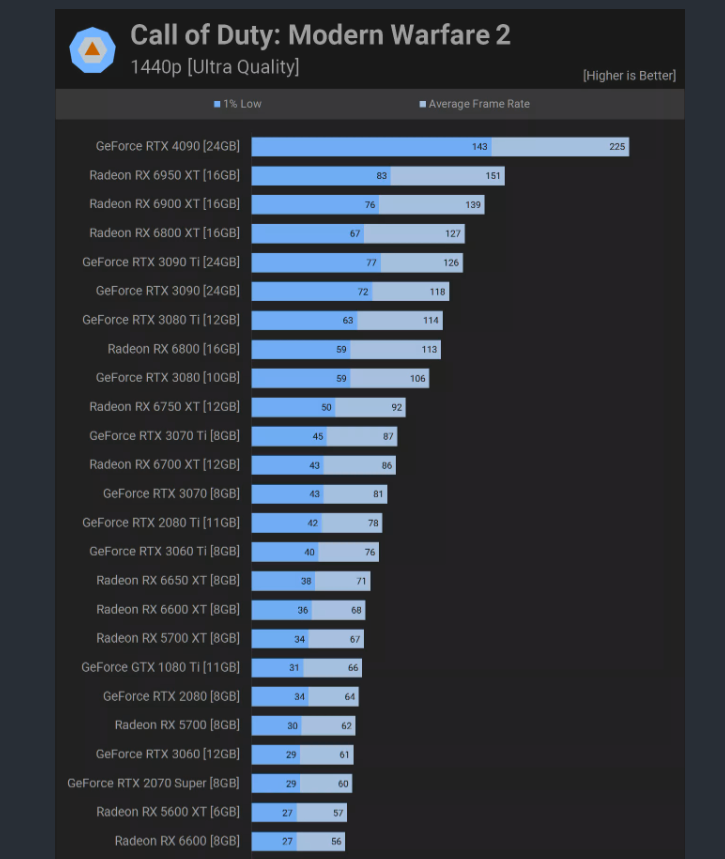

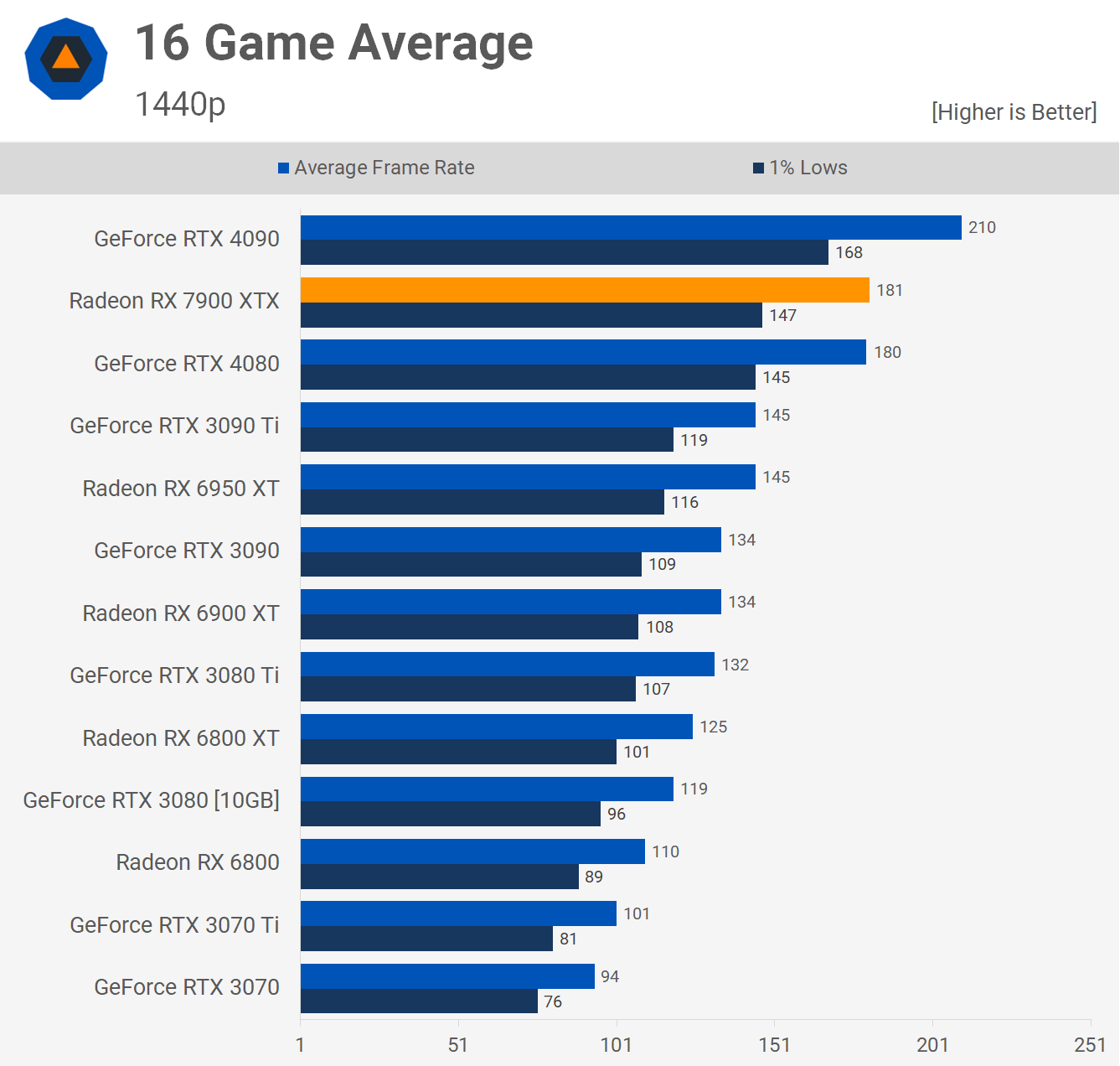

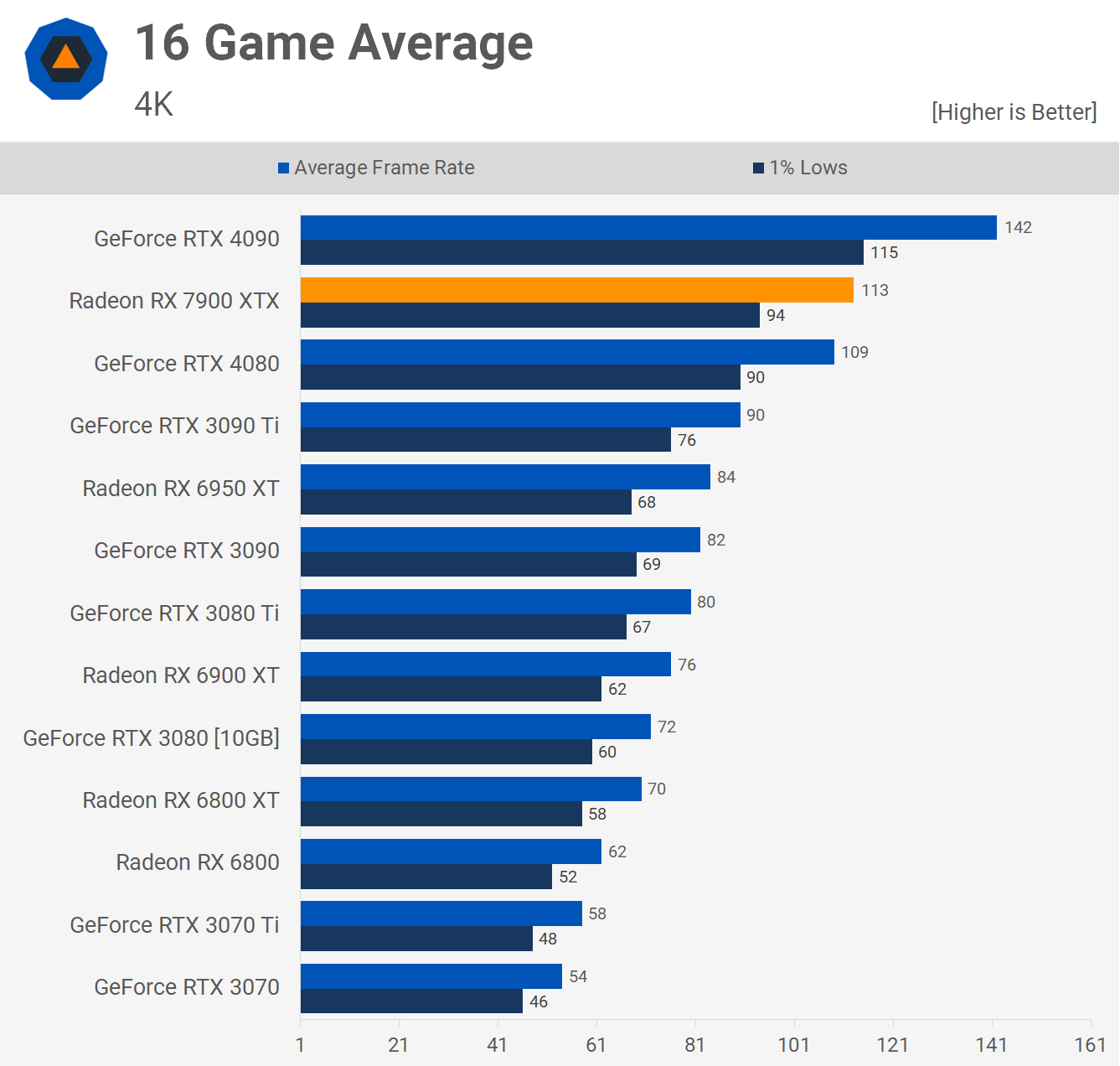

Moving lower down the stack, the RX 7800 XT is expected to slot somewhere between an RX 6900 XT and the RTX 4070 Ti in terms of rasterization performance. This card supposedly uses the full Navi 32 die, so it would pack a total of 60 compute units (7,680 shader cores). The MCD count, Infinity Cache size, and GDDR6 capacity are the same as on the RX 7800 XTX, but the TBP is expected to fall between 250 and 285 watts.

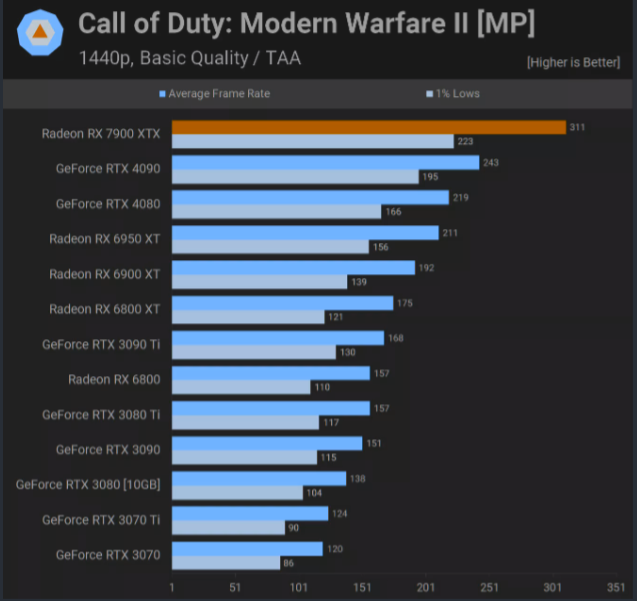

A cut-down version of the Navi 32 GPU will be utilized for the RX 7700 XT, which is reportedly set to go against Nvidia's upcoming RTX 4060 Ti. The latter card is all but confirmed to feature a modest eight gigabytes of VRAM, while the Team Red counterpart will have 12 gigabytes of GDDR6. Otherwise, the rest of the design specs include 54 compute units, 48 megabytes of Infinity Cache, and a TBP of 225 watts.
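The rumored figures for the three cards can be lined up with a little arithmetic. The sketch below assumes the 128 shader cores per compute unit implied by the numbers above (8,960 / 70 and 7,680 / 60) and an even split of Infinity Cache across MCDs (64 MB over four dies, so 16 MB each). The RX 7700 XT's shader and MCD counts are derived under those assumptions and are not part of the rumor itself.

```python
SHADERS_PER_CU = 128    # assumption: implied by 8,960 / 70 and 7,680 / 60
CACHE_PER_MCD_MB = 16   # assumption: 64 MB spread evenly over 4 MCDs

# Rumored figures as quoted in the article.
rumored = {
    "RX 7800 XTX": {"compute_units": 70, "infinity_cache_mb": 64},
    "RX 7800 XT": {"compute_units": 60, "infinity_cache_mb": 64},
    "RX 7700 XT": {"compute_units": 54, "infinity_cache_mb": 48},
}


def derive(spec: dict) -> dict:
    """Derive shader-core and MCD counts from a rumored spec entry."""
    return {
        "shader_cores": spec["compute_units"] * SHADERS_PER_CU,
        "mcds": spec["infinity_cache_mb"] // CACHE_PER_MCD_MB,
    }


for name, spec in rumored.items():
    d = derive(spec)
    print(f"{name}: {d['shader_cores']:,} shader cores, {d['mcds']} MCDs")
```

Under these assumptions the RX 7700 XT would land at 6,912 shader cores and three MCDs, which lines up with its smaller 48 MB cache.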

Also read: GPU Pricing Update April 2023 – Is the Nvidia RTX 4070 Another Flop?

Understandably, AMD isn't sure how to price these GPUs without eating too much into its margins while still making them worthwhile for its AIB partners to integrate into custom cards. Gamers so far are rejecting Nvidia's mid-range Ada offering, and the RTX 4070 Ti is seeing lower demand even at $815. At the same time, retailers have yet to clear RX 6000 series stock even after AMD slashed prices on cards like the RX 6800 XT and RX 6950 XT.

Then there's the RX 7600, which may also be revealed at Computex and could be great value for many people who are still gaming at 1080p. The upcoming card has the potential to offer RX 6750 XT levels of rasterization performance, but the limited VRAM capacity means it could have trouble selling even at $300.

https://www.techspot.com/news/98493-amd-rx-7800-xt-7700-xt-could-get.html