What just happened? Crysis Remastered arrives tomorrow (September 18), and while its recommended specs are very generous, the game's top graphics setting, called 'Can it run Crysis?', is as demanding on a PC's hardware as the notorious original game was. According to one of the developers, "there is no card out there" capable of running the mode at 4K and 30fps.

The excitement that followed Crytek's announcement of Crysis Remastered in April turned to disappointment after the fugly trailer arrived. Responding to the criticism, the company pushed the July release date back by two months to ensure the remaster met fans' expectations. Judging from the latest comparison video (top), it may have succeeded.

Earlier this month, Crytek released the game's minimum and recommended PC specs; the latter asks for just a GeForce GTX 1660 Ti / AMD Radeon Vega 56, paired with an Intel Core i5-7600K or higher / AMD Ryzen 5 or higher.

The company also revealed that the game's highest graphical setting would be called 'Can it run Crysis?', a nod to the long-running meme that arose after the original's 2007 release. Few PCs at the time could handle the shooter, especially at higher settings, and hardware continued to struggle with it until just a few years ago.

> Today's post is dedicated to our PC community!
>
> We want to show you, for the very first time, an in-game screenshot using the new "Can it Run Crysis?" Graphic mode, which is designed to demand every last bit of your hardware with unlimited settings - exclusively on PC!
>
> Crysis (@Crysis), September 6, 2020

Speaking to PC Gamer, Crysis Remastered project lead Steffen Halbig said the 'Can it run Crysis?' mode brings "unlimited view distances. No pop ups of assets, and no LoD changes anymore." That all sounds like it will push graphics cards to their limits; Halbig went as far as to claim, "in 4k, there is no card out there which can run it [the game] in 'Can it Run Crysis?' mode at 30 FPS."

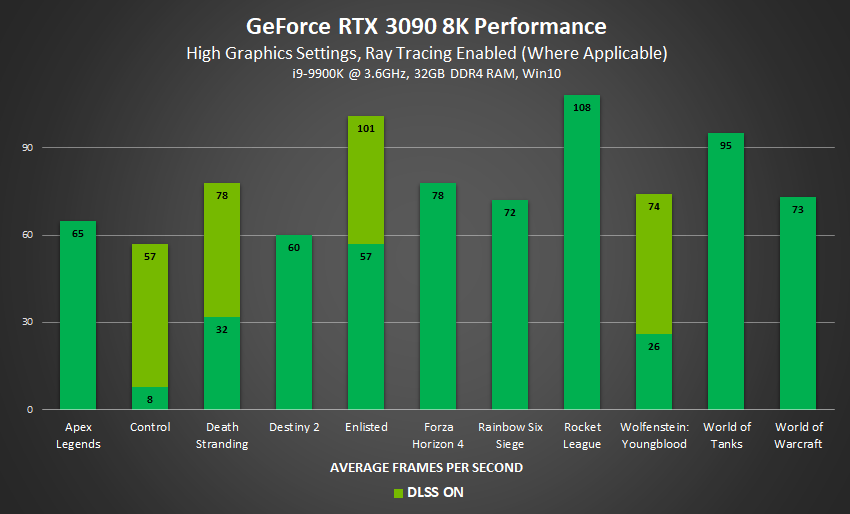

That's quite a bold statement, though his use of "out there" likely refers to cards that predate Nvidia's Ampere generation, as the RTX 3080 only launched today. Even the punishing Microsoft Flight Simulator reached 40 fps at 4K on high quality in our tests. The beastly RTX 3090, meanwhile, can run Forza Horizon 4 at 78 fps on high graphics settings in 8K, according to Nvidia.

Even if Ampere is able to pass 30 fps in 'Can it run Crysis?' mode at 4K, the mode is obviously going to crush lesser cards and could become a new benchmark for testing GPUs.

Crysis Remastered lands on the Epic Games Store on September 18.

https://www.techspot.com/news/86785-crysis-remastered-dev-game-top-graphics-setting-no.html