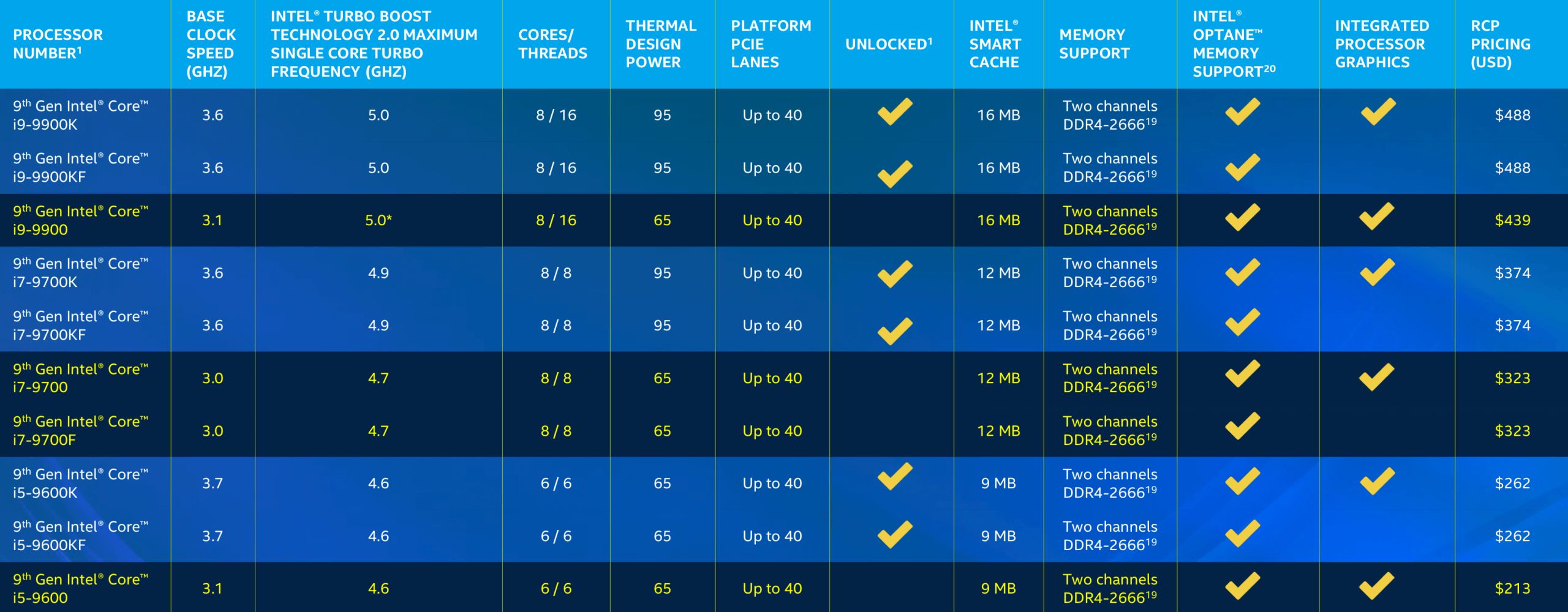

In brief: Intel's belated 9th-gen lineup, also known as Coffee Lake Refresh, will see Intel bring up to eight cores to desktops and laptops. Support for denser DRAM, solder TIM, improved Wi-Fi, Turbo Boost for the i3 models, plus the new F and KF SKUs round out the notables. The chips should be compatible with current 300-series motherboards.

Intel's Coffee Lake Refresh, which makes up its 9th-gen processor line, has been a long time coming. Intel rolled out the i9-9900K and its i7 and i5 K-SKU brethren late last year, but that launch left out most of the mid-range and low-end models. As of now, Intel's 9th-gen desktop lineup is complete, arriving alongside the new H-series mobile parts that power new 6-core laptops from multiple vendors, with 8-core models soon to come.

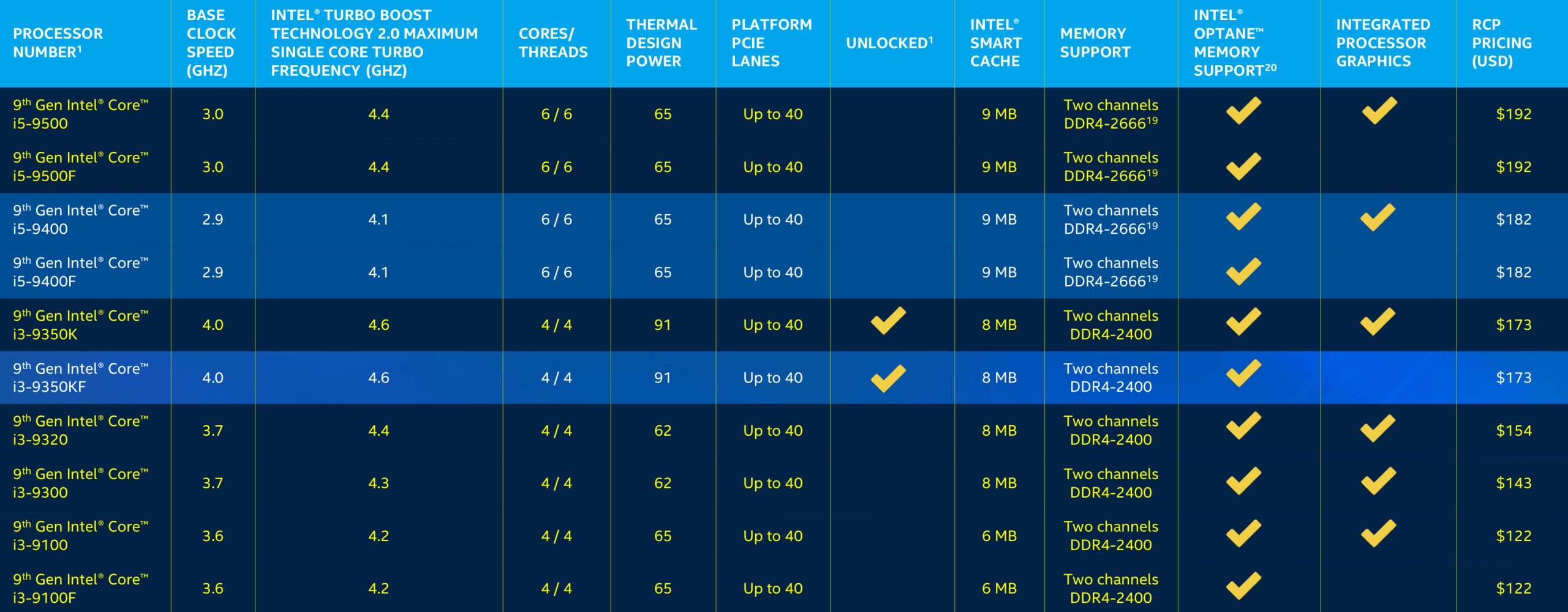

As with the i9-9900K, Intel is fleshing out its 9th-gen lineup with chips based on its 14nm++ manufacturing process. Being another extension of Intel's Skylake architecture, there isn't a wealth of new features. Aside from higher clocks within the same TDP envelope that come with refined silicon, there are things like solder TIM on the K-series. One point of interest is the inclusion of Turbo Boost support for Core i3 chips. This is undoubtedly meant to bolster Intel's defense in the budget segment, where AMD has posed a considerable threat to Intel's market share with its Ryzen 3 and APU offerings.

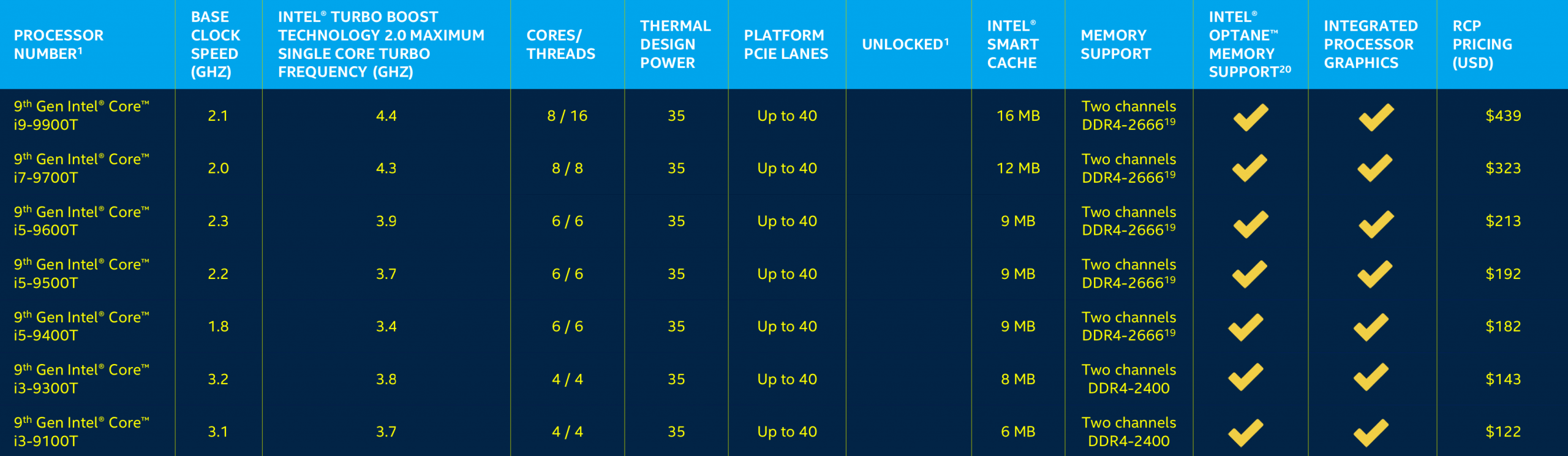

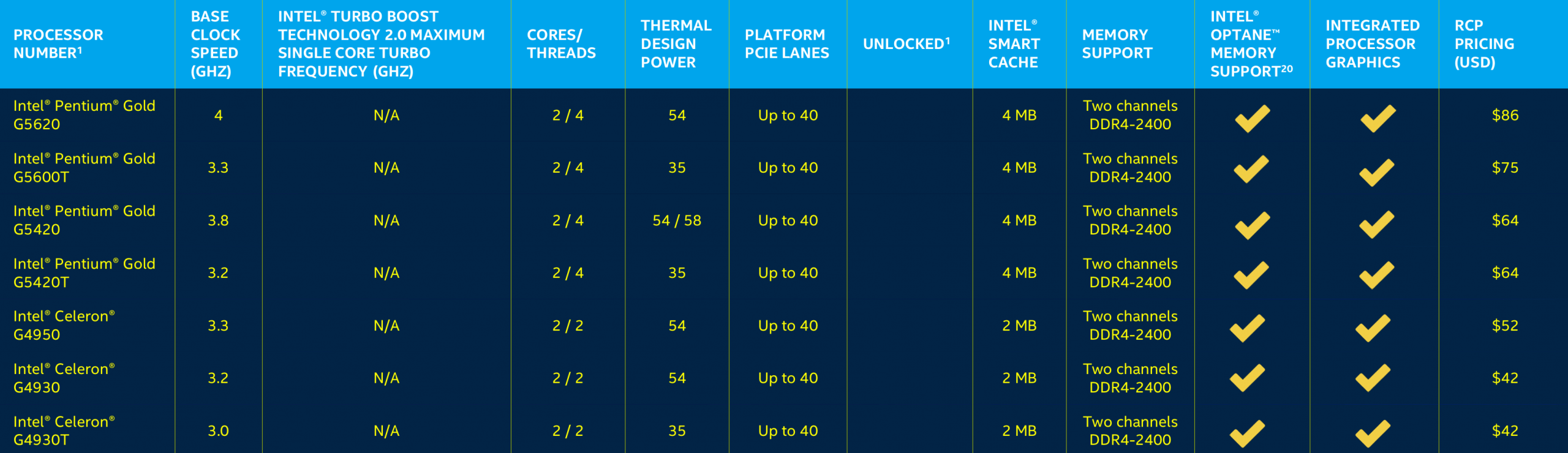

Intel is presumably hoping its full 9th-gen family will help stave off the encroaching AMD and its looming 7nm Ryzen 3000-series. Alas, that's probably a job better suited for Intel's 10nm chips. Nevertheless, Intel's 9th-gen lineup will drop into existing 300-series boards (likely requiring a BIOS update) and will offer more 8-core models, support for up to 128GB of RAM, improved Wi-Fi, and Optane Memory support extended to the entry-level Pentiums and Celerons.

The 9th-gen series will see Intel continue its well-known price and feature segmentation and introduce its newest "F" and "KF" suffixes. These models are stripped of integrated graphics, an approach Intel tested the waters with somewhat recently. Pentiums and Celerons are now the only dual-core models, as the i3 has gained two more cores in recent years, and Intel has extended Turbo Boost support to the value-minded i3 chips as well.

Intel states the new processor family is available from retailers immediately; however, color us skeptical given Intel's pervasive 14nm shortages. We'll be keeping an eye on price and availability.

https://www.techspot.com/news/79772-intel-9th-gen-desktop-lineup-here-finally-fleshes.html