Today we're getting back to what we love doing, big benchmark comparisons, and we're starting with the GeForce RTX 3080 12GB and Radeon RX 6900 XT. If you're wondering why these two, it's because they currently occupy roughly the same price point. Admittedly, graphics card pricing is highly volatile right now, and we expect things to keep moving around in the weeks and months to come, hopefully for the better...

But as it stands right now, the typical asking price for the GeForce RTX 3080 12GB is $1600 to $1800 in the US, while the Radeon RX 6900 XT goes for around $1500 to $1600. In other regions, however, such as Australia, the two are closely matched on price.

So if you have a large wad of cash you'd like to part with in exchange for a graphics card, which one should you buy?

To answer that question we've got a new Asus RTX 3080 TUF Gaming OC 12GB graphics card on hand and we'll be comparing it to the AMD Radeon RX 6900 XT. To make this comparison as fair as possible, the TUF Gaming will be run at the official clock specifications, so we'll be showing the stock performance of both products.

For all tests we used our Ryzen 9 5950X test system with 32GB of DDR4-3200 CL14 dual-rank, dual-channel memory. Both GPUs were tested at 1080p, 1440p, and 4K across 50 games using Windows 11. The driver versions used were Radeon Adrenalin 22.2.1 and GeForce Game Ready 511.79 as these were the latest available drivers when we started testing about a week ago.

We're not going to go over the data for all 50 games individually, as that would take all day and gets fairly repetitive. Instead, we'll take a closer look at the results for about a dozen of them, and then see how these two GPUs compare head-to-head across all games in a single graph.

Benchmarks

First up we have the new Dying Light 2 survival horror title. For those of you targeting lower resolutions, both GPUs pushed well over 100 fps at 1080p and around 100 fps at 1440p. The RTX 3080 12GB was 6% faster at 1080p and 10% faster at 1440p, so a reasonable performance advantage. Where you're really going to notice that gap though is at 4K, where the GeForce GPU offered a 16% performance bump, producing a ~60 fps experience.

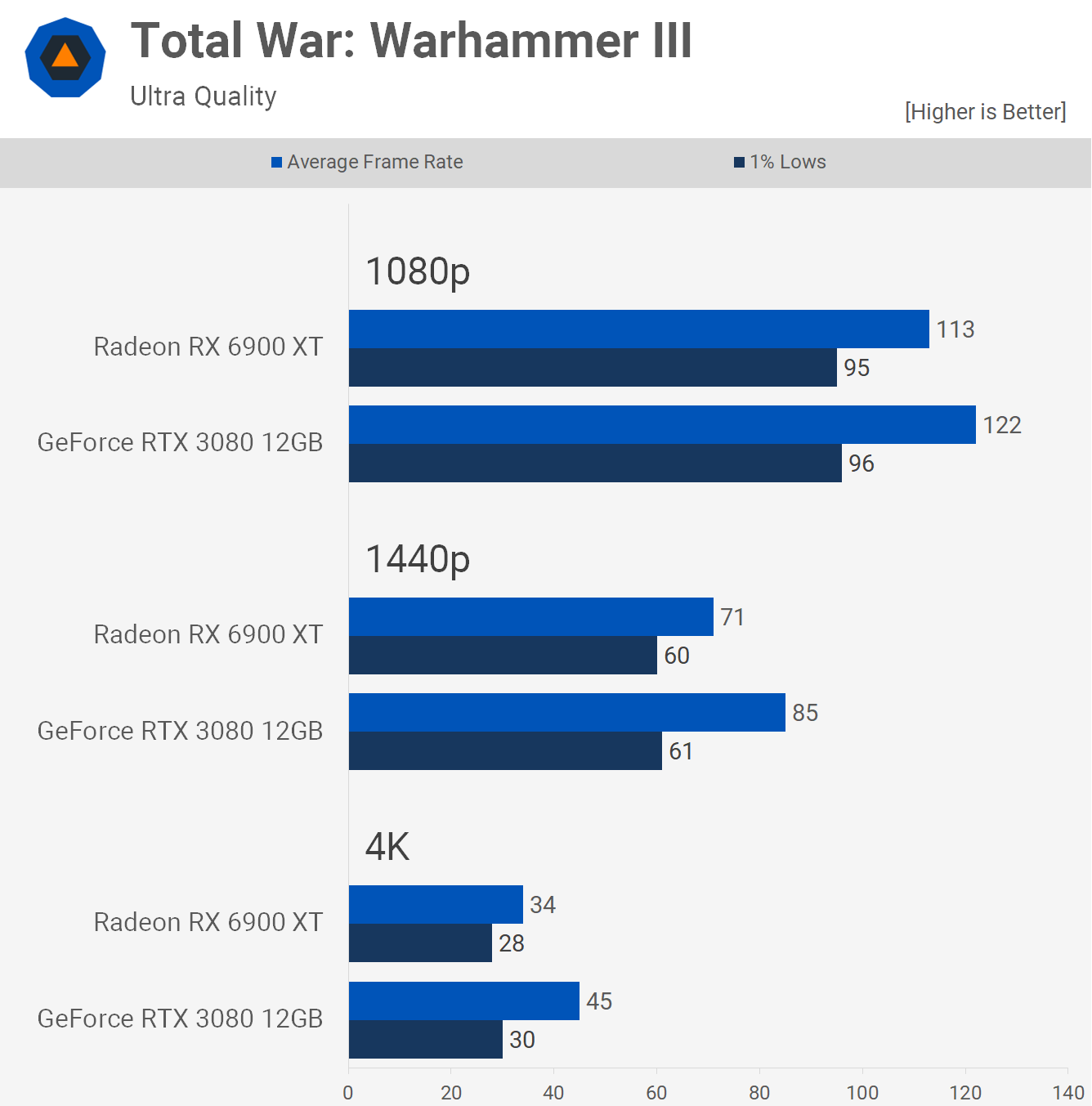

For testing Total War Warhammer III we're running the built-in benchmark using the Battle scene and this is another new game that plays better on Nvidia hardware.

Here the RTX 3080 12GB was 8% faster at 1080p, 20% faster at 1440p, and a massive 32% faster at 4K. This is an easy win for the GeForce GPU and it will be interesting to see if AMD manages to claw back any performance with future driver releases.

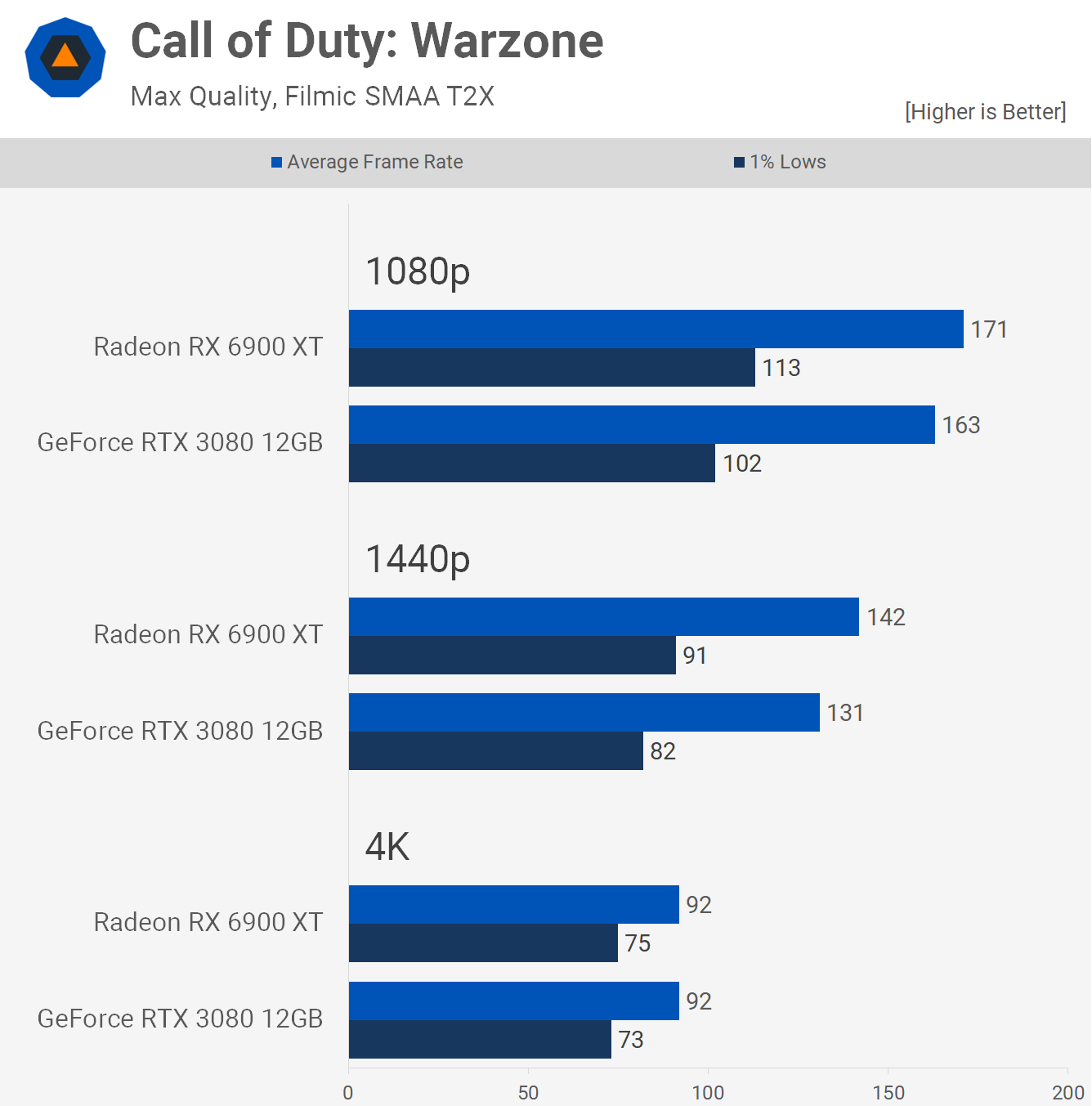

Next we have the ever-popular Call of Duty Warzone, and for this one, like most of the games tested, we're using the highest quality settings. Despite that, both GPUs averaged well over 144 fps at 1080p, and this time the Radeon GPU came out on top, though only by a small 5% margin.

Interestingly, that advantage expanded slightly to 8% at 1440p, probably because the game becomes less CPU bound. At 4K, where the Ampere architecture is often better utilized, the 3080 comes back to match the 6900 XT at 92 fps.

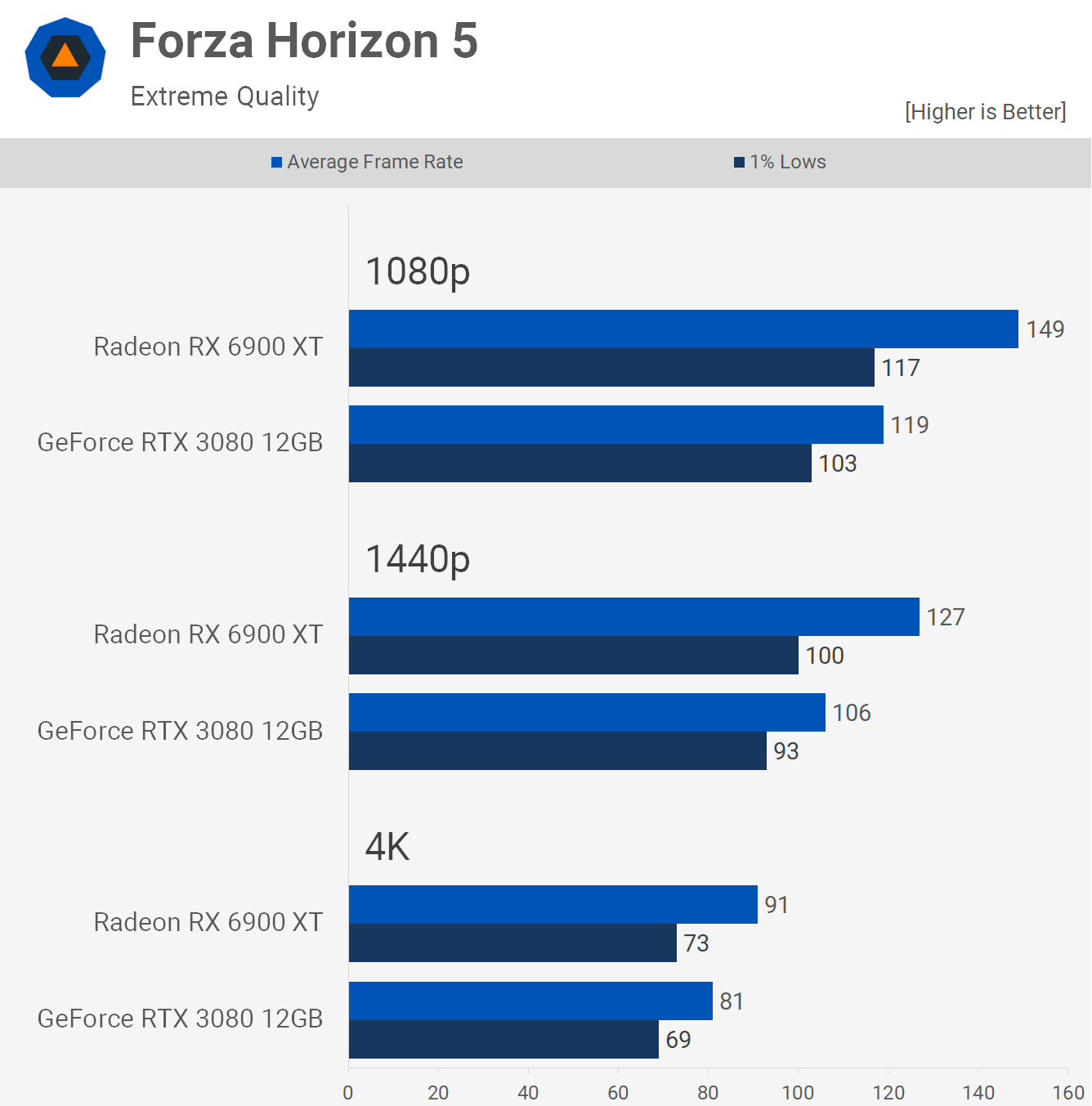

Moving on to Forza Horizon 5, the 6900 XT pulled well ahead at 1080p with an impressive 149 fps on average making it 25% faster. That margin was reduced slightly at 1440p, but even so the Radeon GPU was still 20% faster, which is a significant margin.

AMD was able to maintain its advantage even at 4K, and the extra 12% you get from the 6900 XT will be noticeable to some gamers.

Another new game we have for testing is God of War and this is a gorgeous looking game. Despite the breathtaking visuals, it runs really well on modern high-end PC hardware, even at 4K where the 3080 was good for 92 fps on average, making it 18% faster than the 6900 XT.

That's a big win for Nvidia, though the 6900 XT still delivered highly playable performance at this extreme resolution with 78 fps.

The GeForce GPU provided stronger performance at 1080p and 1440p, though the margins here are heavily reduced to single-digit figures and both rendered well over 100 fps.

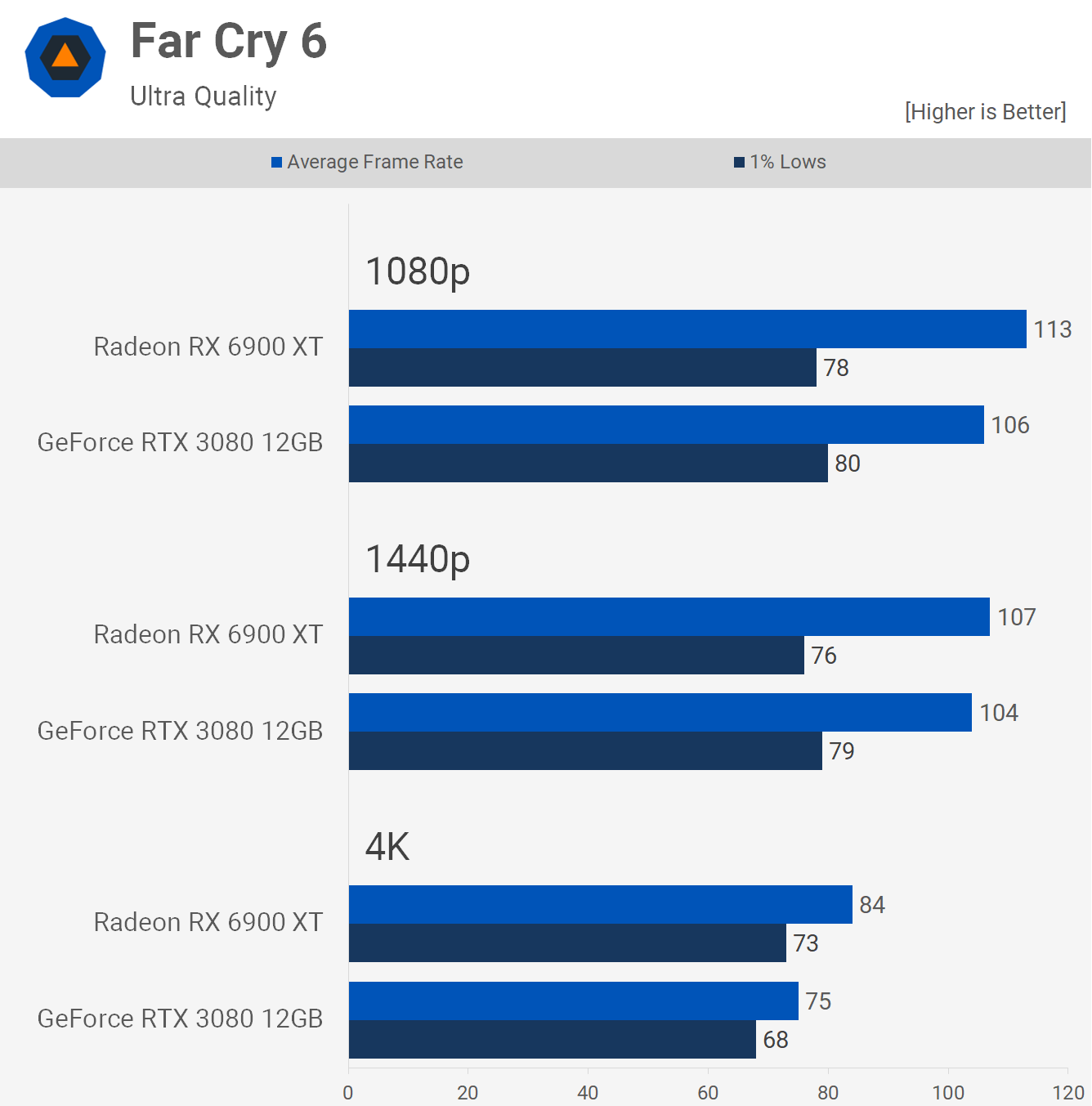

Next we have Far Cry 6, and unfortunately this lightly threaded game is very easy to CPU bottleneck at lower resolutions when using high-end GPUs, as seen here. This is why the 1080p and 1440p results are so similar, with only a very small fps gain when dropping from 1440p to 1080p.

Moving to 4K, where the game starts to become GPU bound, the 6900 XT was 12% faster with 84 fps on average compared to 75 fps with the RTX 3080. Needless to say, both produced highly playable performance.
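The percentage margins quoted throughout this article are simple fps ratios. Here is that arithmetic applied to the 4K averages given above for God of War (92 vs 78 fps) and Far Cry 6 (84 vs 75 fps). This is a minimal sketch, and the helper name `percent_faster` is our own, used purely for illustration:

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than card B, in percent.

    Card B is the baseline, so the result is (A / B - 1) * 100.
    """
    return (fps_a / fps_b - 1.0) * 100.0


# 4K averages quoted in the God of War and Far Cry 6 sections
god_of_war = percent_faster(92, 78)  # RTX 3080 12GB vs RX 6900 XT
far_cry_6 = percent_faster(84, 75)   # RX 6900 XT vs RTX 3080 12GB

print(f"God of War: RTX 3080 12GB is {god_of_war:.0f}% faster")
print(f"Far Cry 6: RX 6900 XT is {far_cry_6:.0f}% faster")
```

The margin depends on which card is the baseline. The 6900 XT being 12% faster in Far Cry 6 means the 3080 is roughly 11% slower, not 12%.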

F1 2021 was tested using the ultra high quality preset which enabled ray tracing when supported, so both the RTX 3080 12GB and 6900 XT were tested with RT effects enabled. As we've found in the past with this title, the 1% lows are lower with Radeon GPUs, though the difference is hardly noticeable when gaming.

At 1080p and 1440p it's practically impossible to tell which GPU you're using. The 3080 was 12% faster at 4K, which is a noteworthy improvement, and I'm sure some gamers will be able to spot or feel the difference, but overall they are very similar.

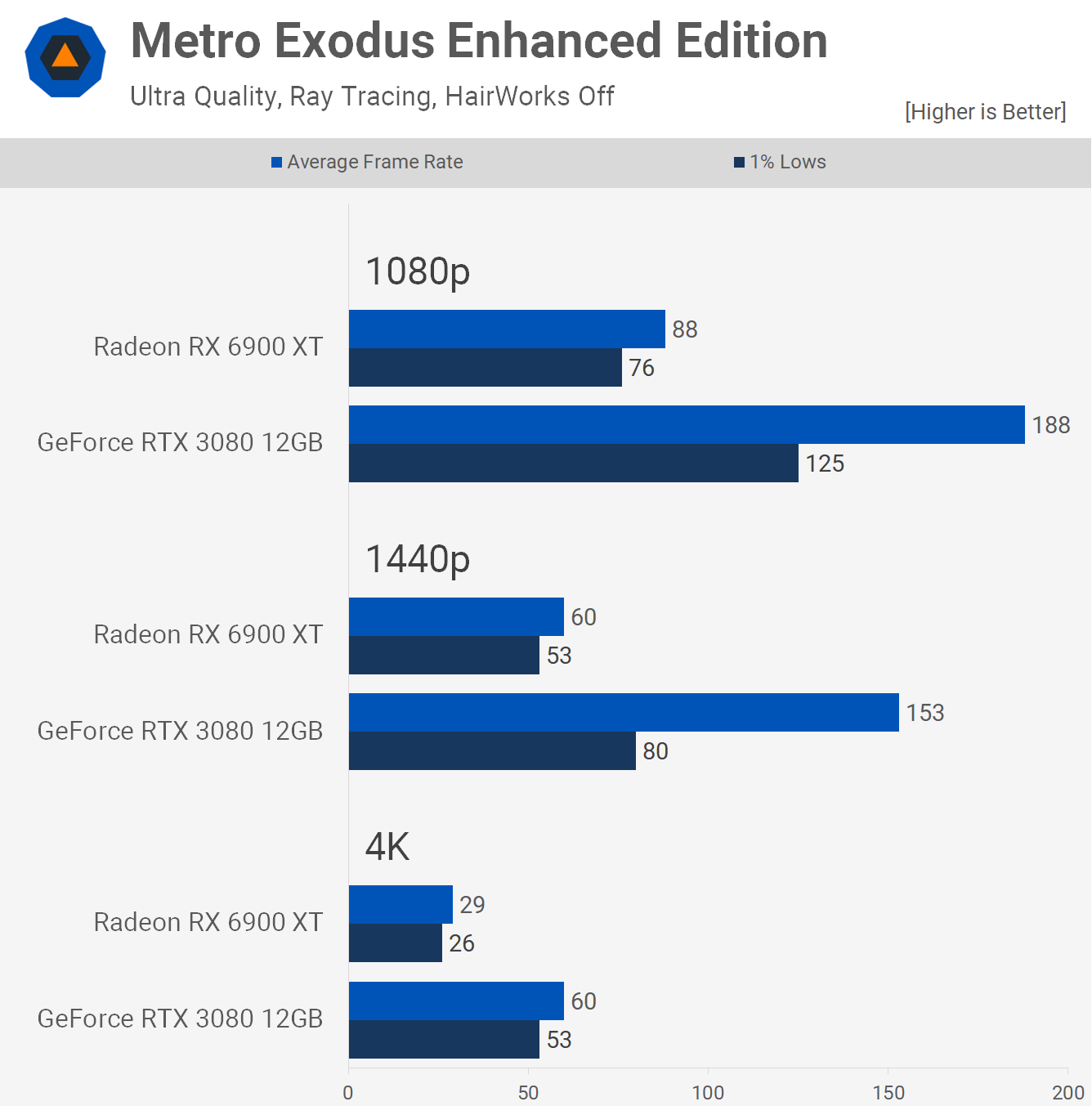

Metro Exodus Enhanced Edition was designed to showcase RTX features and was developed before AMD's RDNA2 architecture was available, so unsurprisingly it doesn't run very well on Radeon GPUs. This version of the game only works on GPUs that support hardware-accelerated real-time ray tracing, and we're testing with the ultra preset enabled.

The 6900 XT is capable of playable performance at 1080p and 1440p, but at 1440p you're looking at 155% greater performance with the RTX 3080. Then for those wanting to play this title at 4K, you can get around 60 fps with the 3080, while the 6900 XT falls short of even 30 fps.

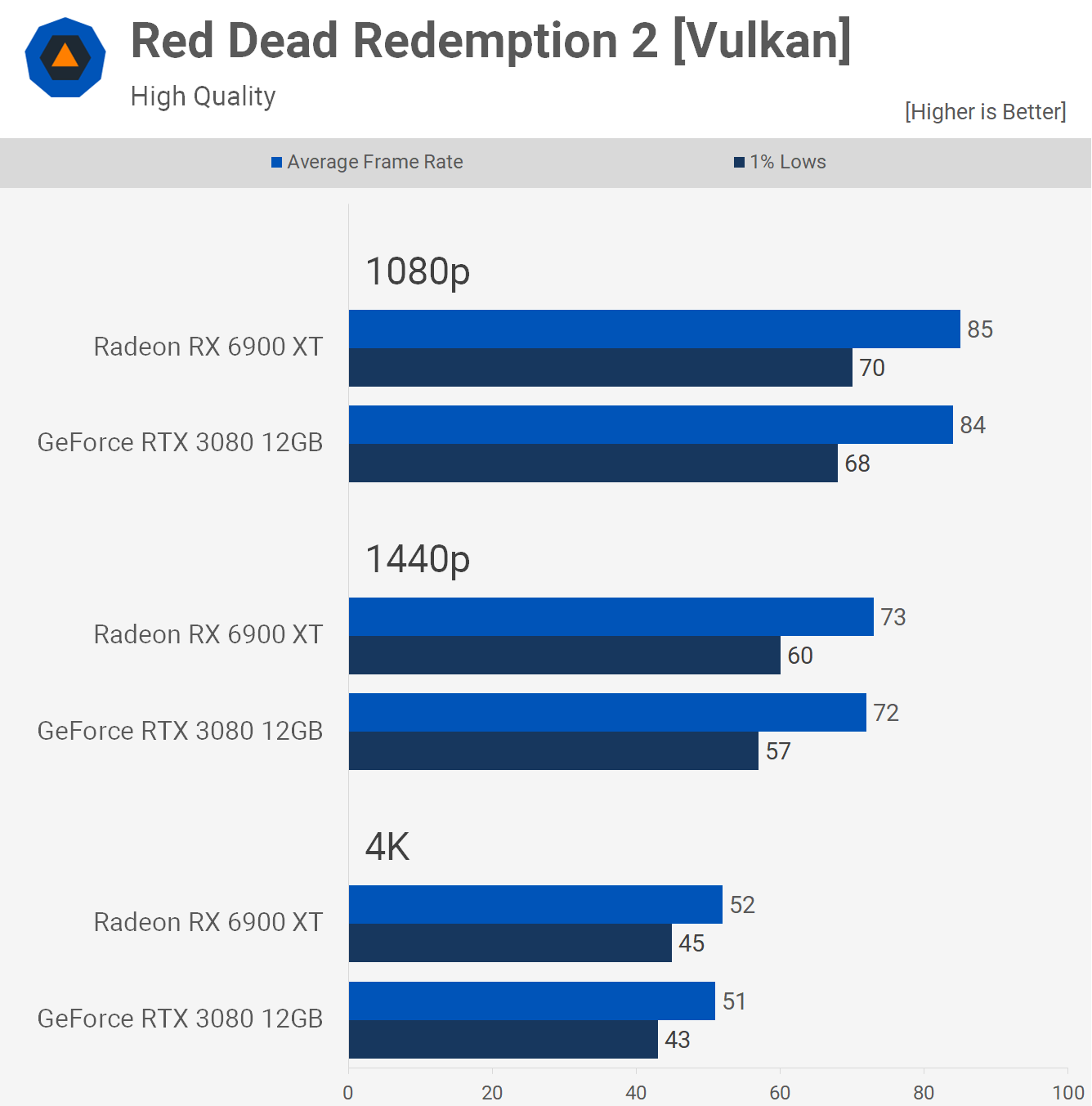

Next we have Red Dead Redemption 2 and this title has been well optimized for both AMD and Nvidia hardware. As a result we're looking at almost identical performance.

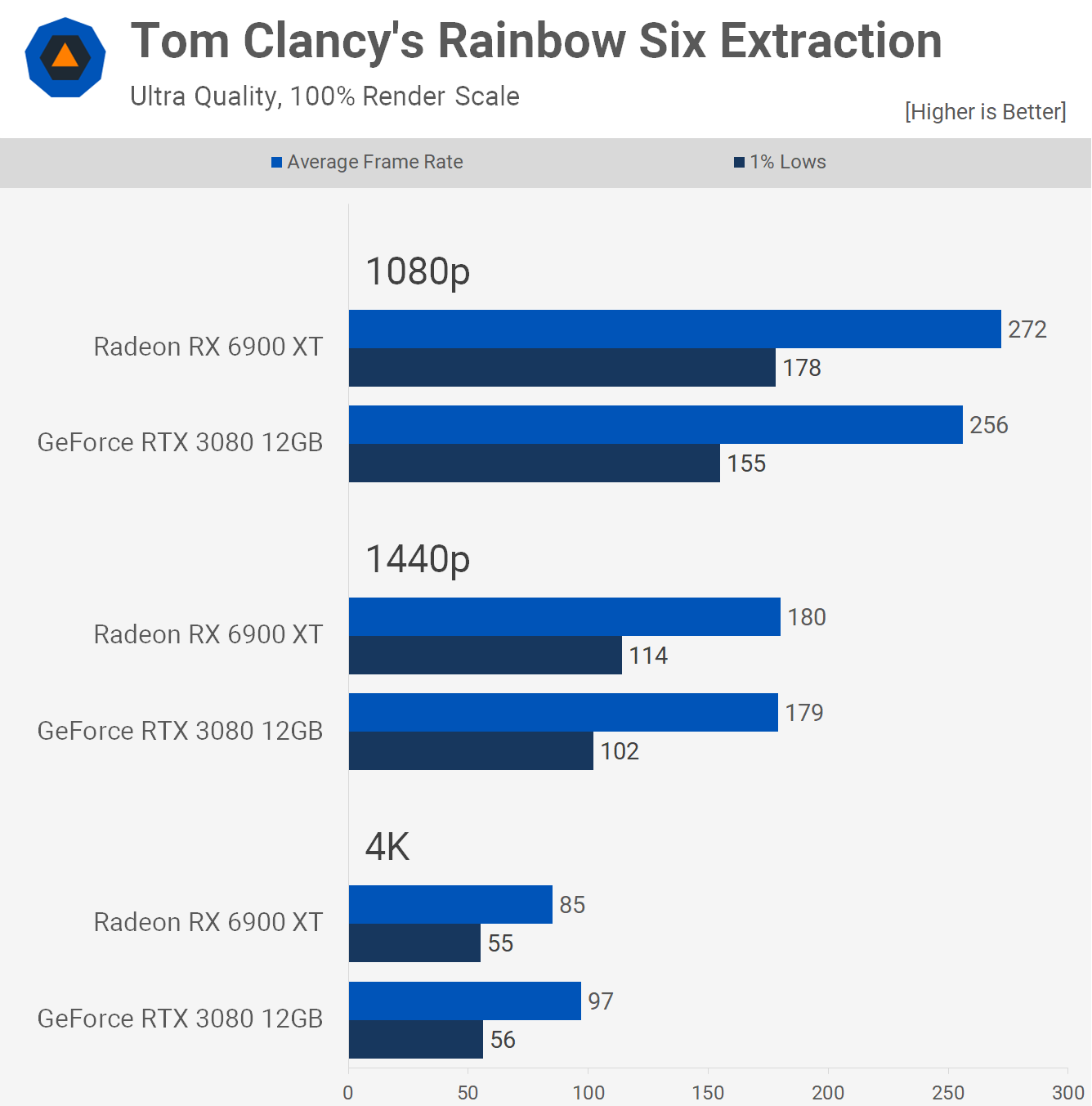

Another newly released game, Rainbow Six Extraction, is also well optimized for AMD and Nvidia hardware. The 6900 XT enjoys a small performance advantage at 1080p, performance is similar at 1440p, and the GeForce GPU pulled ahead by a reasonable margin at 4K, though the 1% lows were comparable.

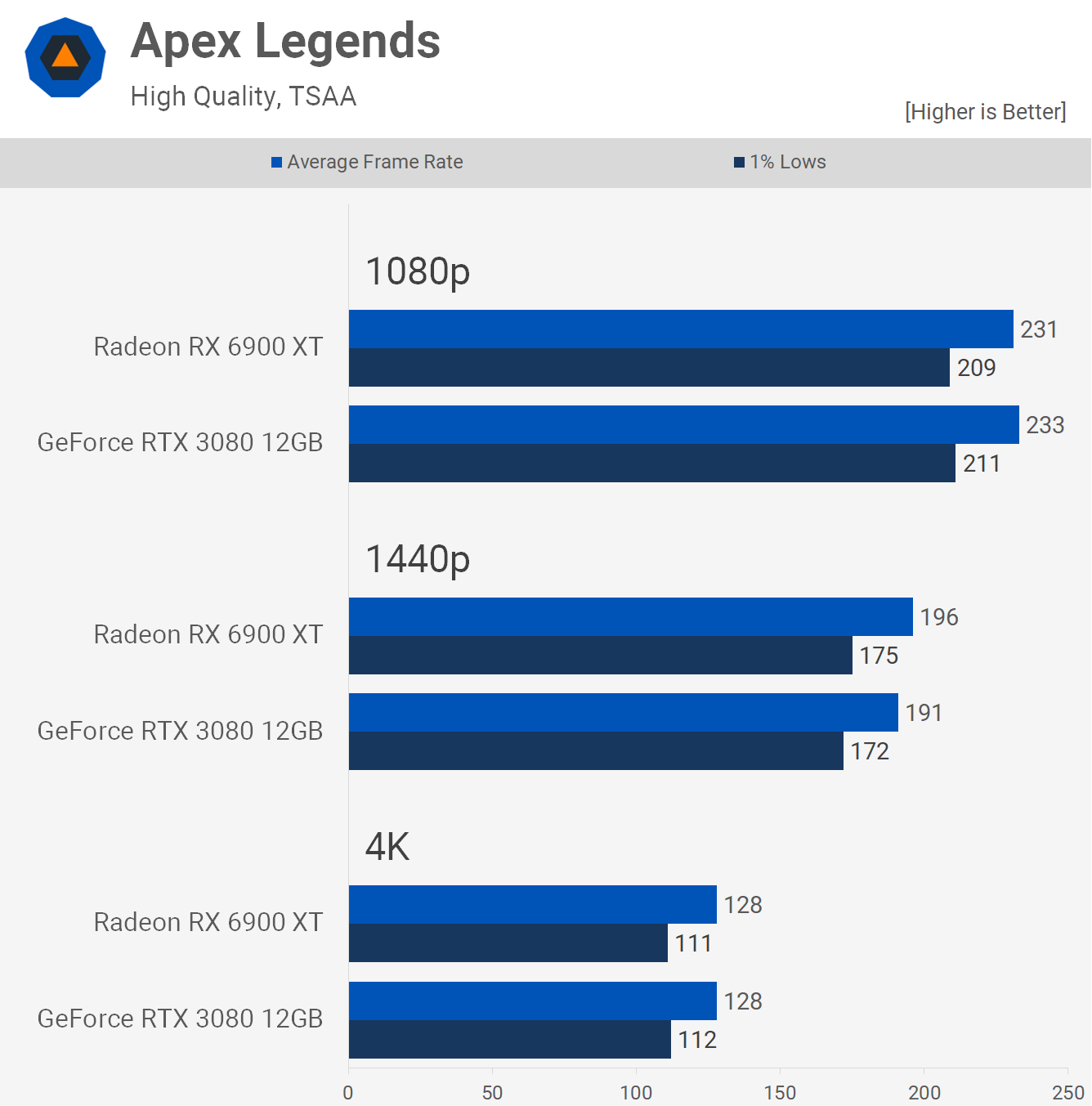

We're also looking at virtually identical performance between these two GPUs in Apex Legends, and with the highest possible quality settings in place, both easily pushed up over 100 fps at 4K.

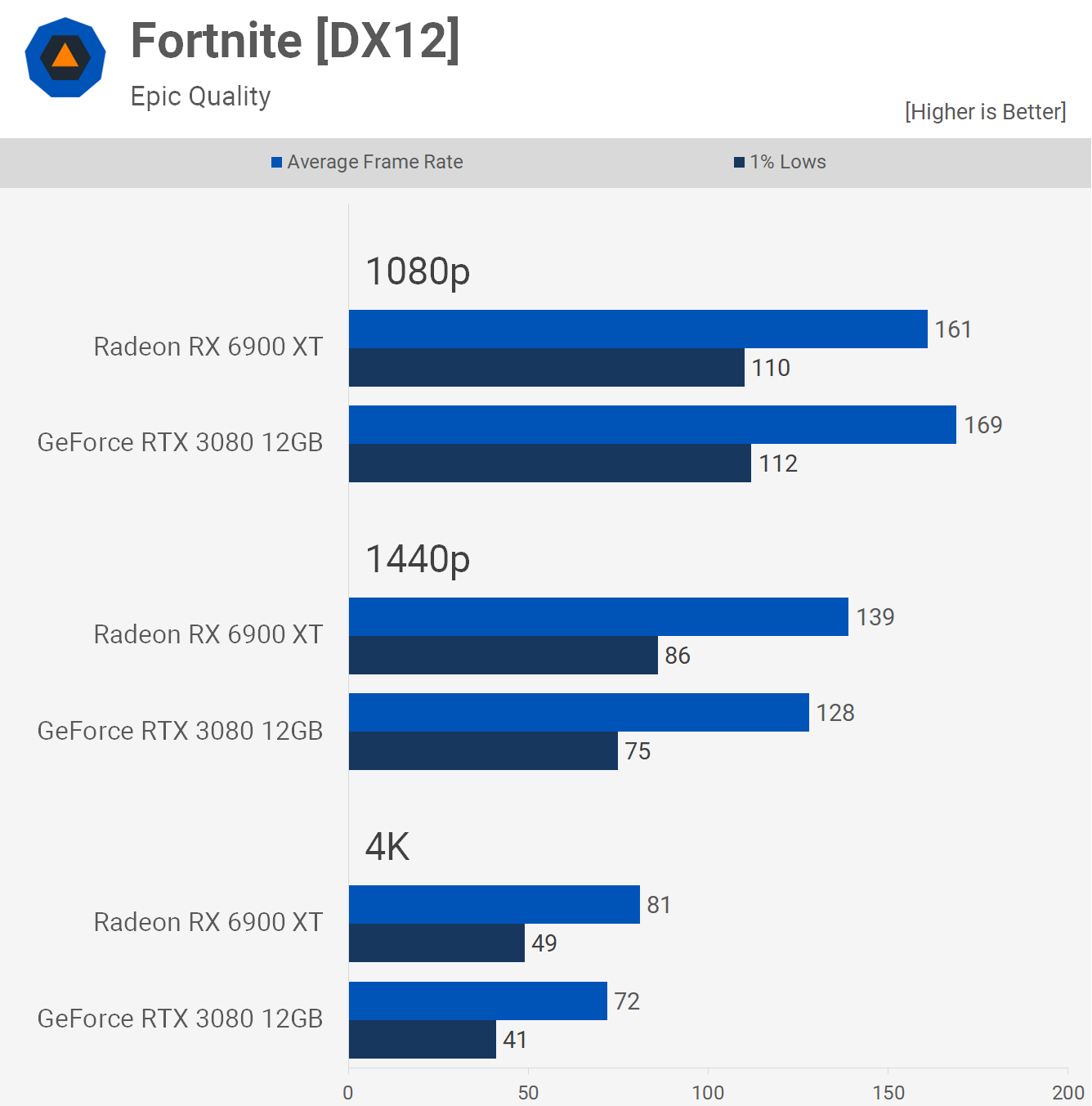

The Fortnite results were a bit unexpected, as the 6900 XT was actually slower than the 3080 at 1080p, yet faster at 1440p and 4K. Admittedly the margins aren't huge in either direction, though the 13% advantage at 4K isn't insignificant. For those of you using more competitive quality settings, either of these GPUs should be able to push well over 100 fps at 1080p.

The 3080 can also benefit from DLSS support, though we don't include those numbers as they'd need to be accompanied by a detailed visual analysis. Still if you play a lot of Fortnite, DLSS would be a selling point for the RTX 3080 12GB.

Last up, here's a look at Cyberpunk 2077 and please note for this game we're using the second highest quality preset, without any ray tracing effects enabled. Upscaling techniques such as DLSS and FSR haven't been enabled, because we would have to judge visual quality for each GPU at each tested resolution to make it apples to apples.

Under these test conditions, the 6900 XT is 16% faster at 1080p and then 11% faster at 1440p, while the margins are neutralized at 4K with both GPUs rendering ~45 fps. Ideally for gaming at 4K you'll want to enable DLSS or FSR, or lower the quality settings further.

50 Game Average

Based on the dozen or so games we just looked at, the RTX 3080 12GB and 6900 XT appeared evenly matched for the most part. But before we draw any performance related conclusions, let's take a look at the data across all 50 games tested...

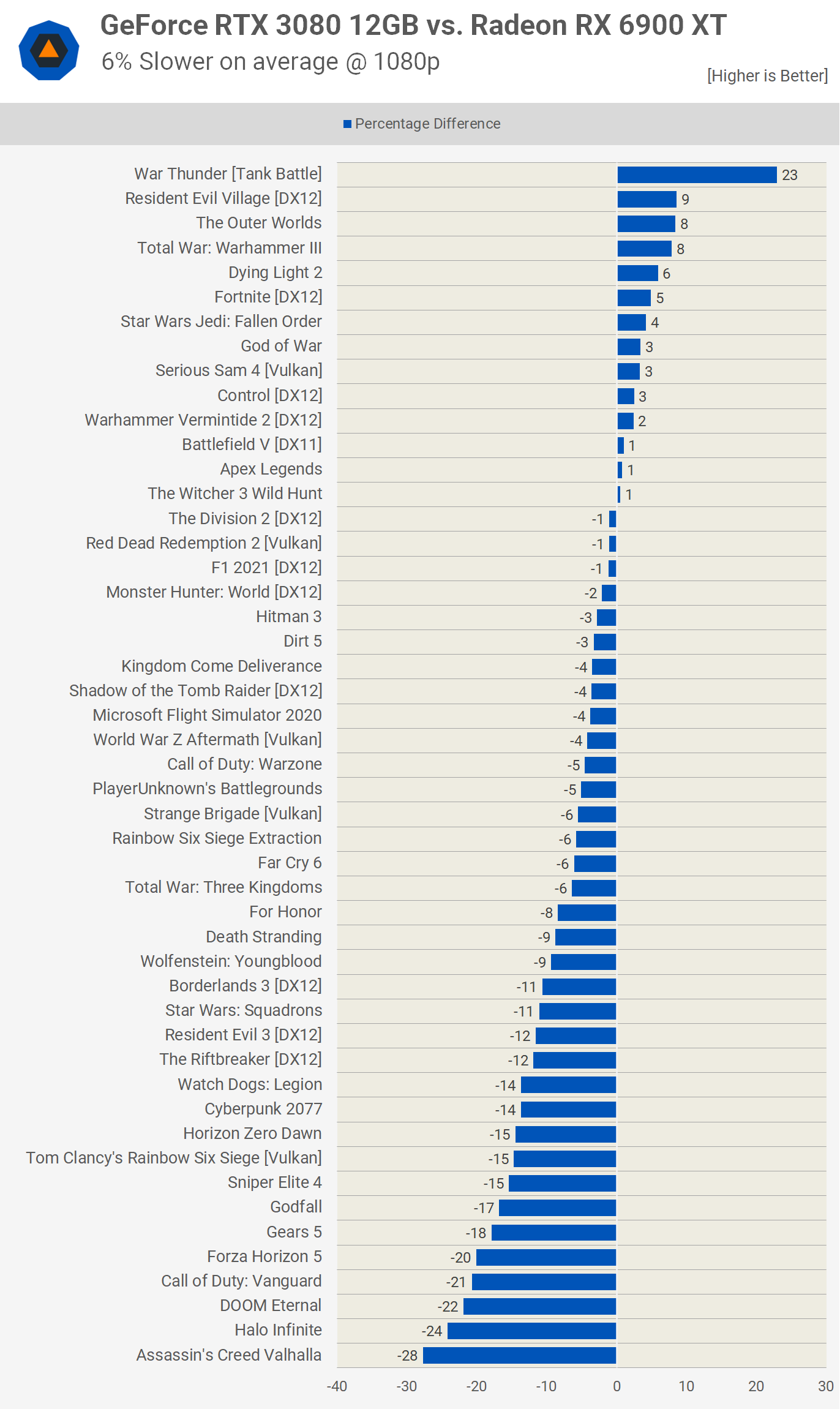

Starting with the 1080p results, the RTX 3080 12GB was just 3% slower on average, meaning the 6900 XT was typically faster. There were just 7 games where the 3080 was faster by a 5% margin or more, and 25 games where it was slower by a 5% margin or more.

Of course, the extreme outlier here is Metro Exodus Enhanced Edition and not all of you are going to want to play that game, or are even interested in ray tracing at this point in time.

Removing that outlier doubles the margin seen previously, and now the RTX 3080 12GB is 6% slower than the 6900 XT. That's still a rather small margin, suggesting performance will typically be very similar between the two GPUs, though there were a handful of games where the 3080 was slower by a 20-30% margin.

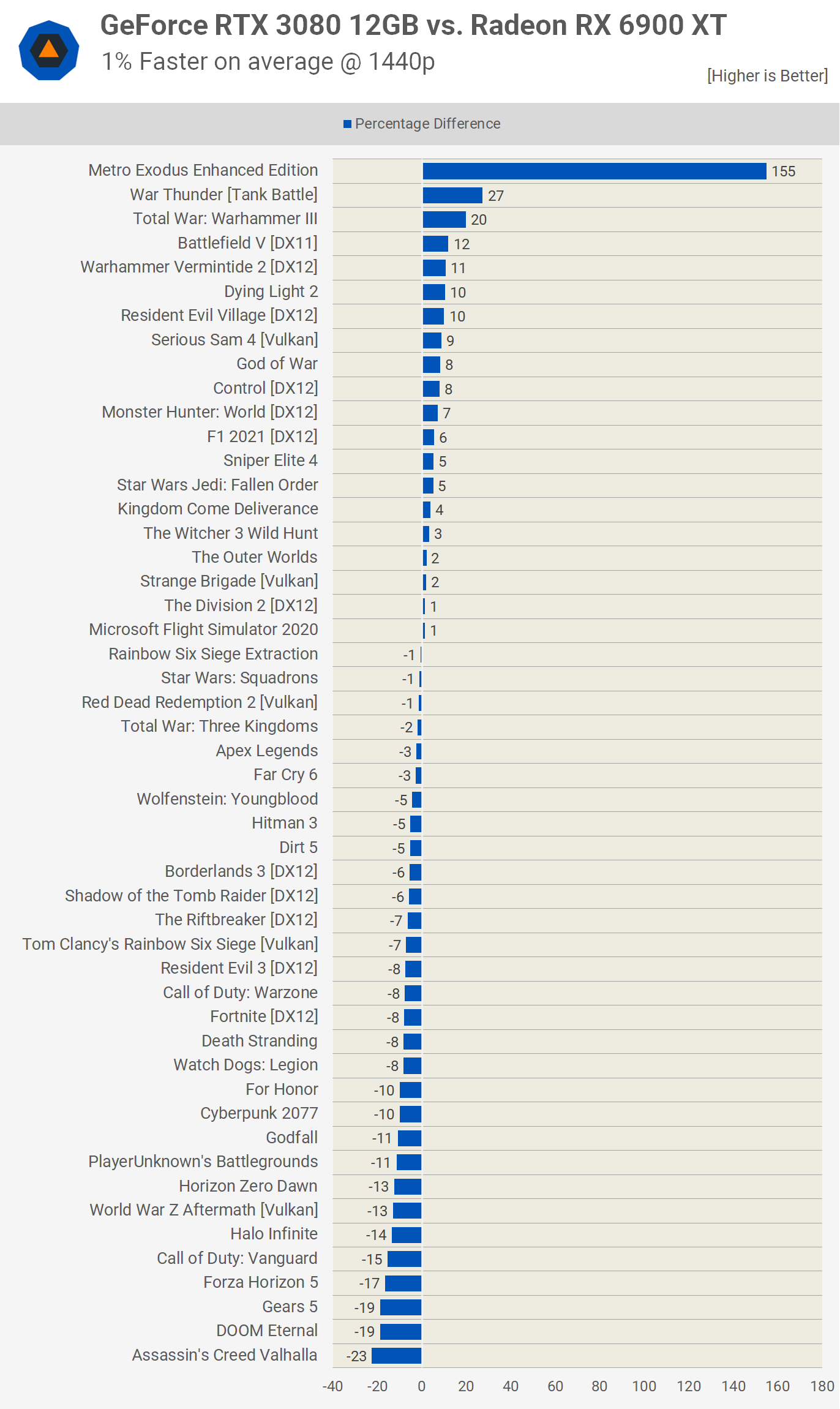

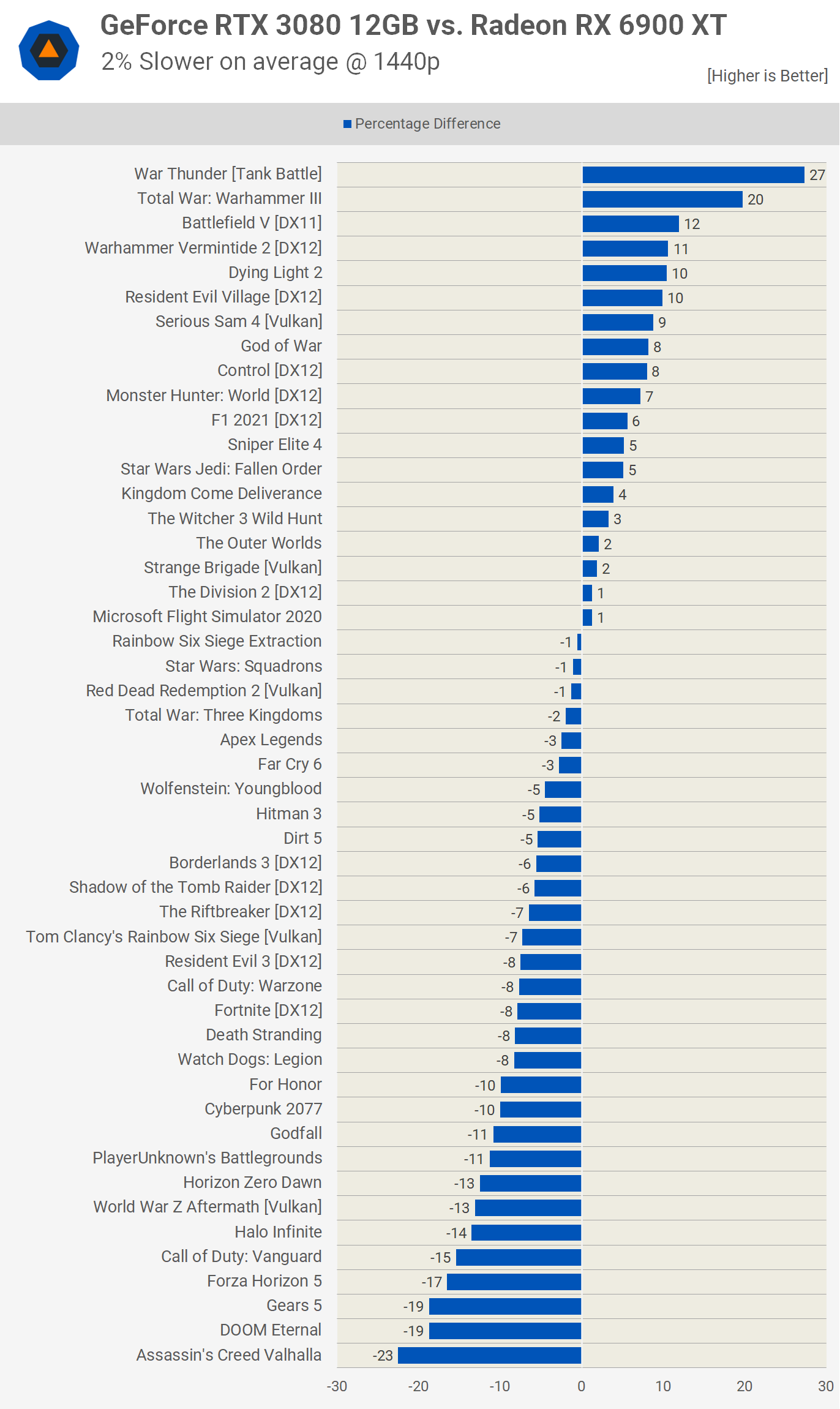

Moving up to 1440p reduces the margin further, and in fact the 3080 is now 1% faster on average when we include Metro Exodus Enhanced results. Removing Metro swung the margins around and now the 3080 is 2% slower, which is a negligible margin. There were 13 instances where the 3080 was faster by a 5% margin or greater, and 24 where it was slower by a 5% margin or greater.
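The exact averaging method behind these figures isn't detailed here. Treat the following as a hypothetical sketch, not necessarily how our numbers were produced. It assumes a geometric mean of per-game fps ratios (RTX 3080 12GB divided by RX 6900 XT), a common choice for benchmark summaries, and a ±5% threshold for the win/loss tally. The function names are illustrative. The only data used are the six 1440p margins quoted earlier in this article, so the printed figures are not our 50-game results.

```python
import math


def geomean_margin(ratios: list[float]) -> float:
    """Geometric mean of per-game fps ratios, returned as a % margin.

    Assumption: geometric mean, which treats a 20% win and a 20% loss
    (1.2 and 1/1.2) as cancelling out exactly.
    """
    log_mean = sum(math.log(r) for r in ratios) / len(ratios)
    return (math.exp(log_mean) - 1.0) * 100.0


def tally(ratios: list[float], threshold: float = 0.05) -> tuple[int, int]:
    """Count games where the 3080 is at least `threshold` faster / slower."""
    faster = sum(1 for r in ratios if r >= 1.0 + threshold)
    slower = sum(1 for r in ratios if r <= 1.0 - threshold)
    return faster, slower


# 1440p margins quoted in this article, as 3080 / 6900 XT fps ratios.
quoted_1440p = {
    "Dying Light 2": 1.10,
    "Total War Warhammer III": 1.20,
    "Call of Duty Warzone": 1 / 1.08,
    "Forza Horizon 5": 1 / 1.20,
    "Cyberpunk 2077": 1 / 1.11,
    "Metro Exodus Enhanced Edition": 2.55,  # "155% greater performance"
}

all_games = list(quoted_1440p.values())
no_metro = [r for name, r in quoted_1440p.items()
            if name != "Metro Exodus Enhanced Edition"]

print(f"With Metro:    {geomean_margin(all_games):+.1f}%")
print(f"Without Metro: {geomean_margin(no_metro):+.1f}%")
print("3080 faster / slower by 5%+ (no Metro):", tally(no_metro))
```

Even in this six-game sample, the single Metro result flips the average from a small deficit to a large lead for the 3080. That is why the averages are shown both with and without it. Across 50 games, the same outlier shifts the average by only a few points.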

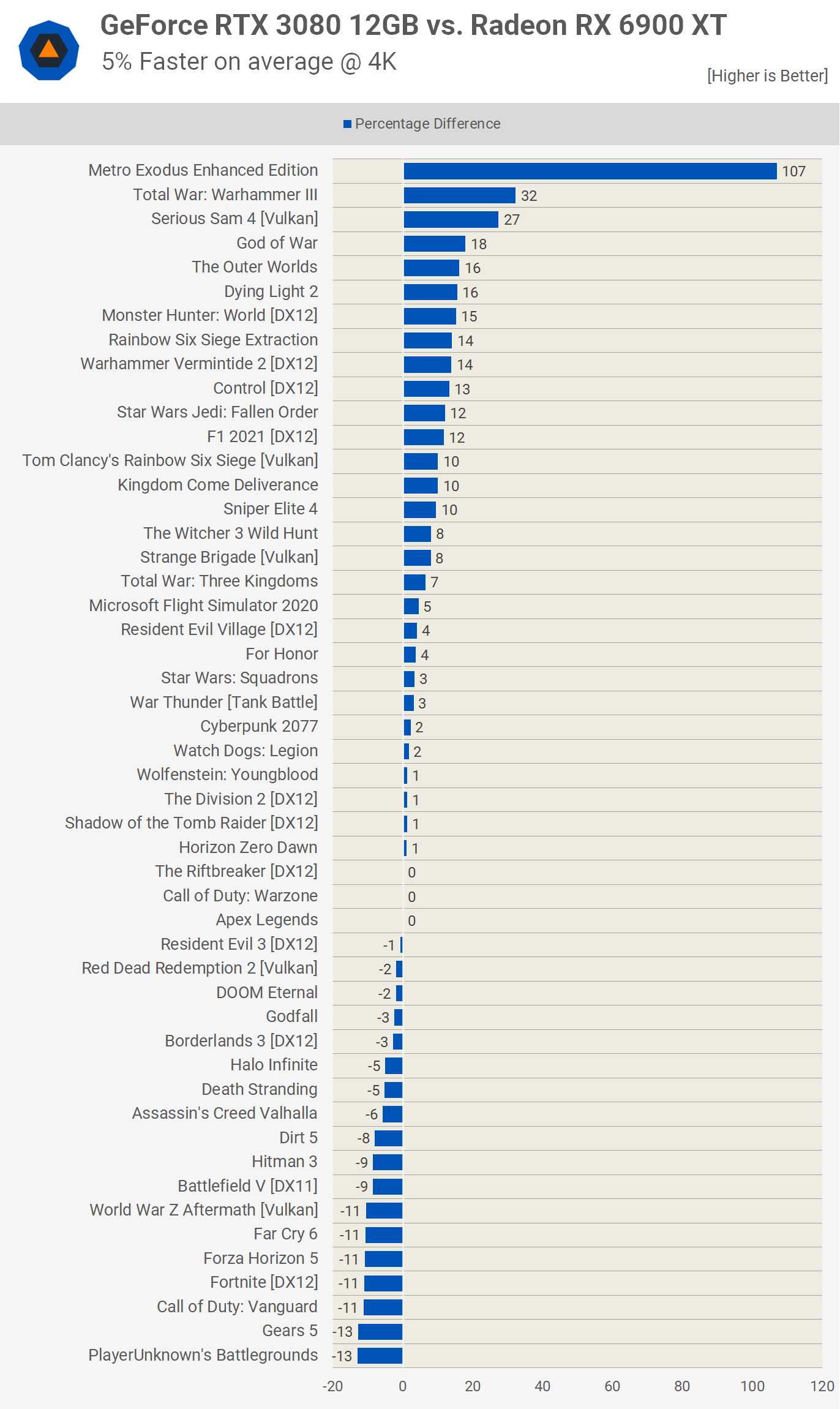

At 4K, the 3080 was 5% faster on average, and we typically deem a margin of 5% or less to be a tie. Removing Metro Exodus Enhanced Edition reduced the margin to 3%, though we see more significant wins for the GeForce GPU in titles such as Total War Warhammer III, Serious Sam 4, God of War, The Outer Worlds and Dying Light 2.

Which is Better?

That's how the Radeon RX 6900 XT and GeForce RTX 3080 12GB compare across a huge number of games. Now the question is, which one should you buy? Assuming you've given up waiting and just want something now...

On that note, we still strongly recommend holding out if you can, as we're slowly starting to see pricing improve as demand declines, particularly from miners. But we can't predict the future, and our advice is only meant to help guide you. For all we know, pricing could blow up next week and not recover for another 12 months, as unlikely as that sounds.

It's also worth keeping in mind that the Ampere and RDNA 2 architectures are well over a year old at this point and are expected to be replaced later this year by what should be vastly superior products. There's no guarantee you'll be able to buy those this year, though, so it's a gamble either way.

With all that in mind, if you're ready and willing to buy right now and have a $1500+ budget, both the RTX 3080 12GB and 6900 XT are available, so which one should you get?

Typically, the Radeon 6900 XT is the more affordable of the two, but of course pricing varies wildly from one region to the next, and even one day to the next within the same region. As it stands though, there's not enough of a price difference to make one of these GPUs the obvious choice based on pricing alone.

The thing is, it's the same story when looking at rasterization performance. Sure, they trade blows, but overall it's unlikely you'd be able to spot a difference between these two products when actually gaming.

This means that on price and rasterization performance, you can go either way right now... they are that similar. There's only one reason you might favor the 6900 XT, and that's its extra 4GB of VRAM (16GB versus 12GB), though it's hard to say when that will be of benefit. Frankly, 12GB should be enough for the foreseeable future and shouldn't become an issue within the realistic lifespan of this GPU generation as a high-end gaming product.

On the other hand, the GeForce RTX 3080 12GB has the advantage of DLSS and more mature ray tracing support, which generally yields far better results. In our opinion, DLSS has become a strong selling point of RTX-branded products as support and quality continue to improve. Meanwhile, AMD still doesn't have a real answer to DLSS. Yes, FSR has helped lessen that blow, and it's quite an impressive solution in its own right, but it doesn't nullify DLSS, and it isn't a key selling point of Radeon GPUs, largely because it supports all GPUs, GeForce included.

As DLSS continues to improve, so does ray tracing adoption, with more and more titles offering ray-traced effects, and games that make heavy use of them generally see performance tank on RDNA2-based products.

For me personally, ray tracing performance isn't a priority, but as we've said, the adoption and use of ray tracing keeps improving. I'm certainly much more interested in it today than I was even a year ago, and I expect that trend to continue.

Regardless of your stance, we feel it's easy to argue in favor of the RTX 3080 12GB given those features. In short, if you're keen to check out ray tracing and think you'll play the latest titles with it enabled, a GeForce GPU is a must as performance is typically much better. We'd only go for the 6900 XT if we weren't at all interested in ray tracing and it was at least a few hundred dollars cheaper.

It's also worth mentioning that all testing was conducted on a Ryzen 5000 system with Smart Access Memory (resizable BAR) enabled, which tends to favor Radeon GPUs more than GeForce. If your system doesn't yet support resizable BAR, the RTX 3080 12GB will look slightly better overall than what's been shown here.

Bottom line is that new technologies such as Smart Access Memory, ray tracing, and DLSS make it harder than ever to make a concise GPU recommendation, but hopefully this testing helped you narrow down your choice between these high-end GPUs, whether you're buying now or in the near future when prices (crossing fingers) get closer to MSRP.