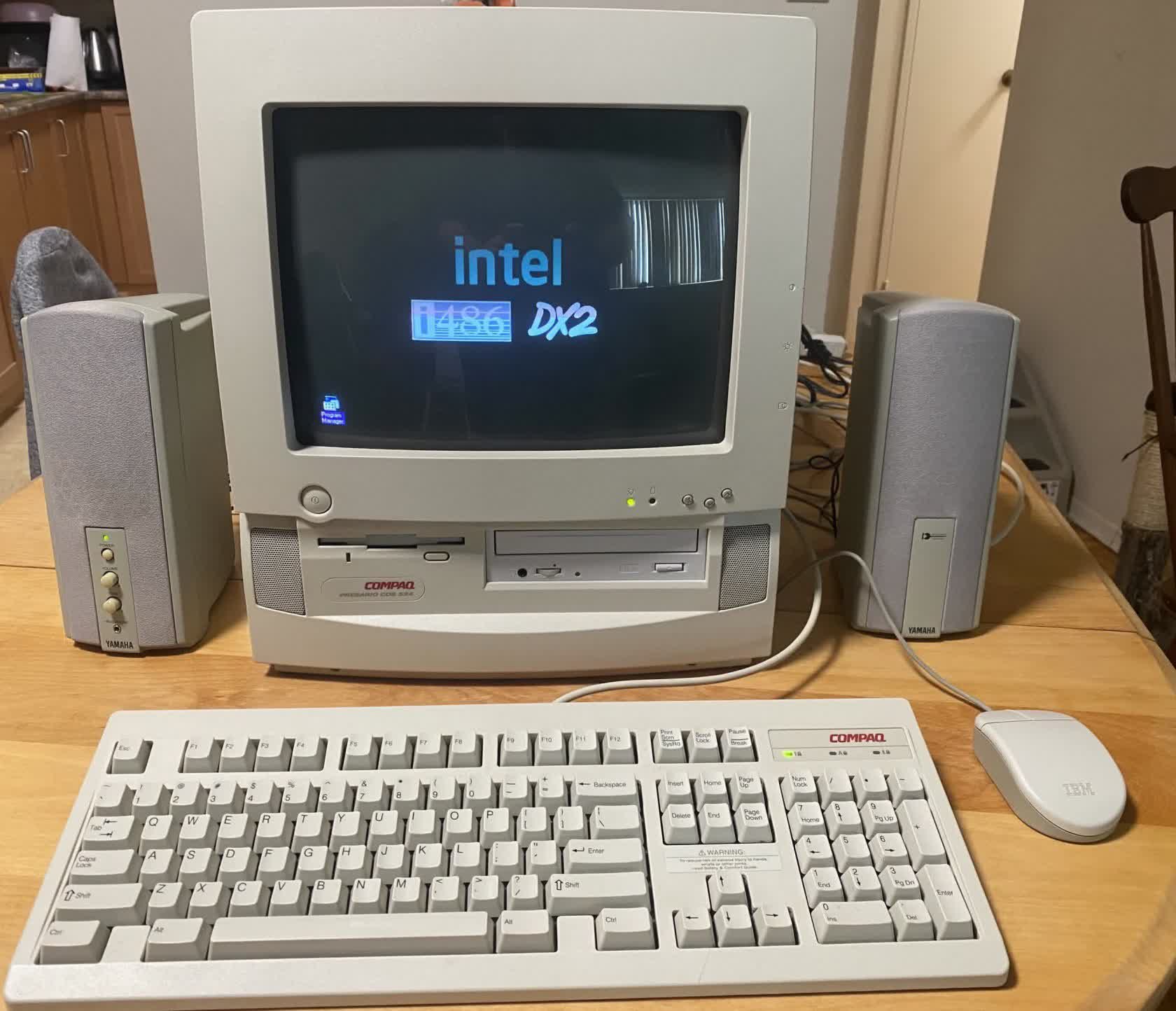

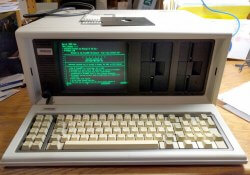

Compaq, Gone But Not Forgotten: The Best-Selling PC of the 1980s and 1990s

#TBT The year was 1982, and computers had finally made the jump from machines that took up a full room to something that could fit on a desk. But they were still far from portable, so three entrepreneurs decided to change that.

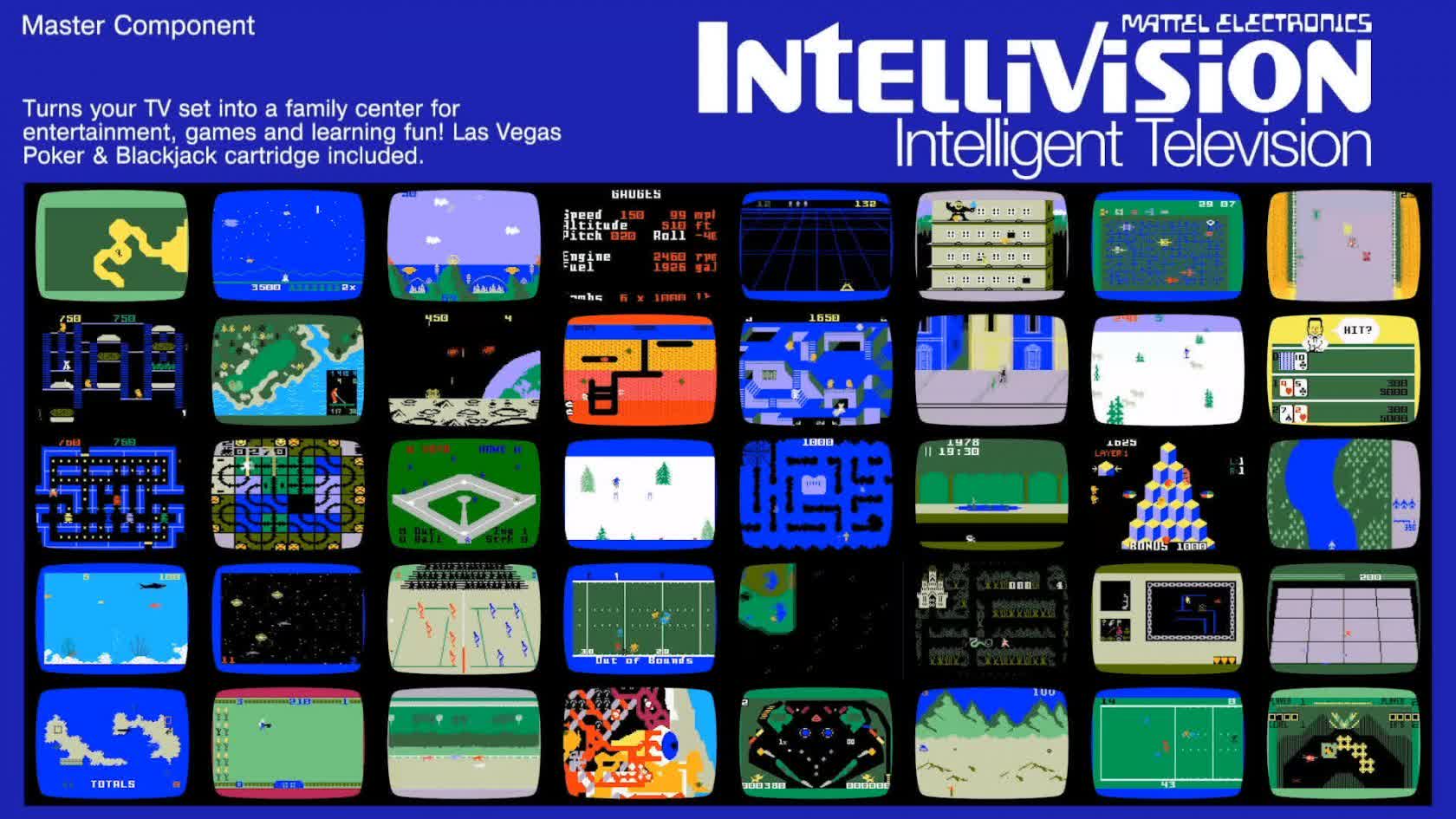

Intellivision: Gone But Not Forgotten

In the late 1970s, Mattel developed a gaming system called Intellivision to compete against the equally legendary Atari 2600. Both systems would leave a lasting impression on the history of console gaming.

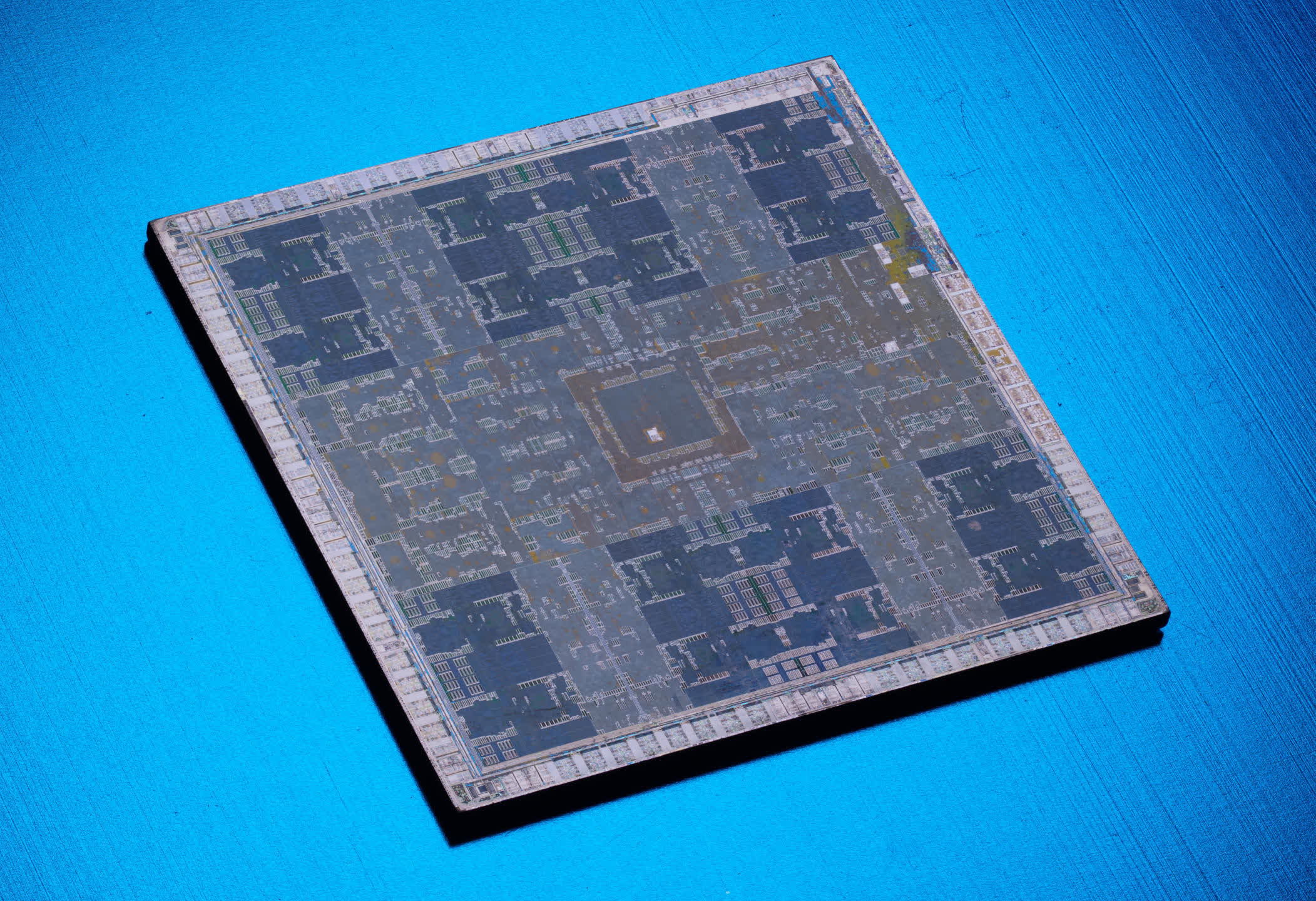

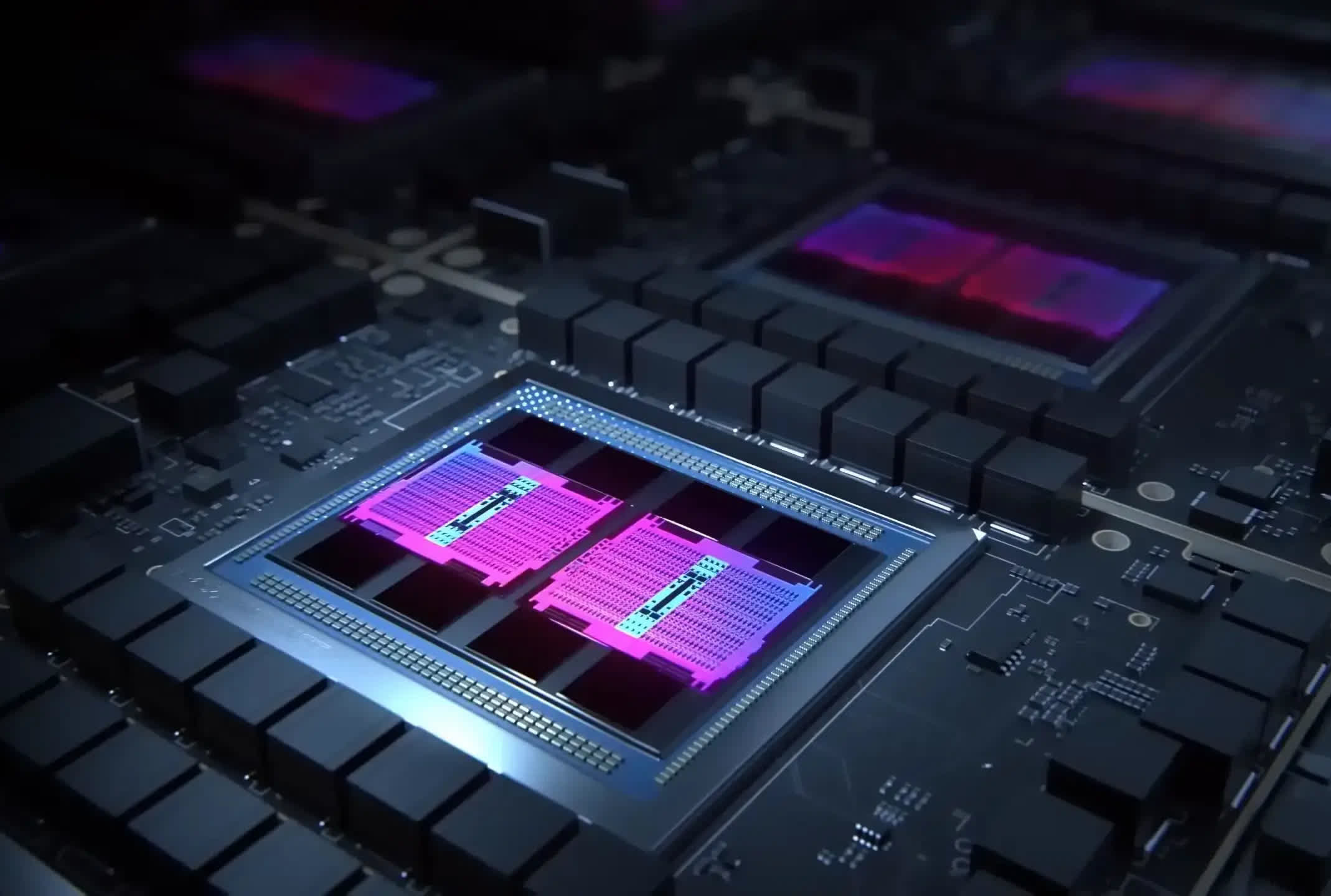

Goodbye to Graphics: How GPUs Came to Dominate Compute and AI

Gone are the days when the sole function of a graphics chip was, well, graphics. Let's explore how the GPU evolved from a modest pixel pusher into a blazing powerhouse of floating-point computation.

Two of the world's first desktop PCs discovered during routine home cleaning

In a nutshell: Even in this connected era where anything of value already seems to be on eBay, amazing discoveries are being made. In London, a routine house cleaning by a waste company uncovered two of the world's first desktop PCs, released back in the early 1970s, of which only three are known to still exist today.

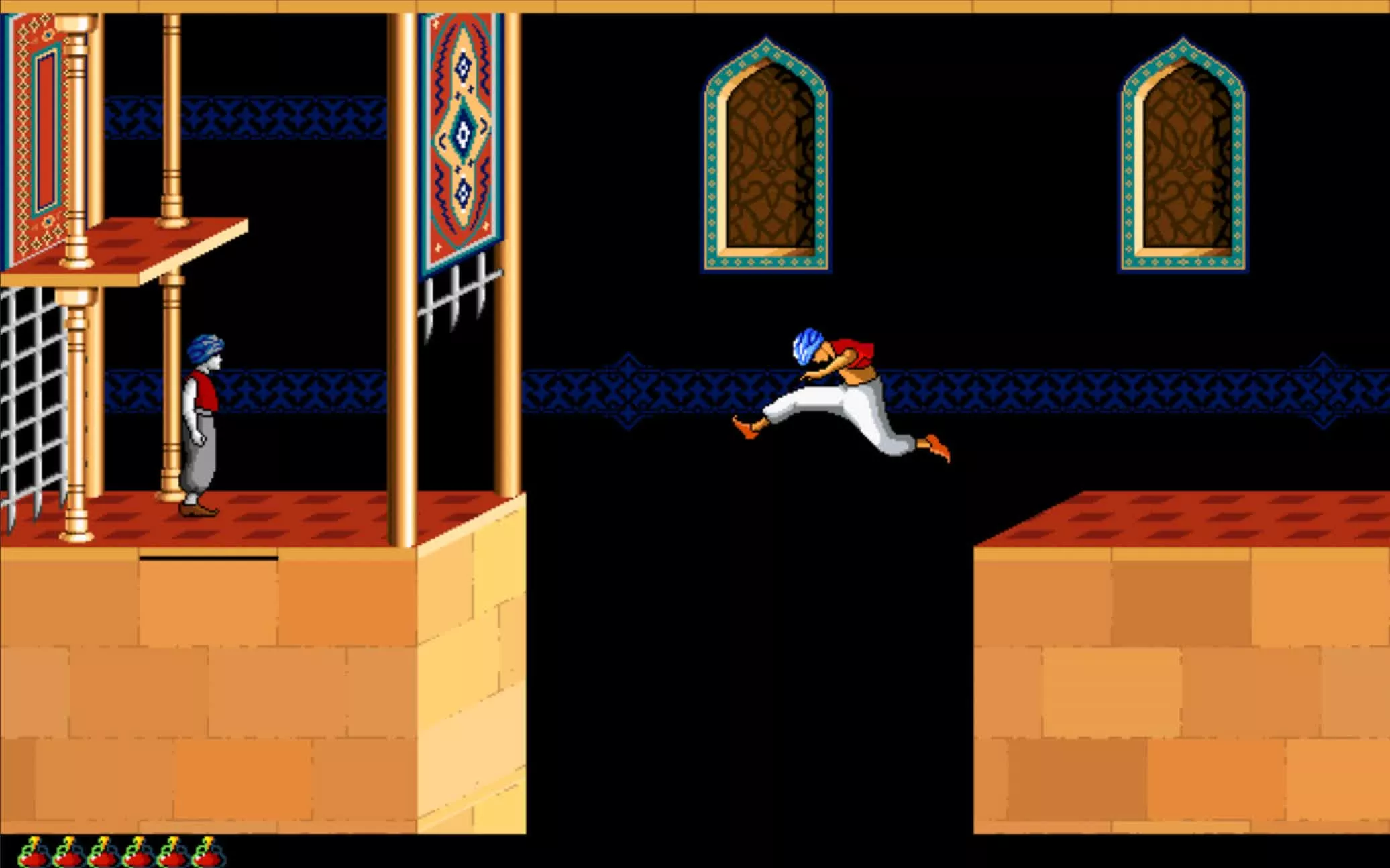

35 Years of Prince of Persia: A Game Series Like No Other

The Prince of Persia series likely means different things to different generations of gamers, from unprecedented realism in the 2D era to unforgettable 3D platformers and pioneering mobile games. Whenever a new Prince of Persia game is released, you know it will be a game like no other.

Nokia: The Story of the Once-Legendary Phone Maker

#ThrowBackThursday Most people who hear the word "Nokia" associate it with mobile phones, but the company has a convoluted history to tell, spanning more than 150 years from its humble beginnings through many reinventions.

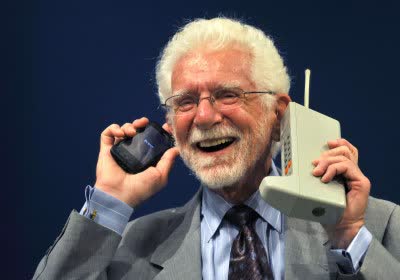

The Motorola DynaTAC was the first commercial mobile phone, released in 1983. What was its price?

Ripping and Tearing: 3 Decades of Doom

Doom is rightly considered the granddaddy of the FPS genre, a claim few games can make. Let's look at each memorable entry in the Doom series from development to reception.

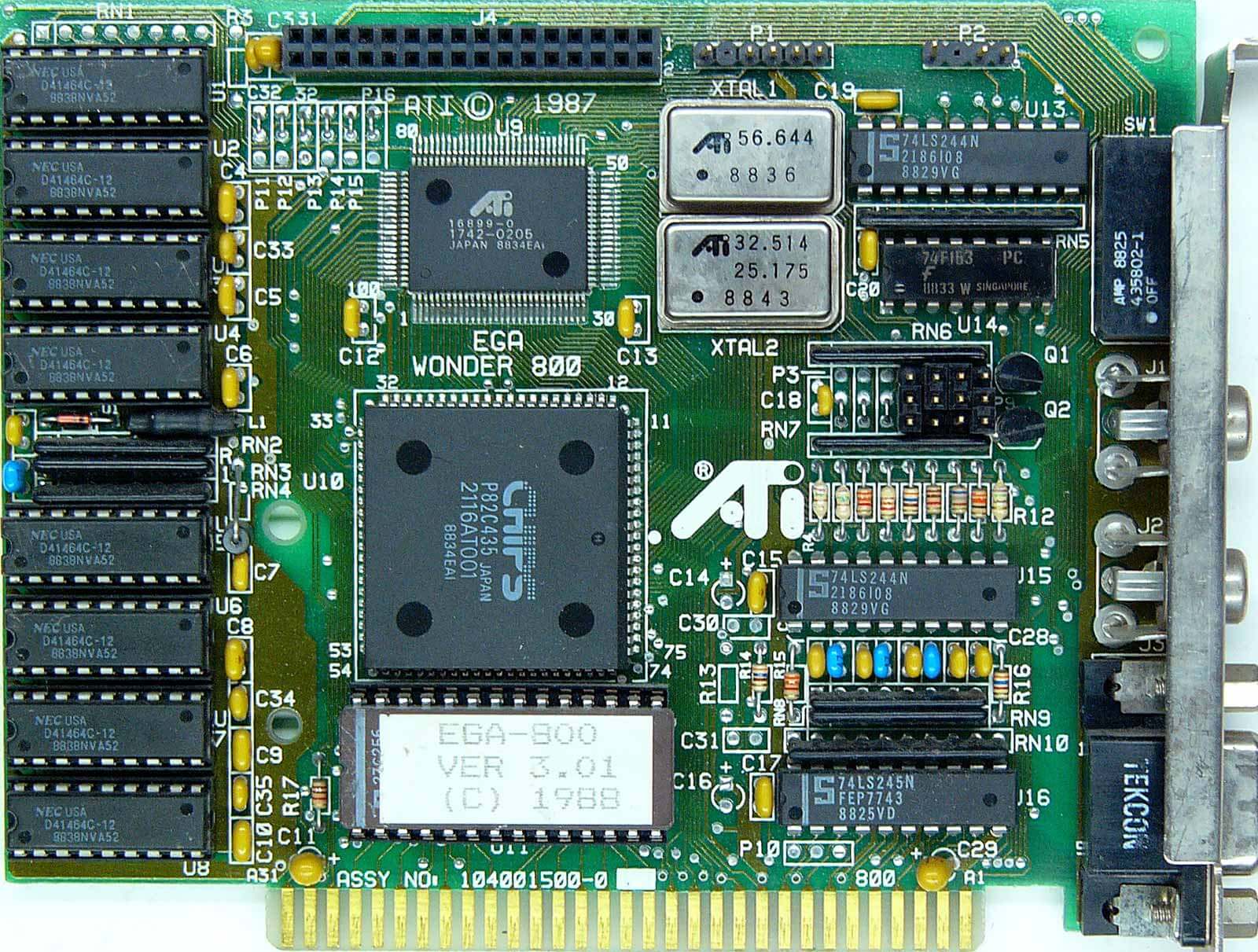

The History of the Modern Graphics Processor

3D graphics transformed a somewhat dull PC industry into a spectacle of light and magic after generations of innovative endeavor. TechSpot's look at the history of the GPU goes from the early days of 3D to game-changing hardware and beyond.

Who famously said: "There is no reason anyone would want a computer in their home"?

What kind of company was Nokia when it was founded by Finnish engineer Fredrik Idestam in 1865?

A PC Gaming Music Journey: From Doom to Terraria, System Shock, and More Memorable Soundtracks

#TBT A journey through memorable video game music, this second article is dedicated exclusively to PC video game soundtracks. We have our hands full given the wealth of excellent material available.

Who were the 3 founders of Apple? Steve Jobs, Steve Wozniak, and ...?

4 Years of AMD RDNA: Another Zen or a New Bulldozer?

It's been four years since AMD launched RDNA, the successor to the venerable GCN graphics architecture. We take a look through the tech and numbers to see just how successful it's been.

Where did the name "Bluetooth" come from?

What was the first wristwatch with the ability to make phone calls?

Which company invented the hard disk drive?

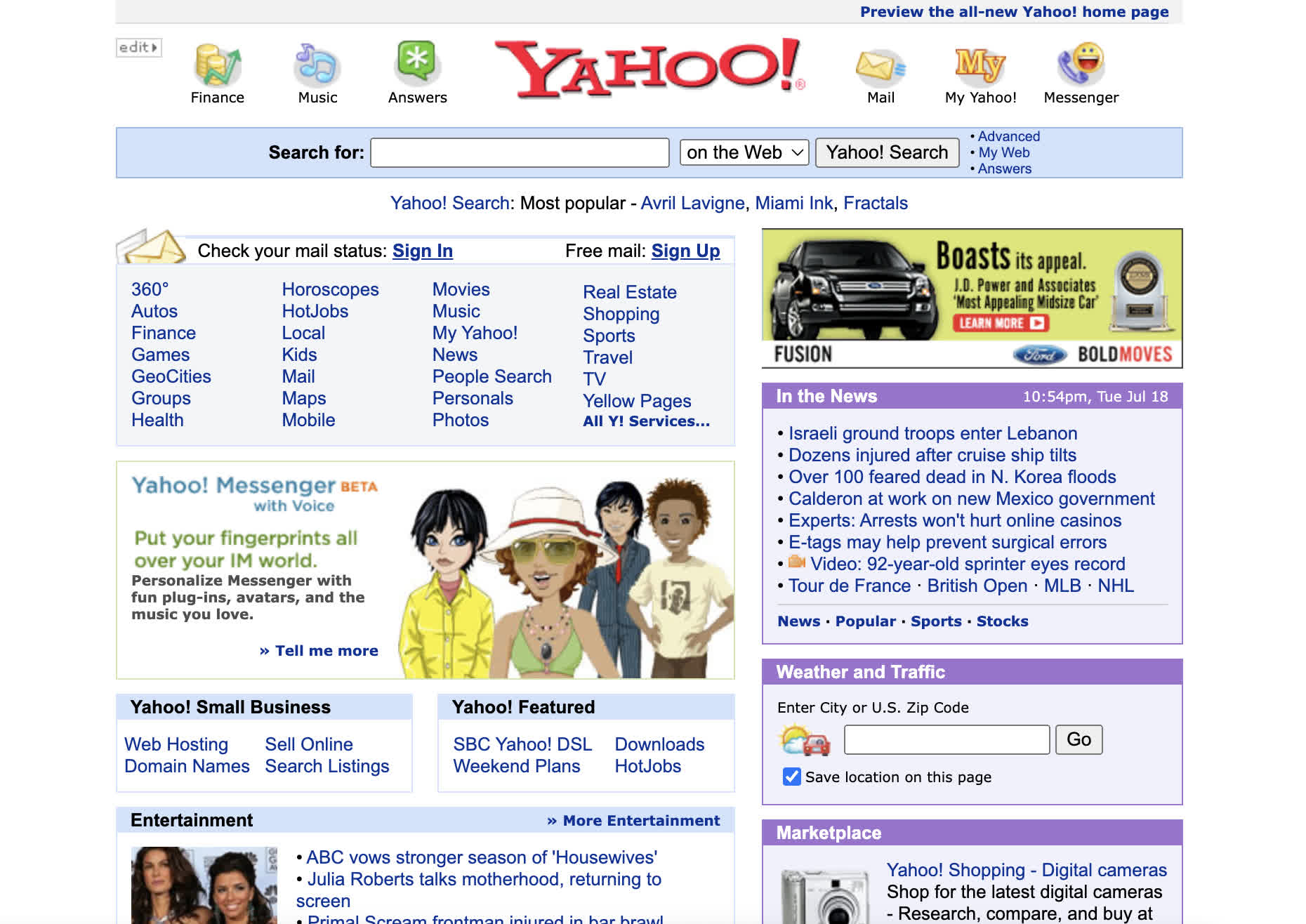

What Went Wrong With Yahoo!?

At the turn of the millennium, Yahoo! was the most visited site on the web. In Web 1.0 terms, Yahoo! was like Google and Facebook combined. So what happened?

Valve's Gabe Newell led a team at Microsoft that ported which game to Windows 95?

50 Years of Video Games - Part 3: Good Games Always Prevail

With an increasing number of people spending more of their hard-earned money on the latest tech and services, games expanded into new arenas. History showed once more that new tech paves the way for new opportunities.

What was the size of the first-generation floppy disk?

S3 Graphics: Gone But Not Forgotten

#TBT These days it's rare to see a new hardware company break ground in the world of PCs, but 30 years ago, they were popping up all over the place. Join us as we pay tribute to S3 and see how its remarkable story unfolded over the years.

Then and Now: How 30 Years of Progress Have Changed PCs

Over the last 30 years, the PC has utterly transformed in appearance, capability, and usage, going from hulking beige boxes to an astonishing array of powerful, colorful computers.