Rumor mill: We might still be more than a year away from Nvidia's next-gen RTX 5000-series graphics cards hitting the market, but that doesn't mean rumors aren't already arriving. The latest of these claims the cards will be built on TSMC's 3nm process node and come with features such as DisplayPort 2.1, which AMD already offers, and PCI Express 5.0.

Prolific and usually reliable leaker Kopite7kimi made the RTX 5000 claims on X. They might not be the most earth-shattering rumors, but that means they're likely accurate.

TSMC3

– kopite7kimi (@kopite7kimi) November 15, 2023

Moving from the TSMC 5nm process node used for the Lovelace generation to 3nm for its successor is an obvious step for Nvidia. Apple's recent M3 SoCs utilize the 3nm node, which TSMC says brings a 15% performance improvement compared to 5nm, along with better efficiency (~30%) while shrinking the die size by around 42%. No word on whether the RTX 5000 will use a custom node or one of TSMC's announced N3 family: N3E, N3P, and N3A.

√

– kopite7kimi (@kopite7kimi) November 15, 2023

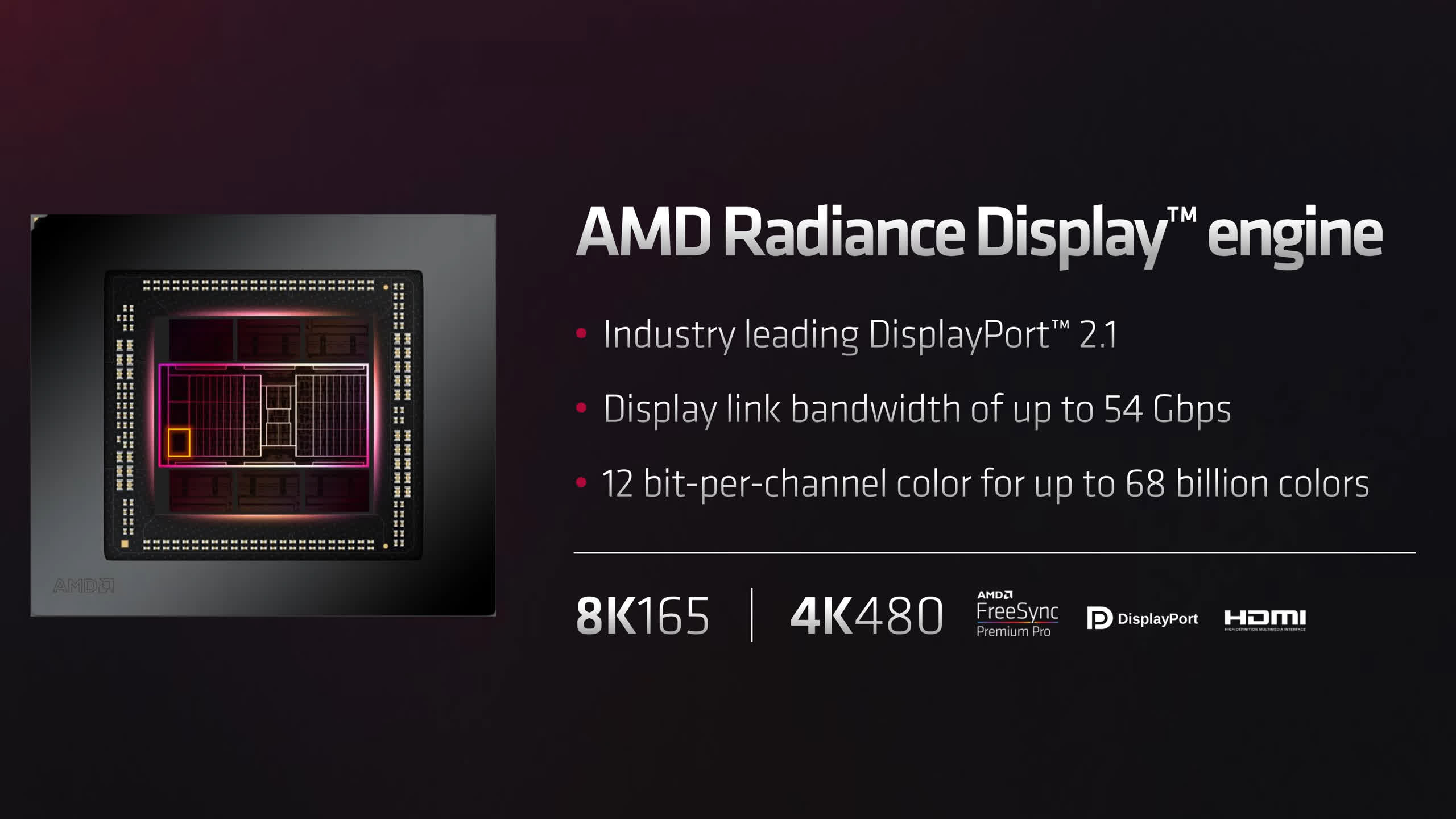

The RTX 5000 Blackwell graphics cards are also predicted to offer DisplayPort 2.1. Some have been disappointed with the RTX 4000 series sticking with DisplayPort 1.4a connections while AMD's RX 7000 line uses DP 2.1. AMD's implementation uses UHBR 13.5, which supports a link bandwidth of up to 54 Gbps. That's not the full 80 Gbps (UHBR20) that DisplayPort 2 can provide, but it is a huge bandwidth upgrade over DP 1.4. This allows for future display types such as 8K at 165Hz and 4K at a blistering 480Hz. It's not clear which DisplayPort 2.1 standard Nvidia will choose.
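For a sense of where those bandwidth figures come from, here is a rough back-of-the-envelope sketch. It assumes a four-lane link, the published per-lane rates (8.1 Gbps for DP 1.4's HBR3, 13.5 Gbps for UHBR 13.5, and 20 Gbps for UHBR20), and counts only line-encoding overhead (8b/10b for DP 1.4, 128b/132b for DP 2.x). The names `LINK_MODES`, `raw_link_gbps`, and `payload_gbps` are made up for illustration, and the payload numbers are approximations that ignore other protocol overhead.

```python
# Rough DisplayPort link-bandwidth arithmetic (illustrative sketch only).
# Each entry: (per-lane rate in Gbps, line-encoding efficiency).
LINK_MODES = {
    "HBR3 (DP 1.4)": (8.1, 8 / 10),         # 8b/10b encoding
    "UHBR13.5 (DP 2.1)": (13.5, 128 / 132),  # 128b/132b encoding
    "UHBR20 (DP 2.1)": (20.0, 128 / 132),
}
LANES = 4  # assumption: a full four-lane DisplayPort link


def raw_link_gbps(mode: str) -> float:
    """Raw link bandwidth: per-lane rate times the number of lanes."""
    rate, _ = LINK_MODES[mode]
    return rate * LANES


def payload_gbps(mode: str) -> float:
    """Approximate usable bandwidth after line-encoding overhead only."""
    rate, efficiency = LINK_MODES[mode]
    return rate * LANES * efficiency


if __name__ == "__main__":
    for mode in LINK_MODES:
        print(
            f"{mode}: raw {raw_link_gbps(mode):.1f} Gbps, "
            f"approx. payload {payload_gbps(mode):.2f} Gbps"
        )
```

Under these assumptions, UHBR 13.5 works out to the 54 Gbps quoted above and UHBR20 to 80 Gbps, while DP 1.4 tops out at 32.4 Gbps raw.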

PCIe 5.0 compatibility is another rumored feature in the RTX 5000 series. The cards are also expected to keep the 16-pin power connector, albeit using the revised 12V-2x6 connector. The specter of the melting RTX 4090s remains a problem for buyers and Team Green, but there have been no reported issues since the company updated its flagship to 12V-2x6 adapters earlier this year. Tests have shown that the revised power cables are safe even when not fully connected.

There are plenty of other rumors about Blackwell, including its use of GDDR7 memory, but it's best to wait until more compelling evidence of these features arrives.

The big question is when the RTX 5000 series will land. Many believe it won't be here until 2025, but the next-gen cards could launch late next year after the Blackwell HPC GPUs start shipping.

One sign that the RTX 5000 cards won't launch until 2025 is the seemingly imminent arrival of the RTX 4000 Super variants. There has been a slew of rumors that the RTX 4080 Super, 4070 Super, and 4070 Ti Super will be unveiled at CES in January. If they prove accurate, Nvidia might not want to launch a new card generation in the same year. Let's hope the company prices the RTX 5000 line a bit more sensibly, too.

https://www.techspot.com/news/100894-nvidia-geforce-rtx-5000-graphics-cards-expected-feature.html